Adobe Stock: The Best Stock Photo Provider (Not Only) For Creative Cloud Users

Last year, Adobe launched its image service Adobe Stock with millions of royalty-free photos, illustrations, and videos. Adobe had previously acquired the stock photo provider Fotolia, which paved the way for the new service. Adobe Stock has since become a fixed part of the Creative Cloud. But what exactly can Adobe Stock do? What are its advantages over Fotolia and other services? How much do the images cost, and how large is the selection?

50 Million Images from Fotolia

Because Adobe bought Fotolia, Adobe Stock was able to start immediately with a wide variety of photos on offer. The entire Fotolia “Standard Collection” is part of Adobe Stock’s assortment. This includes around 50 million photos and illustrations. Recently, the videos of the “Standard Collection” were added as well.

However, the “Infinite Collection”, which contains material from renowned photo agencies, as well as the “Instant Collection” with photos taken via smartphone, remain exclusive to Fotolia.

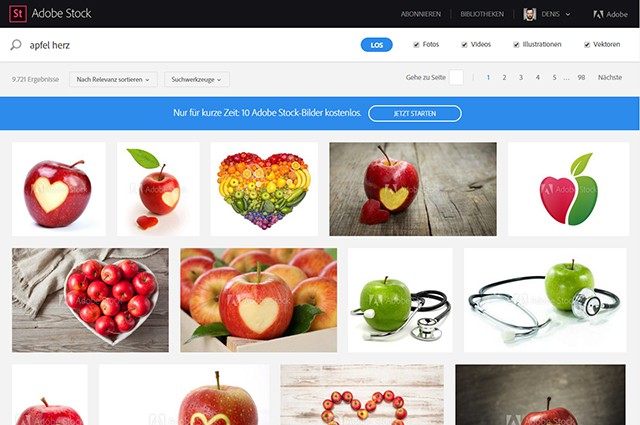

Search on the Adobe Stock Website

When searching for suitable material, you can filter the results to display only photos, illustrations, videos, or vector graphics. Additional search filters restrict the results to a certain image orientation (portrait or landscape) or color. You can also search specifically for images with or without people in them. On top of that, pre-set categories help you narrow the results down to suitable images.

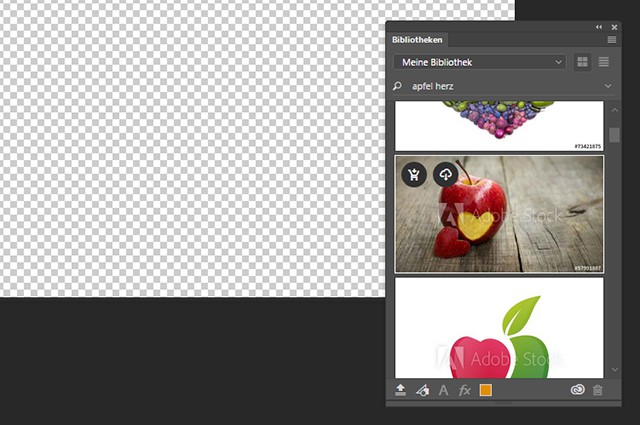

Using Adobe Stock Directly from the Creative Cloud

The unique thing about Adobe Stock is its integration into the Creative Cloud. This way, you have direct access to Adobe Stock in Photoshop, InDesign, and other Creative Cloud applications. The libraries, introduced in 2015, allow you to save assets such as colors, formats and graphics.

The Creative Cloud synchronizes everything that has been collected in the libraries so that all assets are available in all applications. You can use these libraries to search for photos in Adobe Stock, and you can add suitable photos as previews directly to your libraries.

The Search Via Libraries in Photoshop

When searching on the Adobe Stock website, you can also save photos as previews directly to one of your Creative Cloud libraries. Alternatively, you can just download preview images to your computer. All preview images contain a watermark and are only available in low resolution. Compared to the Fotolia preview images, however, the resolution and quality of Adobe Stock previews are significantly higher.

Comparison of the Preview Images of Adobe Stock and Fotolia

While Fotolia's preview images are rarely good enough for presentable drafts, the ones from Adobe Stock come in a significantly better resolution and quality. This is a clear advantage over Fotolia.

Simple and Fast Workflow

Images that you save into your library directly from Adobe Stock are available as linked smart objects. You can edit these images in Photoshop and apply filters or corrections, for example. However, tools like the eraser or the clone stamp are not available. To use these, you have to rasterize the smart object.

A Linked Smart Object Placed in Photoshop

The linked smart object has further advantages, however. As soon as you license the image, Adobe Stock turns the preview into a high-resolution image and removes the watermark, so you don’t need to replace the preview manually. This simplifies the workflow and saves a good amount of time. Wherever you used the preview image, across all files and applications, it will be replaced with the licensed image.

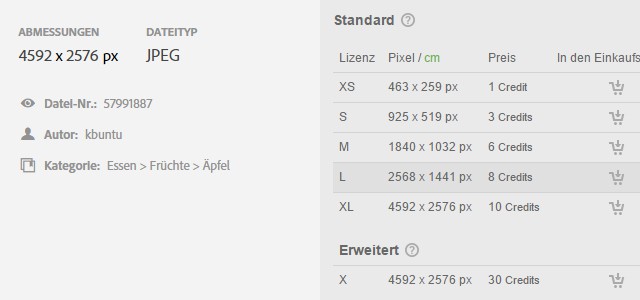

Simple Licensing Model

While Fotolia requires you to choose between different licenses for every photo, Adobe Stock offers a very simple model. Instead of providing pictures in various resolutions under various licenses (Fotolia has six standard licenses and one extended license), Adobe Stock offers only one license, which provides the image in the highest resolution possible.

The Adobe Stock license is equal to Fotolia’s Standard license in its highest resolution

When using Adobe Stock, you also don’t pay with credits. A single Adobe Stock image costs 9,99 Euro by default. There is a monthly subscription for Creative Cloud users that offers ten photos for 29,99 Euro. The standard price of this subscription is 49,99 Euro for those who are not subscribed to the Creative Cloud.

Comparison of Adobe Stock’s and Fotolia’s Licensing Models

A price comparison between Fotolia and Adobe Stock is difficult because the credits you need to buy images on Fotolia vary in price. One Fotolia credit costs between 74 Cent and 1,35 Euro, depending on how many you buy. For a full-resolution image on Fotolia, you need to choose the XL or XXL license. Some photos are only available with an XL license. Most of the time, you’ll pay ten credits for an XL license, which comes to between 7,40 and 13,50 Euro. With Adobe Stock, you always pay 9,99 Euro without a subscription.
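The arithmetic behind this comparison can be sketched in a few lines of Python. This is only an illustration using the prices quoted in this article; the function and constant names are made up for the example, and amounts are kept in cents to avoid rounding issues.

```python
# Prices in euro cents, taken from the figures quoted in this article.
FOTOLIA_CREDIT_MIN_CENTS = 74    # cheapest price per credit
FOTOLIA_CREDIT_MAX_CENTS = 135   # most expensive price per credit
XL_LICENSE_CREDITS = 10          # typical credit cost of an XL license
ADOBE_STOCK_SINGLE_CENTS = 999   # single image without a subscription


def fotolia_price_range(credits=XL_LICENSE_CREDITS):
    """Return (lowest, highest) price in cents for an image costing `credits`."""
    return credits * FOTOLIA_CREDIT_MIN_CENTS, credits * FOTOLIA_CREDIT_MAX_CENTS


def format_euro(cents):
    """Format cents with a decimal comma, as used in this article."""
    return f"{cents // 100},{cents % 100:02d}"


low, high = fotolia_price_range()
print(f"Fotolia XL license: {format_euro(low)} to {format_euro(high)} Euro")
print(f"Adobe Stock single image: {format_euro(ADOBE_STOCK_SINGLE_CENTS)} Euro")
```

The Adobe Stock price sits inside the Fotolia range, so which service is cheaper for a single image depends on how much you paid per Fotolia credit.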

Those who need photos on a regular basis get a good deal with their Creative Cloud subscription: at 29,99 Euro for ten photos, you pay roughly 3 Euro per photo. By the way, you can also buy more than ten photos a month on the 29,99 Euro subscription. Every additional photo costs just 2,99 Euro.
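To see from how many photos per month the Creative Cloud subscription pays off compared to single purchases, here is a minimal Python sketch. It uses the prices quoted in this article and assumes that the full monthly fee is due no matter how many of the ten included photos you actually use; all names are hypothetical.

```python
# Prices in euro cents, taken from the figures quoted in this article.
SUBSCRIPTION_CENTS = 2999    # monthly price for Creative Cloud users
INCLUDED_PHOTOS = 10         # photos covered by the monthly fee
EXTRA_PHOTO_CENTS = 299      # each additional photo on the subscription
SINGLE_PHOTO_CENTS = 999     # one image without a subscription


def subscription_cost(photos):
    """Monthly cost in cents with the subscription (assumes the full fee is always due)."""
    extra = max(0, photos - INCLUDED_PHOTOS)
    return SUBSCRIPTION_CENTS + extra * EXTRA_PHOTO_CENTS


def single_purchase_cost(photos):
    """Monthly cost in cents when every photo is bought individually."""
    return photos * SINGLE_PHOTO_CENTS


def break_even_photos():
    """Smallest number of photos per month at which the subscription is cheaper."""
    photos = 1
    while single_purchase_cost(photos) <= subscription_cost(photos):
        photos += 1
    return photos


print("Subscription is cheaper from", break_even_photos(), "photos per month")
for n in (3, 10, 12):
    print(n, "photos:", subscription_cost(n), "vs", single_purchase_cost(n), "cents")
```

Under these assumptions, three single images are still marginally cheaper than the subscription, while the fourth image tips the balance.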

Conclusion

Adobe Stock has some decisive advantages over other stock providers. The close integration with the Creative Cloud creates a fast and simple workflow. The preview images are of much better quality than Fotolia's, and the special subscription for Creative Cloud users offers photos at a bargain price. That is reason enough to give Adobe Stock a try. For CC users, it’s a no-brainer anyway…

(dpe)
