Voice-Controlled Web Visualizations with Vue.js and Machine Learning

In this tutorial, we’ll pair Vue.js, three.js and LUIS (Cognitive Services) to create a voice-controlled web visualization.

But first, a little context

Why would we need to use voice recognition? What problem might something like this solve?

A while ago I was getting on a bus in Chicago. The bus driver didn’t see me and closed the door on my wrist. As he started to go, I heard a popping sound in my wrist and he did eventually stop as the other passengers started yelling, but not before he ripped a few tendons in my arm.

I was supposed to take time off work but, typical for museum employees at that time, I was on contract and had no real health insurance. I didn’t make much to begin with so taking time off just wasn’t an option for me. I worked through the pain. And, eventually, the health of my wrist started deteriorating. It became really painful to even brush my teeth. Voice-to-text wasn’t the ubiquitous technology that it is today, and the best tool then available was Dragon. It worked OK, but was pretty frustrating to learn and I still had to use my hands quite frequently because it would often error out. That was 10 years ago, so I’m sure that particular tech has improved significantly since then. My wrist has also improved significantly in that time.

The whole experience left me with a keen interest in voice-controlled technologies. What can we do if we can control the behaviors of the web in our favor, just by speaking? For an experiment, I decided to use LUIS, a machine learning-based service for building natural language understanding into apps, bots, and IoT devices through custom models that continuously improve. This way, we can create a visualization that responds to any voice, and it can improve itself by learning along the way.

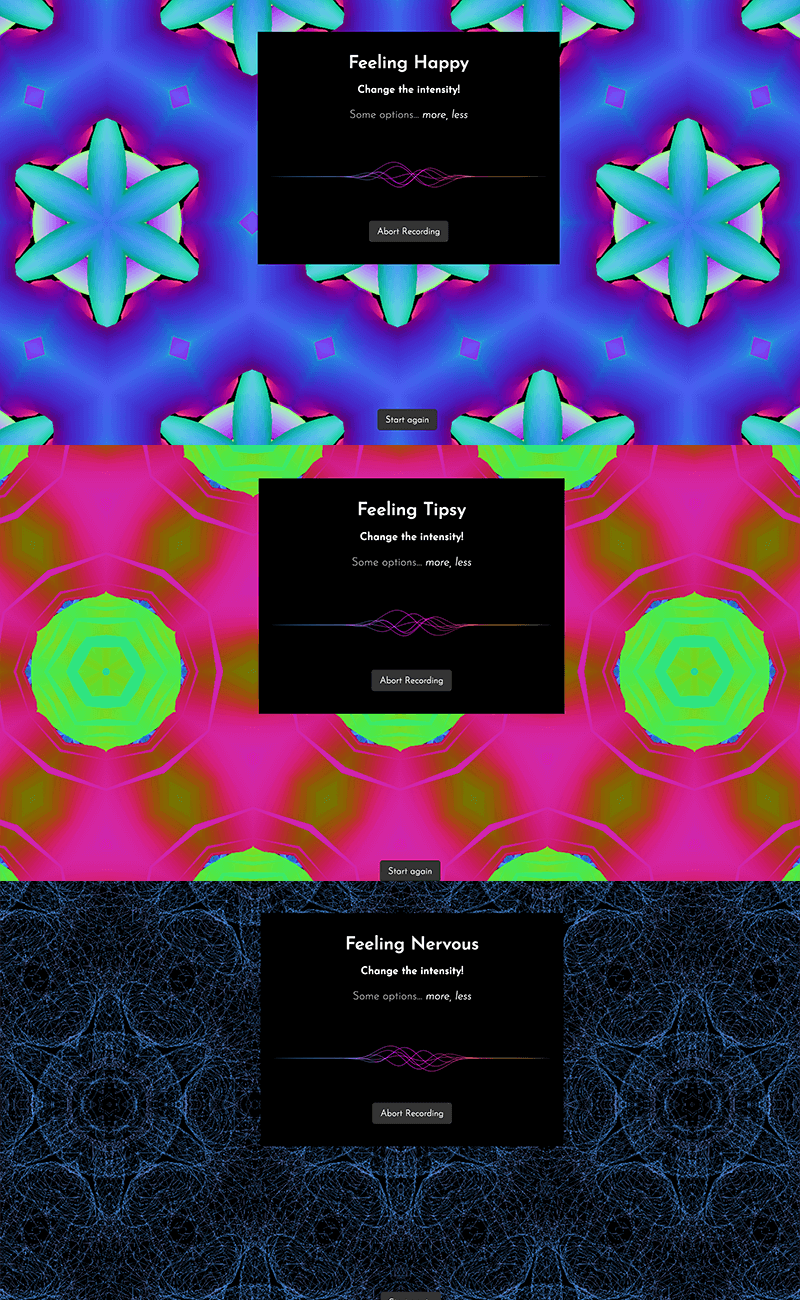

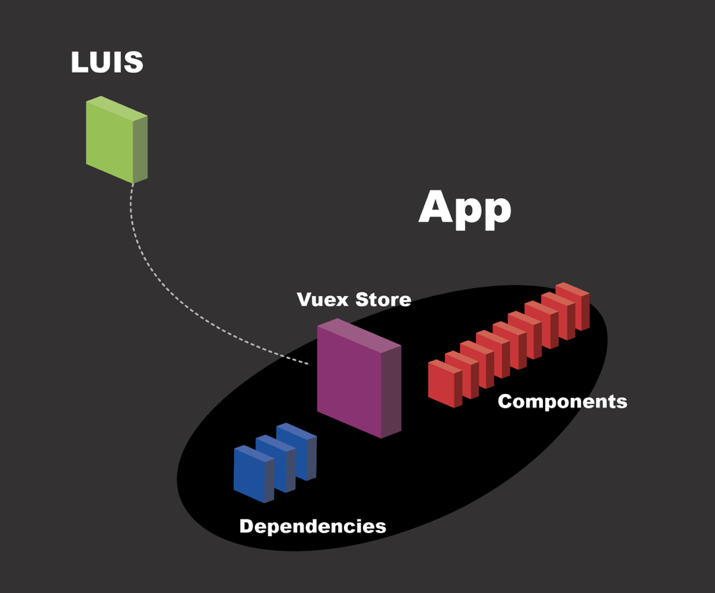

Here’s a bird’s eye view of what we’re building:

Setting up LUIS

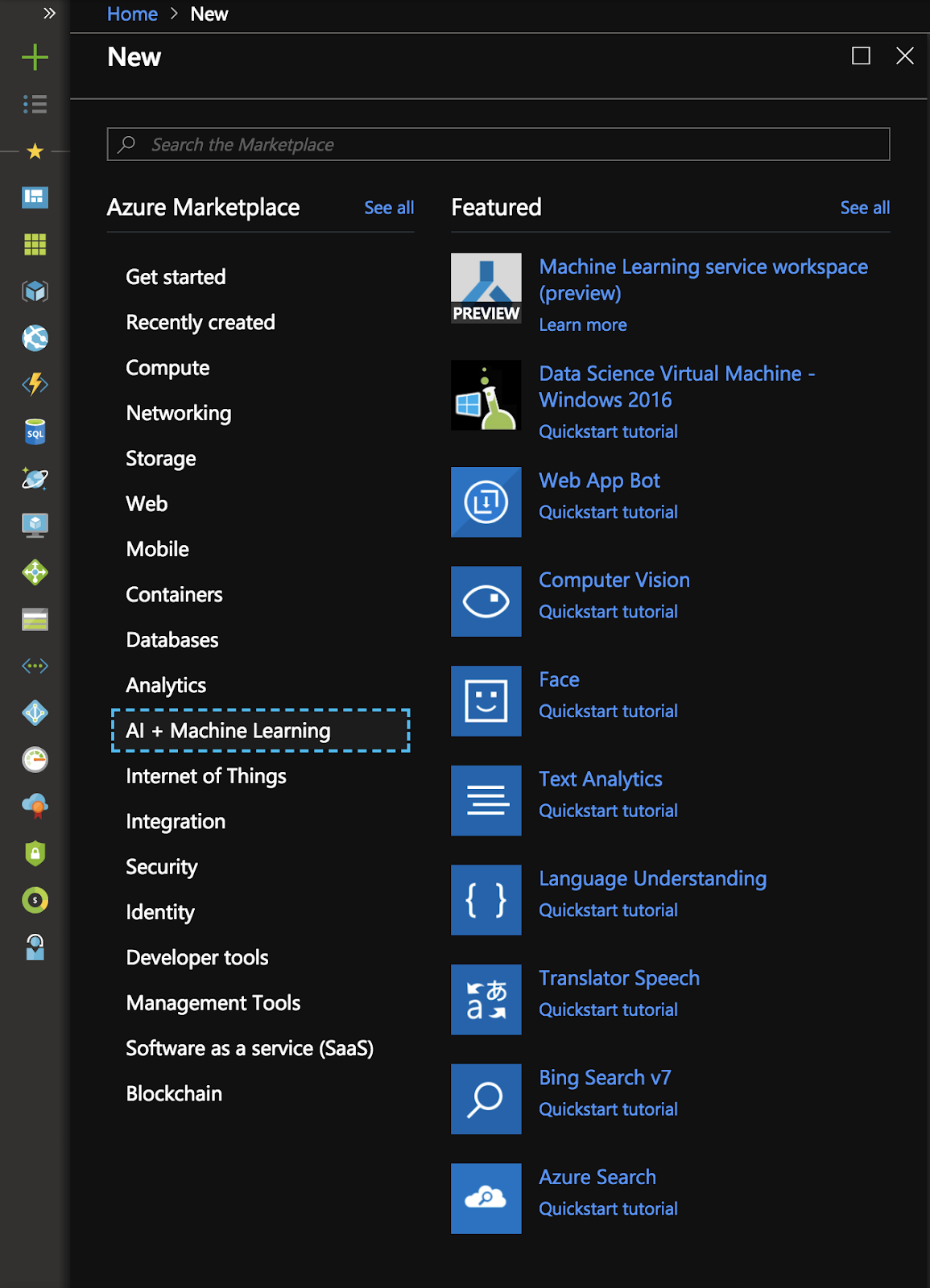

We’ll get a free trial account for Azure and then go to the portal. We’ll select Cognitive Services.

After picking New → AI/Machine Learning, we’ll select “Language Understanding” (or LUIS).

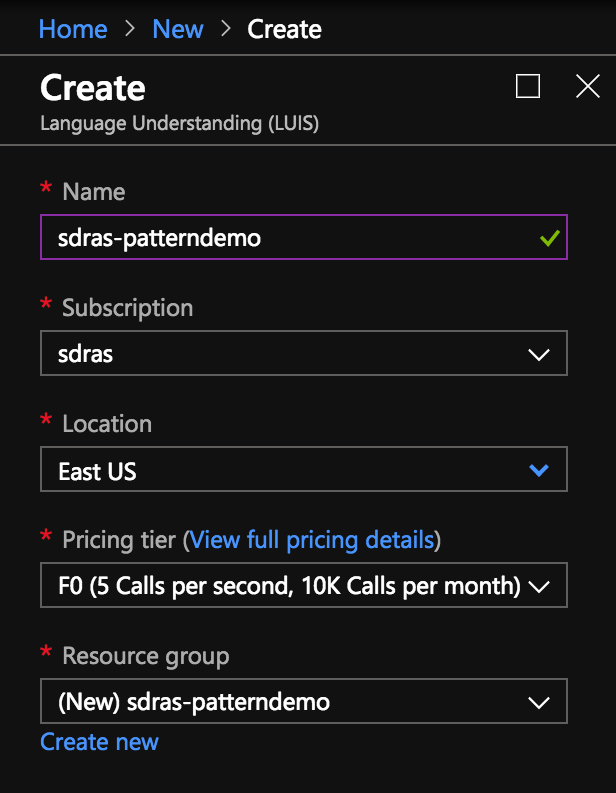

Then we’ll pick out our name and resource group.

We’ll collect our keys from the next screen and then head over to the LUIS dashboard.

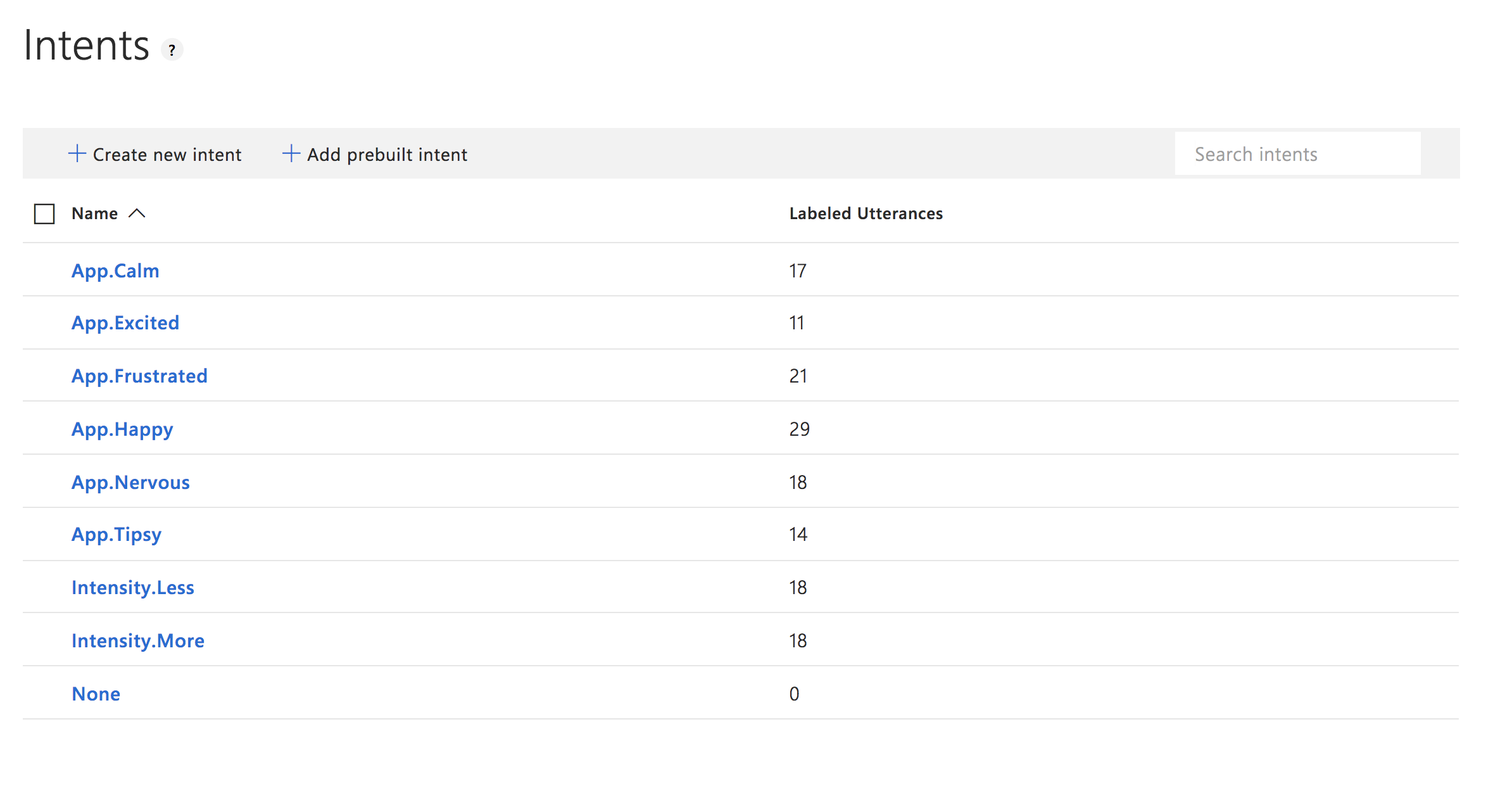

It’s actually really fun to train these machines! We’ll set up a new application and create some intents, which are outcomes we want to trigger based on a given condition. Here’s the sample from this demo:

You may notice that we have a naming scheme here. We do this so that it’s easier to categorize the intents. We’re going to first figure out the emotion and then listen for the intensity, so the initial intents are prefixed with either App (these are used primarily in the App.vue component) or Intensity.

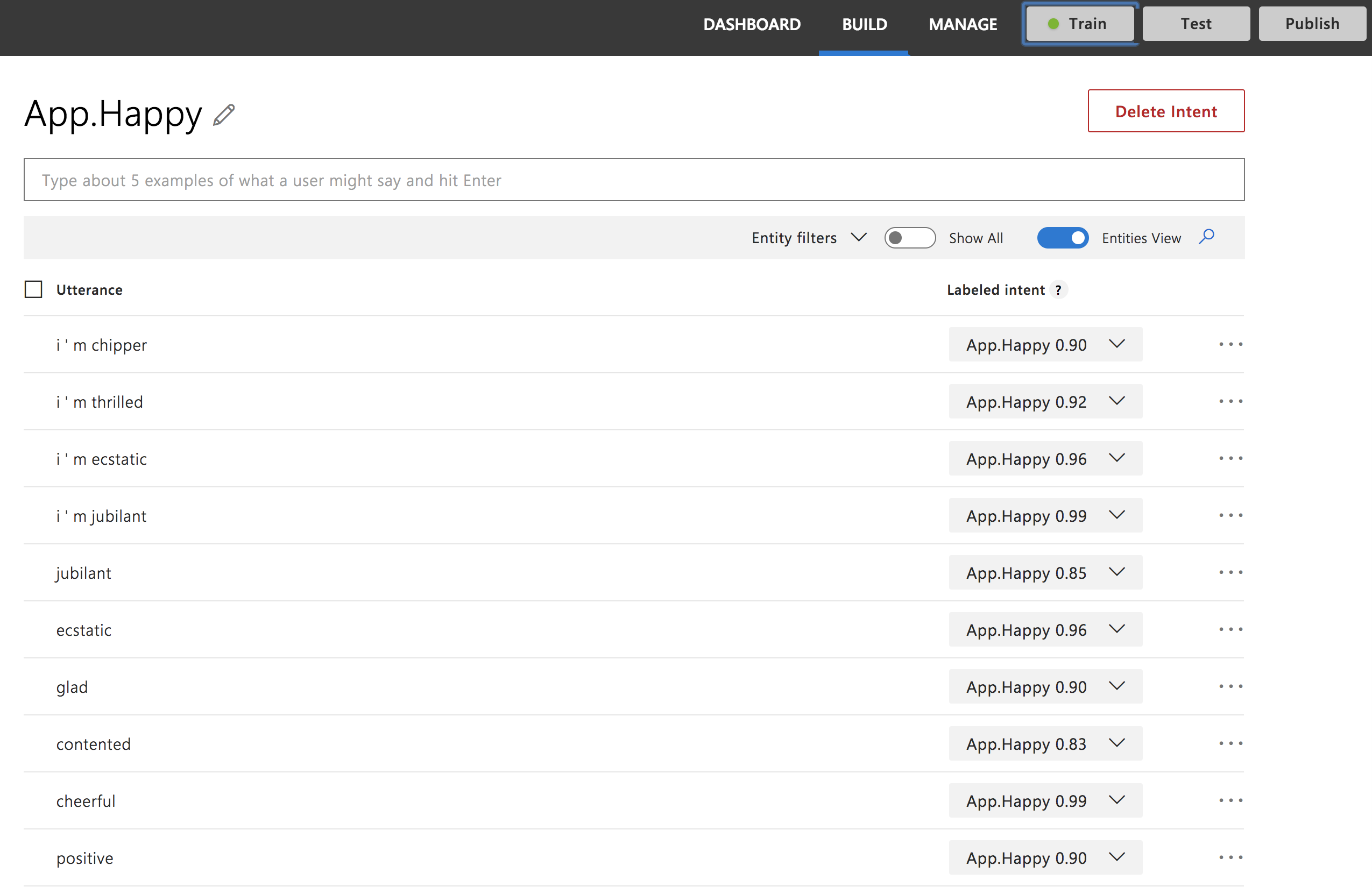

If we dive into each particular intent, we see how the model is trained. We have some similar phrases that mean roughly the same thing:

You can see we have a lot of synonyms for training, but we also have the “Train” button up top for when we’re ready to start training the model. We click that button, get a success notification, and then we’re ready to publish.

Setting up Vue

We’ll create a pretty standard Vue.js application via the Vue CLI. First, we run:

    vue create three-vue-pattern
    # then select Manually...

    Vue CLI v3.0.0
    ? Please pick a preset:
      default (babel, eslint)
    ❯ Manually select features

    # Then select the PWA feature and the other ones with the spacebar
    ? Please pick a preset: Manually select features
    ? Check the features needed for your project:
      ◉ Babel
      ◯ TypeScript
      ◯ Progressive Web App (PWA) Support
      ◯ Router
      ◉ Vuex
      ◉ CSS Pre-processors
      ◉ Linter / Formatter
      ◯ Unit Testing
      ◯ E2E Testing

    ? Pick a linter / formatter config:
      ESLint with error prevention only
      ESLint + Airbnb config
    ❯ ESLint + Standard config
      ESLint + Prettier

    ? Pick additional lint features: (Press <space> to select, a to toggle all, i to invert selection)
    ❯ ◉ Lint on save
      ◯ Lint and fix on commit

    Successfully created project three-vue-pattern.
    Get started with the following commands:

    $ cd three-vue-pattern
    $ yarn serve

This will spin up a server for us and provide a typical Vue welcome screen. We’ll also add some dependencies to our application: three.js, sine-waves, and axios. three.js will help us create the WebGL visualization. sine-waves gives us a nice canvas abstraction for the loader. axios gives us a really nice HTTP client so we can make calls to LUIS for analysis.

    yarn add three sine-waves axios

Setting up our Vuex store

Now that we have a working model, let’s go get it with axios and bring it into our Vuex store. Then we can disseminate the information to all of the different components.

In state, we’ll store what we’re going to need:

    state: {
      intent: 'None',
      intensity: 'None',
      score: 0,
      uiState: 'idle',
      zoom: 3,
      counter: 0,
    },

intent and intensity will store the App and Intensity intents, respectively. The score will store our confidence (a score from 0 to 1 measuring how confident the model is in its top-ranked intent).

For uiState, we have three different states:

- idle: waiting for the user input
- listening: hearing the user input
- fetching: getting user data from the API
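These three states behave like a small state machine. Here is a minimal sketch of how the transitions might look. The event names (startListening, utteranceCaptured, responseReceived) are hypothetical and are not taken from the demo:

```javascript
// Hypothetical transition table for uiState; the event names are illustrative only.
const transitions = {
  idle: { startListening: 'listening' },
  listening: { utteranceCaptured: 'fetching' },
  fetching: { responseReceived: 'idle' }
}

// Returns the next state, or the current one if the event is not valid in that state.
function nextUiState(current, event) {
  const allowed = transitions[current] || {}
  return allowed[event] || current
}
```

Keeping the transitions in one table makes it harder to end up in an impossible combination, such as fetching before anything has been heard.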

Both zoom and counter are what we’ll use to update the data visualization.

Now, in actions, we’ll set the uiState (in a mutation) to fetching, and we’ll make a call to the API with axios using the generated keys we received when setting up LUIS.

    getUnderstanding({ commit }, utterance) {
      commit('setUiState', 'fetching')
      const url = `https://westus.api.cognitive.microsoft.com/luis/v2.0/apps/4aba2274-c5df-4b0d-8ff7-57658254d042`

      axios({
        method: 'get',
        url,
        params: {
          verbose: true,
          timezoneOffset: 0,
          q: utterance
        },
        headers: {
          'Content-Type': 'application/json',
          'Ocp-Apim-Subscription-Key': 'XXXXXXXXXXXXXXXXXXX'
        }
      })

Then, once we’ve done that, we can get the top-ranked scoring intent and store it in our state.

We also need to create some mutations we can use to change the state. We’ll use these in our actions. (There has been discussion of streamlining this in a future version of Vuex by removing mutations.)

    newIntent: (state, { intent, score }) => {
      if (intent.includes('Intensity')) {
        state.intensity = intent
        if (intent.includes('More')) {
          state.counter++
        } else if (intent.includes('Less')) {
          state.counter--
        }
      } else {
        state.intent = intent
      }
      state.score = score
    },
    setUiState: (state, status) => {
      state.uiState = status
    },
    setIntent: (state, status) => {
      state.intent = status
    },

This is all pretty straightforward. We’re passing in the state so that we can update it for each occurrence. The exception is Intensity, which moves the counter up or down accordingly. We’re going to use that counter in the next section to update the visualization.
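Because mutations are plain functions, we can try the counter logic outside of Vuex. The sketch below copies newIntent into a standalone function and feeds it a few payloads. The intent names Intensity.More and Intensity.Less and the scores are assumptions made up for illustration:

```javascript
// Same logic as the newIntent mutation, written as a standalone function.
function newIntent(state, { intent, score }) {
  if (intent.includes('Intensity')) {
    state.intensity = intent
    if (intent.includes('More')) {
      state.counter++
    } else if (intent.includes('Less')) {
      state.counter--
    }
  } else {
    state.intent = intent
  }
  state.score = score
}

// Illustrative payloads only; the intent names and scores are assumed.
const state = { intent: 'None', intensity: 'None', score: 0, counter: 0 }
newIntent(state, { intent: 'Excited', score: 0.92 })
newIntent(state, { intent: 'Intensity.More', score: 0.88 })
newIntent(state, { intent: 'Intensity.More', score: 0.9 })
newIntent(state, { intent: 'Intensity.Less', score: 0.75 })

console.log(state)
```

The mood is set once, and each later Intensity intent only nudges the counter, leaving the mood in place.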

    .then(({ data }) => {
      console.log('axios result', data)
      if (altMaps.hasOwnProperty(data.query)) {
        commit('newIntent', {
          intent: altMaps[data.query],
          score: 1
        })
      } else {
        commit('newIntent', data.topScoringIntent)
      }
      commit('setUiState', 'idle')
      commit('setZoom')
    })
    .catch(err => {
      console.error('axios error', err)
    })

In this action, we’ll commit the mutations we just went over or log an error if something goes wrong.
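The altMaps object used in that handler isn’t shown here. A minimal sketch, assuming it is a plain object that maps exact phrases straight to intents so they can skip the model’s ranking. The entries below are hypothetical; only the query and topScoringIntent fields come from the handler above:

```javascript
// Hypothetical overrides; the demo's real altMaps contents are not shown in the article.
const altMaps = {
  more: 'Intensity.More',
  less: 'Intensity.Less'
}

// Picks the payload for the newIntent mutation from a LUIS-style response.
function resolveIntent(data, overrides) {
  // Call hasOwnProperty from the prototype so inherited keys like 'toString' never match.
  if (Object.prototype.hasOwnProperty.call(overrides, data.query)) {
    return { intent: overrides[data.query], score: 1 }
  }
  return data.topScoringIntent
}
```

Inside the action, this would reduce the branch to a single commit('newIntent', resolveIntent(data, altMaps)).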

The way that the logic works, the user will do the initial recording to say how they’re feeling. They’ll hit a button to kick it all off. The visualization will appear and, at that point, the app will continuously listen for the user to say less or more to control the returned visualization. Let’s set up the rest of the app.
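How the speech gets captured isn’t covered above. One option in the browser is the Web Speech API, and what follows is only a sketch under that assumption. createListener is a hypothetical helper, and the recognition constructor is passed in so the wiring can be tried without a microphone:

```javascript
// Hypothetical helper: wires a SpeechRecognition-like constructor to a callback.
// In a browser, pass window.SpeechRecognition || window.webkitSpeechRecognition.
function createListener(Recognition, onUtterance) {
  const recognition = new Recognition()
  recognition.lang = 'en-US'
  recognition.continuous = true // keep listening after each phrase
  recognition.interimResults = false // only report finalized phrases

  recognition.onresult = event => {
    // resultIndex points at the newest result; [0] is its top alternative
    const transcript = event.results[event.resultIndex][0].transcript.trim()
    if (transcript) onUtterance(transcript)
  }

  return recognition
}
```

In the app, the callback could dispatch the getUnderstanding action with the transcript, and calling start() on the returned object would begin listening.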

Setting up the app

In App.vue, we’ll show two different components for the middle of the page depending on whether or not we’ve already specified our mood.

    <app-recordintent v-if="intent === 'None'" />
    <app-recordintensity v-if="intent !== 'None'" :emotion="intent" />

Both of these will show information for the viewer as well as a SineWaves component while the UI is in a listening state.

The base of the application is where the visualization will be displayed. It will show with different props depending on the mood. Here are two examples:

    <app-base
      v-if="intent === 'Excited'"
      :t-config.a="1"
      :t-config.b="200"
    />

    <app-base
      v-if="intent === 'Nervous'"
      :t-config.a="1"
      :color="0xff0000"
      :wireframe="true"
      :rainbow="false"
      :emissive="true"
    />

Setting up the data visualization

I wanted to work with kaleidoscope-like imagery for the visualization and, after some searching, found this repo. The way it works is that a shape turns in space and this will break the image apart and show pieces of it like a kaleidoscope. Now, that might sound awesome because (yay!) the work is done, right?

Unfortunately not.

There were a number of major changes that needed to be made to make this work, and it ended up being a massive undertaking, even if the final visual expression appears similar to the original.

- Due to the fact that we would need to tear down the visualization if we decided to change it, I had to convert the existing code to use bufferArrays, which are more performant for this purpose.
- The original code was one large chunk, so I broke up some of the functions into smaller methods on the component to make it easier to read and maintain.
- Because we want to update things on the fly, I had to store some of the items as data in the component, and eventually as props that it would receive from the parent. I also included some nice defaults (excited is what all of the defaults look like).
- We use the counter from the Vuex state to update the distance of the camera’s placement relative to the object so that we can see less or more of it and thus it becomes more and less complex.
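The last point, turning the counter into a camera distance, can be sketched as a small pure function. The setZoom mutation from the demo isn’t shown here, so the step size, the bounds, and the direction of the change below are all assumptions. Only the starting zoom of 3 comes from the store:

```javascript
// Hypothetical mapping from the counter to a camera distance.
// BASE_ZOOM matches the initial zoom in state; the other values are assumed.
const BASE_ZOOM = 3
const STEP = 0.5
const MIN_ZOOM = 1
const MAX_ZOOM = 10

function zoomFromCounter(counter) {
  const raw = BASE_ZOOM + counter * STEP
  // Clamp so repeated commands cannot push the camera through or far past the shape.
  return Math.min(MAX_ZOOM, Math.max(MIN_ZOOM, raw))
}
```

A setZoom mutation could then simply assign zoomFromCounter(state.counter) to state.zoom.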

In order to change up the way that it looks according to the configurations, we’ll create some props:

    props: {
      numAxes: {
        type: Number,
        default: 12,
        required: false
      },
      ...
      tConfig: {
        default() {
          return {
            a: 2,
            b: 3,
            c: 100,
            d: 3
          }
        },
        required: false
      }
    },

We’ll use these when we create the shapes:

    createShapes() {
      this.bufferCamera.position.z = this.shapeZoom

      if (this.torusKnot !== null) {
        this.torusKnot.material.dispose()
        this.torusKnot.geometry.dispose()
        this.bufferScene.remove(this.torusKnot)
      }

      var shape = new THREE.TorusKnotGeometry(
          this.tConfig.a,
          this.tConfig.b,
          this.tConfig.c,
          this.tConfig.d
        ),
        material
      ...
      this.torusKnot = new THREE.Mesh(shape, material)
      this.torusKnot.material.needsUpdate = true
      this.bufferScene.add(this.torusKnot)
    },

As we mentioned before, this is now split out into its own method. We’ll also create another method that kicks off the animation, which will also restart whenever it updates. The animation makes use of requestAnimationFrame:

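    animate() {
      // keep the request id so the loop can be cancelled later
      this.storeRAF = requestAnimationFrame(this.animate)

      this.bufferScene.rotation.x += 0.01
      this.bufferScene.rotation.y += 0.02

      // first pass: draw the buffer scene into the offscreen render target
      // (three-argument form from older three.js releases)
      this.renderer.render(
        this.bufferScene,
        this.bufferCamera,
        this.bufferTexture
      )
      // second pass: draw the main scene to the canvas
      this.renderer.render(this.scene, this.camera)
    },

We’ll create a computed property called shapeZoom that will return the zoom from the store. If you recall, this will be updated as the user’s voice changes the intensity.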

    computed: {
      shapeZoom() {
        return this.$store.state.zoom
      }
    },

We can then use a watcher to see if the zoom level changes and cancel the animation, recreate the shapes, and restart the animation.

    watch: {
      shapeZoom() {
        this.createShapes()
        cancelAnimationFrame(this.storeRAF)
        this.animate()
      }
    },

In data, we’re also storing some things we’ll need for instantiating the three.js scene, most notably making sure that the camera is exactly centered.

    data() {
      return {
        bufferScene: new THREE.Scene(),
        bufferCamera: new THREE.PerspectiveCamera(75, 800 / 800, 0.1, 1000),
        bufferTexture: new THREE.WebGLRenderTarget(800, 800, {
          minFilter: THREE.LinearMipMapLinearFilter,
          magFilter: THREE.LinearFilter,
          antialias: true
        }),
        camera: new THREE.OrthographicCamera(
          window.innerWidth / -2,
          window.innerWidth / 2,
          window.innerHeight / 2,
          window.innerHeight / -2,
          0.1,
          1000
        ),
        ...

There’s more to this demo, if you’d like to explore the repo or set it up yourself with your own parameters. The init method does what you think it might: it initializes the whole visualization. I’ve commented a lot of the key parts if you’re peeping at the source code. There’s also another method that updates the geometry, called (you guessed it) updateGeometry. You may notice a lot of vars in there as well. That’s because it’s common to reuse variables in this kind of visualization. We kick everything off by calling this.init() in the mounted() lifecycle hook.
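On the camera centering in data: the orthographic camera stays centered because its left/right and top/bottom bounds are symmetric around zero. A small sketch of that arithmetic follows. centeredFrustum is a hypothetical helper name, and the 1280 by 720 size is only an example:

```javascript
// Computes symmetric bounds so the origin sits in the middle of the view.
function centeredFrustum(width, height) {
  return {
    left: width / -2,
    right: width / 2,
    top: height / 2,
    bottom: height / -2
  }
}

// Example viewport size; in the component this would be window.innerWidth and window.innerHeight.
const f = centeredFrustum(1280, 720)
// These values would feed: new THREE.OrthographicCamera(f.left, f.right, f.top, f.bottom, 0.1, 1000)
console.log(f)
```

If the window is resized, the same function could be called again to refresh the camera bounds before updating its projection matrix.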

- Again, here is the repo if you’d like to play with the code

- You can make your own model by getting a free Azure account

- You’ll also want to check out LUIS (Cognitive Services)

It’s pretty fun to see how far you can get creating things for the web that don’t necessarily need any hand movement to control. It opens up a lot of opportunities!

The post Voice-Controlled Web Visualizations with Vue.js and Machine Learning appeared first on CSS-Tricks.