Kerning in Typography: Everything You Need to Know

Many factors determine whether a message is transmitted clearly. Depending on the type of communication, problems such as stuttering, mumbling, poor grammar, incorrect punctuation, or badly aligned letters separate an unclear message from a clear one. In oral communication, diction, proper intonation, a calm tempo, and the intensity of the voice are all skills that anyone who wants to be a good communicator has to master. In written communication, readable calligraphy and the correct alignment of letters, words, sentences, and paragraphs make any text accessible to the reader.

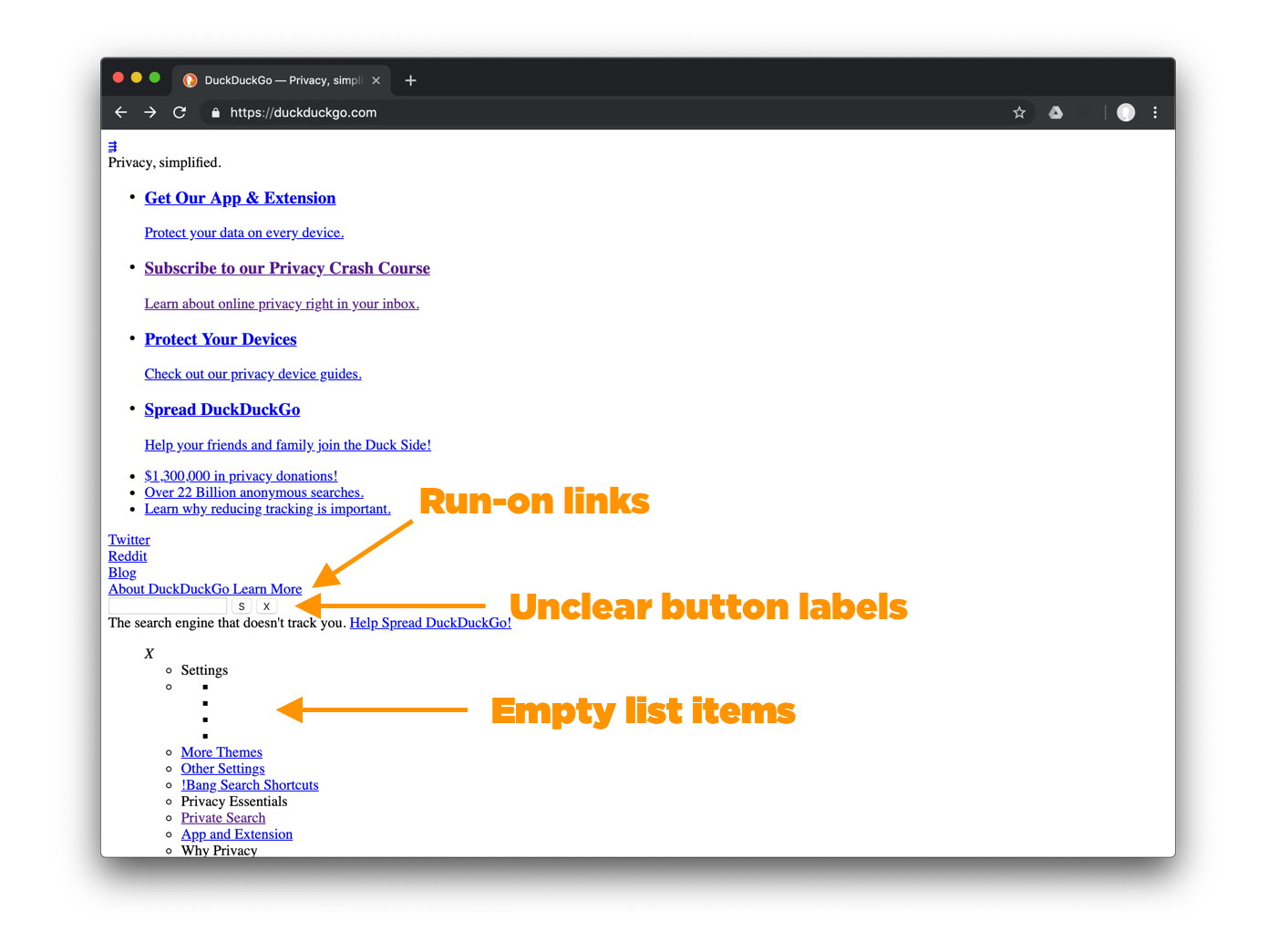

Why does all of this matter? Designers bear a major responsibility for making written communication possible without obstacles. There are a few notions any designer should be familiar with and able to work with: kerning, leading, and tracking.

What is Kerning?

Kerning: Definition

Kerning is the stylistic process that made you read the first word of this sentence as “KERNING” and not “KEMING”. When an “r” and an “n” sit too close together, they blur into what looks like an “m”. You’ve probably already guessed it: kerning is the act of adjusting the space between two letters to avoid an irregular flow within words and to improve legibility.
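To make the definition concrete, here is a minimal sketch of how a pair-based kerning adjustment could shift letter positions on a line. The widths, the kerning values, and the `pen_positions` helper are all hypothetical and chosen for illustration; they do not come from any real font or layout library.

```python
# Hypothetical advance widths and kerning pairs, in arbitrary font units.
# These numbers are illustrative assumptions, not values from a real font.
ADVANCE = {"A": 600, "V": 600, "T": 550, "o": 500}
KERNING = {("A", "V"): -80, ("V", "A"): -80, ("T", "o"): -60}


def pen_positions(text, kerning=True):
    """Return the x position at which each letter of `text` starts."""
    positions = []
    x = 0
    prev = None
    for ch in text:
        # Kerning adjusts the space between this letter and the previous one.
        if kerning and prev is not None:
            x += KERNING.get((prev, ch), 0)
        positions.append(x)
        x += ADVANCE[ch]
        prev = ch
    return positions


print(pen_positions("AVA", kerning=False))  # [0, 600, 1200]
print(pen_positions("AVA"))                 # [0, 520, 1040]
```

With kerning enabled, each letter in "AVA" moves closer to its neighbor, which is the tightening a designer applies by eye to pairs whose shapes leave too much white space between them.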

Kerning: Meaning

Back in the good old days, people used to use
