Make Jamstack Slow? Challenge Accepted.

“Jamstack is slowwwww.” That’s not something you hear often, right? Especially when one of the main selling points of Jamstack is performance. But yeah, it’s true that even a Jamstack site can suffer hits to performance just like any other site.

Don’t think that by choosing Jamstack you no longer have to think about performance. Jamstack can be fast — really fast — but you have to make the right choices. Let’s see if we can spot some of the poor decisions that can lead to a “slow” Jamstack site.

To do that, we’re going to build a really slow Gatsby site. Seems strange, right? Why would we intentionally do that!? Because by building it ourselves, we can gain a better understanding of what affects Jamstack performance and how to avoid bottlenecks.

We will use continuous performance testing and Google Lighthouse to audit every change. This will highlight the importance of testing every code change. Our site will start with a top Lighthouse performance score of 100. From there, we will make changes until it scores a mere 17. It is easier to do than you might think!
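One way to act on that idea is to record the score after each deploy and flag any change that costs more than a few points. The sketch below is a minimal illustration in plain JavaScript. The `findRegressions` helper and the 5-point threshold are assumptions made for this example, not part of Lighthouse, and the scores are the ones reported in this article.

```javascript
// Hypothetical helper: compare consecutive Lighthouse scores and report
// every change whose drop is larger than `maxDrop` points.
function findRegressions(history, maxDrop) {
  const regressions = [];
  for (let i = 1; i < history.length; i++) {
    const drop = history[i - 1].score - history[i].score;
    if (drop > maxDrop) {
      regressions.push({ change: history[i].change, drop });
    }
  }
  return regressions;
}

// Scores taken from the audits in this article.
const history = [
  { change: "default Gatsby site", score: 100 },
  { change: "SemanticUI and jQuery in the head", score: 66 },
  { change: "analytics and Facebook tags", score: 51 },
  { change: "100 remote images", score: 17 },
];

// The 5-point threshold is an arbitrary choice for illustration.
const regressions = findRegressions(history, 5);
console.log(regressions);
```

A check like this could run after every deploy and fail the build when the list is not empty, which makes each costly change visible at the moment it is introduced.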

Let’s get started!

Creating our Jamstack site

We are going to use Gatsby for our test site. Let’s start by installing the Gatsby CLI:

npm install -g gatsby-cli

We can spin up a new Gatsby site using this command:

gatsby new slow-jamstack

Let’s cd into the new slow-jamstack project directory and start the development server:

cd slow-jamstack

gatsby develop

To add Lighthouse to the mix, we need a Gatsby production build. We can use Vercel to host the site, giving Lighthouse a way to run its tests. That requires installing the Vercel command-line tool and logging in:

npm install -g vercel

vercel

This will create the site in Vercel and put it on a live server. Here’s the example I’ve already set up that we’ll use for testing.

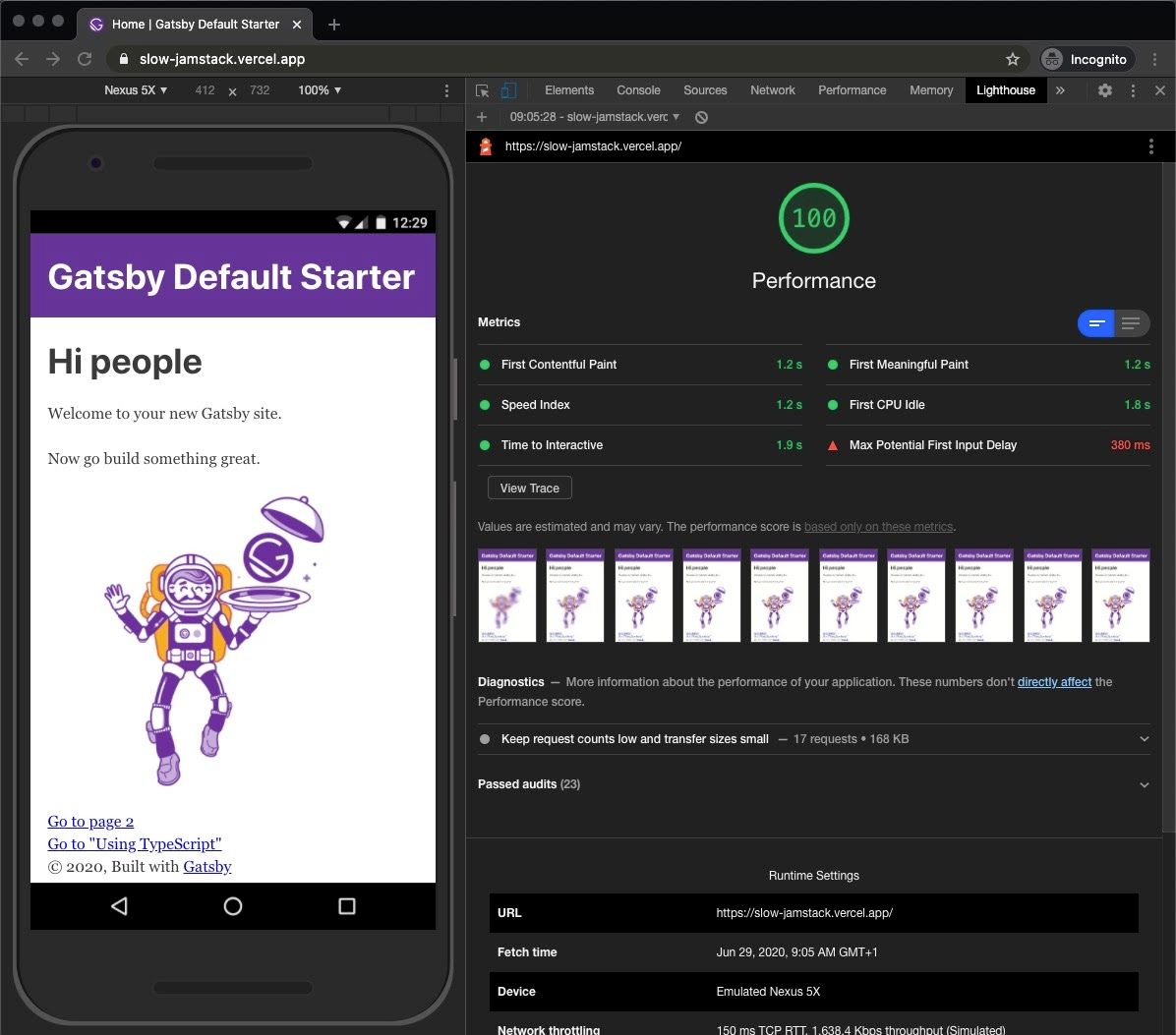

We need to use Chrome so that we can open Lighthouse directly from DevTools and run a performance audit. No surprise here, the default Gatsby site is fast:

A score of 100 is the fastest you can get. Let’s see what we can do to slow it down.

Slow CSS

CSS frameworks are great. They can do a lot of heavy lifting for you. When deciding on a CSS framework, use one that is modular or employs CSS-in-JS so that the only CSS loaded is the CSS you need.

But let’s make the bad decision to reach for an entire framework just to style a button component. In fact, let’s even grab the heaviest framework while we’re at it. These are the sizes of some popular frameworks:

| Framework | CSS size (gzipped) |
|---|---|
| Bootstrap | 68 KB (12 KB) |
| Bulma | 73 KB (10 KB) |
| Foundation | 30 KB (7 KB) |
| Milligram | 10 KB (3 KB) |
| Pure | 17 KB (4 KB) |
| SemanticUI | 146 KB (20 KB) |
| UIKit | 33 KB (6 KB) |

Alright, SemanticUI it is! The “right” way to load this framework would be to use a Sass or Less package, which would allow us to choose the parts of the framework we need. The wrong way would be to load all the CSS and JavaScript files in the `<head>` of the HTML. That’s what we’ll do with the full SemanticUI stylesheet. Plus, we’re going to link up jQuery because it’s a SemanticUI dependency.

We want these files to load in the head so let’s jump into the html.js file. This is not available in the src directory until we run a command to copy over the default from the cache:

cp .cache/default-html.js src/html.js

That gives us html.js in the src directory. Open it up and add the required stylesheet and scripts:

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/semantic-ui@2.4.2/dist/semantic.css"></link>

<script src="https://code.jquery.com/jquery-3.1.1.js"></script>

<script src="https://cdn.jsdelivr.net/npm/semantic-ui@2.4.2/dist/semantic.js"></script>

Now let’s push the changes straight to our production URL:

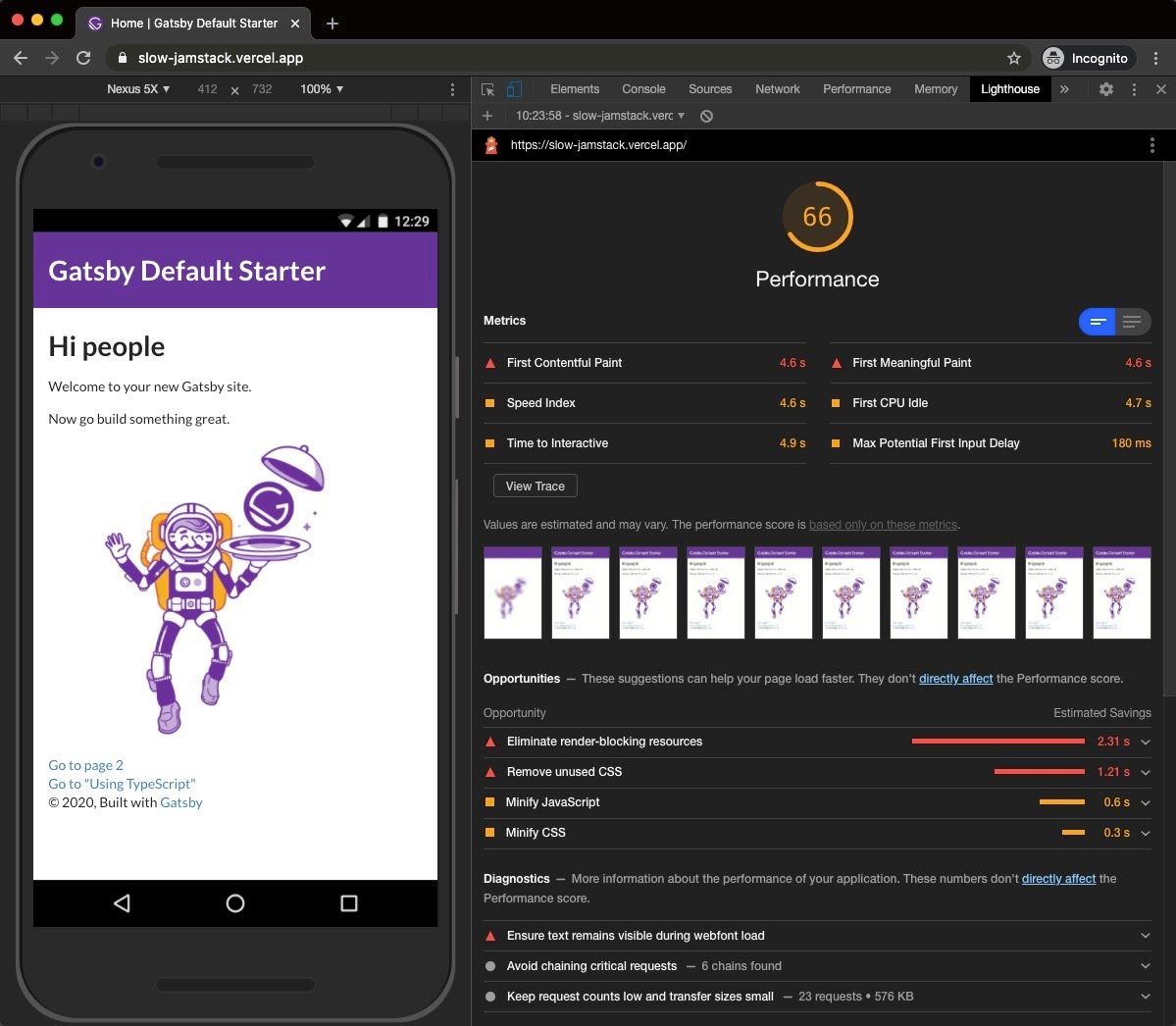

vercel --prod

OK, let’s view the audit…

We have dragged the site’s score down to 66. Remember that we are not even using this framework at the moment. All we have done is load the files in the head, and that cut the performance score by about a third. Our Time to Interactive (TTI) jumped from a quick 1.9 seconds to a noticeable 4.9 seconds. And look at the possible savings we could get by following Lighthouse’s recommendations.

Slow marketing dependencies

Next, we are going to look at marketing tags and how these third-party scripts can affect performance. Let’s pretend we work with a marketing department and they want to start measuring traffic with Google Analytics. They also have a Facebook campaign and want to track it as well.

They give us the details of the scripts that we need to add to get everything working. First, for Google Analytics:

<script async src="https://www.googletagmanager.com/gtag/js?id=UA-4369823-4"></script>

<script

dangerouslySetInnerHTML={{ __html: `

window.dataLayer = window.dataLayer || [];

function gtag(){dataLayer.push(arguments);}

gtag('js', new Date());

gtag('config', 'UA-4369823-4');

`}}

/>

Then for the Facebook campaign:

<script

dangerouslySetInnerHTML={{ __html: `

!function(f,b,e,v,n,t,s)

{if(f.fbq)return;n=f.fbq=function(){n.callMethod?

n.callMethod.apply(n,arguments):n.queue.push(arguments)};

if(!f._fbq)f._fbq=n;n.push=n;n.loaded=!0;n.version='2.0';

n.queue=[];t=b.createElement(e);t.async=!0;

t.src=v;s=b.getElementsByTagName(e)[0];

s.parentNode.insertBefore(t,s)}(window, document,'script',

'https://connect.facebook.net/en_US/fbevents.js');

fbq('init', '3180830148641968');

fbq('track', 'PageView');

`}}

/>

<noscript><img height="1" width="1" src="https://www.facebook.com/tr?id=3180830148641968&ev=PageView&noscript=1"/></noscript>

We’ll place these scripts inside html.js, again in the `<head>` section, before the closing `</head>` tag.

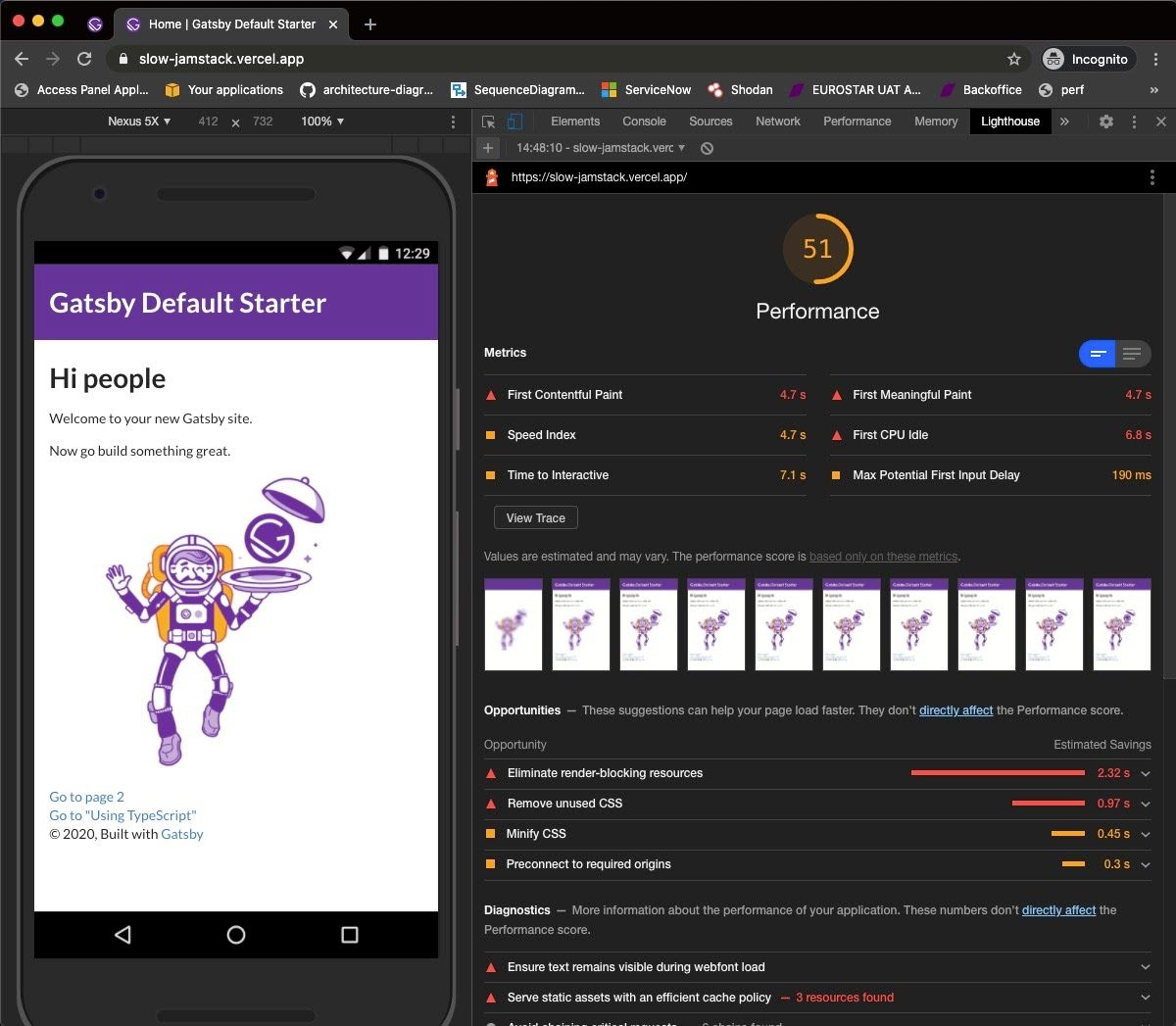

Just like before, let’s push to Vercel and re-run Lighthouse:

vercel --prod

Wow, the site is already down to 51 and all we’ve done is tack on one framework and a couple of measly scripts. Together, they’ve reduced the score by a whopping 49 points, nearly half of where we started.
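For contrast, a common way to soften the cost of tags like these is to hold off on requesting them until the page has finished loading. The sketch below is only an illustration of that idea and not what this article's test site does. `loadScriptAfterLoad` is a hypothetical helper, and the `win` and `doc` parameters are an assumption added so the function can be exercised outside a browser.

```javascript
// Hypothetical helper: inject a third-party script only after the page has
// finished loading. `win` and `doc` default to the browser globals.
function loadScriptAfterLoad(src, win = window, doc = document) {
  const inject = () => {
    const script = doc.createElement("script");
    script.src = src;
    script.async = true;
    doc.head.appendChild(script);
  };

  if (doc.readyState === "complete") {
    // The load event has already fired, so inject right away.
    inject();
  } else {
    // Otherwise wait for the load event and inject exactly once.
    win.addEventListener("load", inject, { once: true });
  }
}

// Example call from client-side code (URL taken from the snippet above):
// loadScriptAfterLoad("https://www.googletagmanager.com/gtag/js?id=UA-4369823-4");
```

The small inline stubs that set up `dataLayer` and `fbq` would stay where they are, since they only queue calls until the real script arrives. Whether this recovers much of the score would have to be confirmed with another Lighthouse run.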

Slow images

We haven’t added any images to the site yet but we know we absolutely would in a real-life scenario. We are going to add 100 images to the page. Sure, 100 is a lot for a single page but, then again, we know that images are often the biggest culprits of bloated web pages so we might as well let them shine.

We’ll make things a little worse by hotlinking the images directly from https://placeimg.com instead of serving them from our own server.

Let’s crack open index.js and drop this code in, which will loop through 100 instances of images:

const IndexPage = () => {

const items = []

for (let i = 0; i < 100; i++) {

const url = `http://placeimg.com/640/360/any?=${i}`

items.push(<img key={i} alt={i} src={url} />)

}

return (

<Layout>

{/* ... */}

{items}

{/* ... */}

</Layout>

)

}

The 100 images are all different and will all be requested as the page loads, competing for bandwidth and holding up the page. OK, let’s push to Vercel and see what’s up.

vercel --prod

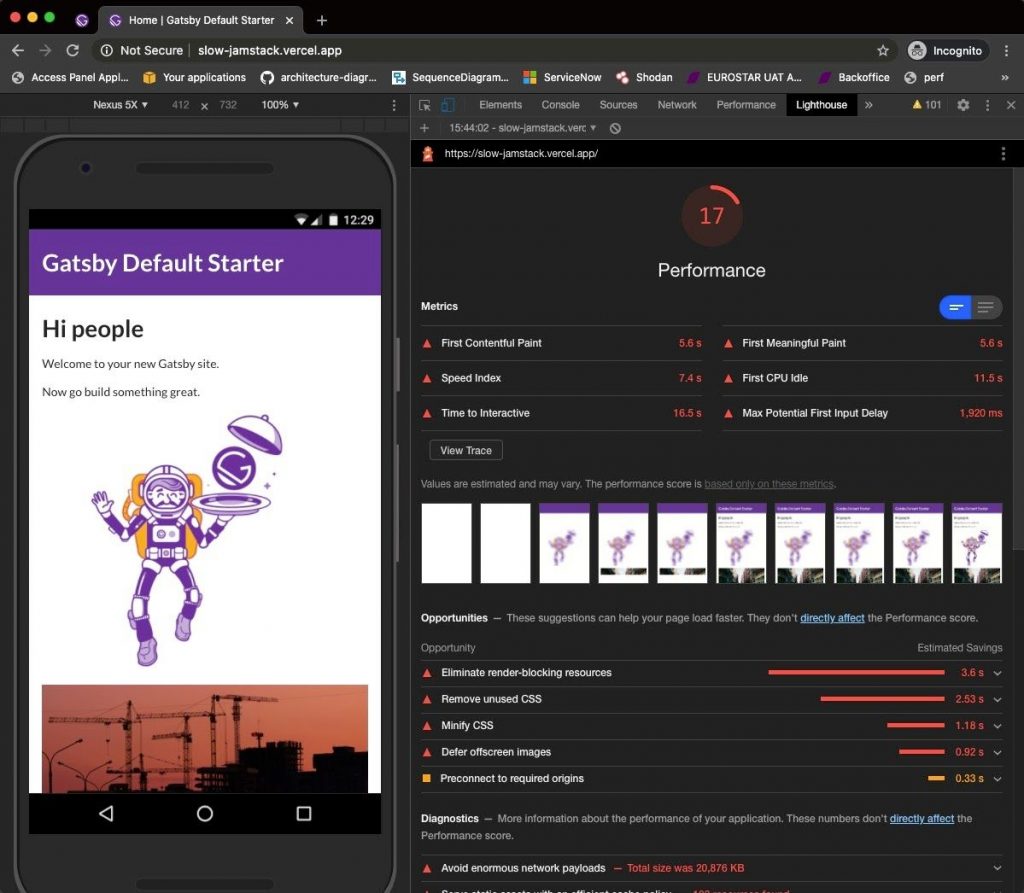

OK, we now have a very slow Jamstack site. The images are holding up the page and the TTI is now a whopping 16.5 seconds. We have taken a very fast Jamstack site and dropped it to a Lighthouse score of 17, a reduction of 83 points!
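For comparison, a gentler version of that loop might let the browser postpone most of those requests. The sketch below builds the image props with the native `loading` attribute and explicit dimensions. `buildImageProps` and its `eagerCount` parameter are hypothetical names chosen for this example, and the effect on the score is not something measured in this article.

```javascript
// Hypothetical helper: build the props for each placeholder image.
function buildImageProps(count, width = 640, height = 360, eagerCount = 2) {
  const images = [];
  for (let i = 0; i < count; i++) {
    images.push({
      key: i,
      alt: `Placeholder image ${i + 1}`,
      src: `https://placeimg.com/${width}/${height}/any?=${i}`,
      // Explicit dimensions let the browser reserve space before the file arrives.
      width,
      height,
      // Only the first few images load eagerly; the rest are left to the
      // browser to fetch when they get close to the viewport.
      loading: i < eagerCount ? "eager" : "lazy",
    });
  }
  return images;
}

const imageProps = buildImageProps(100);
console.log(imageProps[0].loading, imageProps[99].loading);
```

Inside index.js, the array could be rendered with something like `imageProps.map(({ key, ...rest }) => <img key={key} {...rest} />)`.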

Now, you may be thinking that you would never make these poor decisions when building an app. But that misses the point. Every choice we make has an impact on performance. It’s a balance, and performance does not come free. Even on Jamstack sites.

Making Jamstack fast again

You have seen that we cannot ignore client-side performance when using Jamstack.

So why do people say that Jamstack is fast? Well, the main advantage of Jamstack (or of static site generators in general) is caching. Static files are cached on the edge, reducing Time to First Byte (TTFB).

This is always going to be faster than a request that has to travel to a single origin server and wait for the page to be generated. This is a great feature of Jamstack and gives you a fighting chance to create a page that can hit 100 in Lighthouse. (But, hey, as a side note, remember that great scores aren’t always indicative of an actual user experience.)
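To see what TTFB looks like for a given page, the browser's Navigation Timing data can be read from the console. The sketch below is a minimal illustration. `timeToFirstByte` is a hypothetical helper, and the sample numbers are made up rather than measured on the test site.

```javascript
// Hypothetical helper: time to first byte in milliseconds, measured from the
// start of the navigation to the first byte of the response.
function timeToFirstByte(entry) {
  return entry.responseStart - entry.startTime;
}

// In a browser, the entry would come from:
//   performance.getEntriesByType("navigation")[0]
// The values below are invented for illustration only.
const sampleEntry = { startTime: 0, responseStart: 42.5 };
console.log(`TTFB: ${timeToFirstByte(sampleEntry)} ms`);
```

Comparing this number for an edge-cached static page against a server-rendered one is a quick way to check the caching advantage described above.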

See, I told you we could make Jamstack slow! There are also many other things that can slow it down, but hopefully this drives home the point.

While we’re talking about performance, here are a few of my favorite performance articles here on CSS-Tricks:

- The Complete Guide to Lazy Loading Images

- The Differing Perspectives on CSS-in-JS

- Third-Party Scripts

The post Make Jamstack Slow? Challenge Accepted. appeared first on CSS-Tricks.
