Rendering the WordPress philosophy in GraphQL

WordPress is a CMS that’s coded in PHP. But, even though PHP is the foundation, WordPress also holds a philosophy where user needs are prioritized over developer convenience. That philosophy establishes an implicit contract between the developers building WordPress themes and plugins, and the user managing a WordPress site.

GraphQL is an interface that retrieves data from the server, and can also submit data to it. A GraphQL server can be opinionated in how it implements the GraphQL spec, prioritizing certain behaviors over others.

Can the WordPress philosophy that depends on server-side architecture co-exist with a JavaScript-based query language that passes data via an API?

Let’s pick that question apart, and explain how the GraphQL API WordPress plugin I authored establishes a bridge between the two architectures.

You may be aware of WPGraphQL. The plugin GraphQL API for WordPress (or “GraphQL API” from now on) is a different GraphQL server for WordPress, with different features.

Reconciling the WordPress philosophy within the GraphQL service

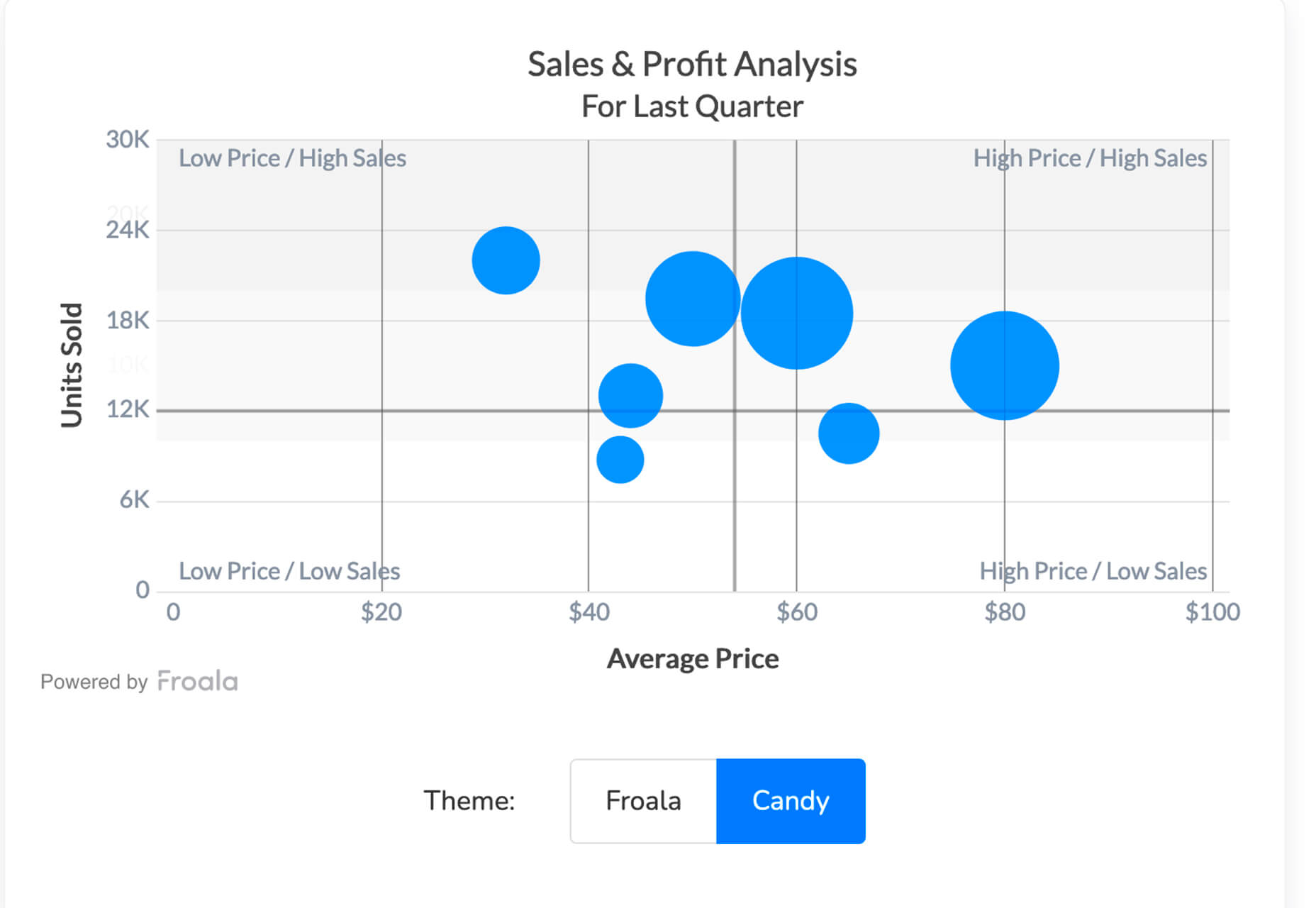

This table contains the expected behavior of a WordPress application or plugin, and how it can be interpreted by a GraphQL service running on WordPress:

| Category | WordPress app expected behavior | Interpretation for GraphQL service running on WordPress |
|---|---|---|
| Accessing data | Democratizing publishing: Any user (irrespective of having technical skills or not) must be able to use the software | Democratizing data access and publishing: Any user (irrespective of having technical skills or not) must be able to visualize and modify the GraphQL schema, and execute a GraphQL query |
| Extensibility | The application must be extensible through plugins | The GraphQL schema must be extensible through plugins |
| Dynamic behavior | The behavior of the application can be modified through hooks | The results from resolving a query can be modified through directives |
| Localization | The application must be localized, to be used by people from any region, speaking any language | The GraphQL schema must be localized, to be used by people from any region, speaking any language |
| User interfaces | Installing and operating functionality must be done through a user interface, resorting to code as little as possible | Adding new entities (types, fields, directives) to the GraphQL schema, configuring them, executing queries, and defining permissions to access the service must be done through a user interface, resorting to code as little as possible |
| Access control | Access to functionalities can be granted through user roles and permissions | Access to the GraphQL schema can be granted through user roles and permissions |
| Preventing conflicts | Developers do not know in advance who will use their plugins, or what configuration/environment those sites will run, meaning the plugin must be prepared for conflicts (such as having two plugins define the SMTP service), and attempt to prevent them, as much as possible | Developers do not know in advance who will access and modify the GraphQL schema, or what configuration/environment those sites will run, meaning the plugin must be prepared for conflicts (such as having two plugins with the same name for a type in the GraphQL schema), and attempt to prevent them, as much as possible |

Let’s see how the GraphQL API carries out these ideas.

Accessing data

Similar to REST, a GraphQL service must be coded through PHP functions. Who will do this, and how?

Altering the GraphQL schema through code

The GraphQL schema includes types, fields and directives. These are dealt with through resolvers, which are pieces of PHP code. Who should create these resolvers?

The best strategy is for the GraphQL API to already satisfy the basic GraphQL schema with all known entities in WordPress (including posts, users, comments, categories, and tags), and make it simple to introduce new resolvers, for instance for Custom Post Types (CPTs).

This is how the user entity is already provided by the plugin. The User type is provided through this code:

```php
class UserTypeResolver extends AbstractTypeResolver
{
    // Name of the type in the GraphQL schema
    public function getTypeName(): string
    {
        return 'User';
    }

    // Localized description shown in the schema
    public function getSchemaTypeDescription(): ?string
    {
        return __('Representation of a user', 'users');
    }

    // Unique identifier of the resolved object
    public function getID(object $user)
    {
        return $user->ID;
    }

    // Class in charge of loading the objects from the database
    public function getTypeDataLoaderClass(): string
    {
        return UserTypeDataLoader::class;
    }
}
```

The type resolver does not directly load the objects from the database, but instead delegates this task to a TypeDataLoader object (in the example above, UserTypeDataLoader). This decoupling follows the SOLID principles, assigning different responsibilities to different entities to keep the code maintainable, extensible and understandable.

Adding username, email and url fields to the User type is done via a FieldResolver object:

```php
class UserFieldResolver extends AbstractDBDataFieldResolver
{
    // Types to which these fields are attached
    public static function getClassesToAttachTo(): array
    {
        return [
            UserTypeResolver::class,
        ];
    }

    // Fields handled by this resolver
    public static function getFieldNamesToResolve(): array
    {
        return [
            'username',
            'email',
            'url',
        ];
    }

    public function getSchemaFieldDescription(
        TypeResolverInterface $typeResolver,
        string $fieldName
    ): ?string {
        $descriptions = [
            'username' => __("User's username handle", "graphql-api"),
            'email' => __("User's email", "graphql-api"),
            'url' => __("URL of the user's profile in the website", "graphql-api"),
        ];
        return $descriptions[$fieldName];
    }

    public function getSchemaFieldType(
        TypeResolverInterface $typeResolver,
        string $fieldName
    ): ?string {
        $types = [
            'username' => SchemaDefinition::TYPE_STRING,
            'email' => SchemaDefinition::TYPE_EMAIL,
            'url' => SchemaDefinition::TYPE_URL,
        ];
        return $types[$fieldName];
    }

    // Compute the value of the field for the given user
    public function resolveValue(
        TypeResolverInterface $typeResolver,
        object $user,
        string $fieldName,
        array $fieldArgs = []
    ) {
        switch ($fieldName) {
            case 'username':
                return $user->user_login;
            case 'email':
                return $user->user_email;
            case 'url':
                return get_author_posts_url($user->ID);
        }
        return null;
    }
}
```

As can be observed, the definition of a field for the GraphQL schema, and its resolution, has been split into several functions:

- getSchemaFieldDescription

- getSchemaFieldType

- resolveValue

Other functions include:

- getSchemaFieldArgs: to declare the field arguments (including their name, description, type, and if they are mandatory or not)

- isSchemaFieldResponseNonNullable: to indicate if a field is non-nullable

- getImplementedInterfaceClasses: to define the resolvers for interfaces implemented by the fields

- resolveFieldTypeResolverClass: to define the type resolver when the field is a connection

- resolveFieldMutationResolverClass: to define the resolver when the field executes mutations

This code is more legible than if all functionality is satisfied through a single function, or through a configuration array, thus making it easier to implement and maintain the resolvers.

Retrieving plugin or custom CPT data

What happens when a plugin has not integrated its data into the GraphQL schema by creating new type and field resolvers? Could the user then query data from this plugin through GraphQL? For instance, let’s say that WooCommerce has a CPT for products, but it does not introduce the corresponding Product type to the GraphQL schema. Is it possible to retrieve the product data?

Concerning CPT entities, their data can be fetched via type GenericCustomPost, which acts as a kind of wildcard, to encompass any custom post type installed in the site. The records are retrieved by querying Root.genericCustomPosts(customPostTypes: [cpt1, cpt2, ...]) (in this notation for fields, Root is the type, and genericCustomPosts is the field).

Then, to fetch the product data, corresponding to CPT with name "wc_product", we execute this query:

```graphql
{
  genericCustomPosts(customPostTypes: ["wc_product"]) {
    id
    title
    url
    date
  }
}
```

However, the only fields available are those present in every CPT entity: title, url, date, etc. If the CPT for a product has data for price, a corresponding price field is not available. wc_product refers to a CPT created by the WooCommerce plugin, so for that, either the WooCommerce developers or the website’s developers will have to implement the Product type, and define its own custom fields.

CPTs are often used to manage private data, which must not be exposed through the API. For this reason, the GraphQL API initially only exposes the Page type, and requires defining which other CPTs can have their data publicly queried.
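The allow-list idea can be sketched in a few lines of PHP. This is only an illustration under stated assumptions: the function name `filterQueryableCustomPostTypes` and the hard-coded allow list are made up for this example, and are not the plugin's API (the plugin manages this list through its settings).

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: keep only the custom post types that the admin
 * has explicitly allowed to be queried through the API.
 *
 * @param string[] $requested CPT names requested in the query
 * @param string[] $allowed   CPT names allowed in the settings
 * @return string[]
 */
function filterQueryableCustomPostTypes(array $requested, array $allowed): array
{
    // array_intersect keeps the requested names that also appear in the allow list;
    // array_values re-indexes the result from 0
    return array_values(array_intersect($requested, $allowed));
}

// Assumed settings: only pages and products may be queried publicly
$allowed = ['page', 'wc_product'];
$queryable = filterQueryableCustomPostTypes(['wc_product', 'private_note'], $allowed);
```

Here `$queryable` contains only `wc_product`, since `private_note` was never opted in.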

Transitioning from REST to GraphQL via persisted queries

While GraphQL is provided as a plugin, WordPress has built-in support for REST, through the WP REST API. In some circumstances, developers working with the WP REST API may find it problematic to transition to GraphQL. For instance, consider these differences:

- A REST endpoint has its own URL, and can be queried via GET, while GraphQL normally operates through a single endpoint, queried via POST only

- The REST endpoint can be cached on the server-side (when queried via GET), while the GraphQL endpoint normally cannot

As a consequence, REST provides better out-of-the-box support for caching, making the application more performant and reducing the load on the server. GraphQL, instead, places more emphasis on caching on the client-side, as supported by the Apollo client.

After switching from REST to GraphQL, will the developer need to re-architect the application on the client-side, introducing the Apollo client just to introduce a layer of caching? That would be regrettable.

The “persisted queries” feature provides a solution for this situation. Persisted queries combine REST and GraphQL together, allowing us to:

- create queries using GraphQL, and

- publish the queries on their own URL, similar to REST endpoints.

The persisted query endpoint has the same behavior as a REST endpoint: it can be accessed via GET, and it can be cached server-side. But it was created using the GraphQL syntax, and the exposed data has no under/over fetching.
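To make the idea concrete, here is a minimal sketch of how a persisted query could be looked up from a GET request path. It is not the plugin's implementation: the `PersistedQueryStore` class, its methods, and the `/graphql-query/` base path are assumptions for illustration, and an in-memory array stands in for the queries stored in WordPress.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical in-memory store of persisted queries, keyed by URL slug.
 * This array stands in for wherever the published queries are saved.
 */
final class PersistedQueryStore
{
    /** @var array<string, string> slug => GraphQL query */
    private array $queries = [];

    public function publish(string $slug, string $query): void
    {
        $this->queries[$slug] = $query;
    }

    /**
     * Map a GET request path such as "/graphql-query/latest-posts/"
     * to the stored query, or null if no such query was published.
     */
    public function resolvePath(string $path, string $basePath = '/graphql-query/'): ?string
    {
        // Only paths under the base path can point to a persisted query
        if (strpos($path, $basePath) !== 0) {
            return null;
        }
        $slug = trim(substr($path, strlen($basePath)), '/');
        return $this->queries[$slug] ?? null;
    }
}

$store = new PersistedQueryStore();
$store->publish('latest-posts', '{ posts { id title url } }');

$query = $store->resolvePath('/graphql-query/latest-posts/');
$missing = $store->resolvePath('/graphql-query/unknown/');
```

Because the client only ever sends a URL, the response can be cached by any standard HTTP cache, exactly as with a REST endpoint.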

Extensibility

The architecture of the GraphQL API will define how easy it is to add our own extensions.

Decoupling type and field resolvers

The GraphQL API uses the Publish-subscribe pattern to have fields be “subscribed” to types.

Revisiting the field resolver from earlier on:

```php
class UserFieldResolver extends AbstractDBDataFieldResolver
{
    public static function getClassesToAttachTo(): array
    {
        return [UserTypeResolver::class];
    }

    public static function getFieldNamesToResolve(): array
    {
        return [
            'username',
            'email',
            'url',
        ];
    }
}
```

The User type does not know in advance which fields it will satisfy; instead, these (username, email and url) are injected into the type by the field resolver.

This way, the GraphQL schema becomes easily extensible. By simply adding a field resolver, any plugin can add new fields to an existing type (such as WooCommerce adding a field for User.shippingAddress), or override how a field is resolved (such as redefining User.url to return the user’s website instead).
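The subscription mechanism can be sketched as a small registry. This is a simplified illustration, not the plugin's code: the `FieldResolverRegistry` class, its method names, and the use of plain strings and closures instead of resolver classes are all assumptions made for this example.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical registry: field resolvers "subscribe" to types,
 * and a type discovers its fields by asking the registry.
 */
final class FieldResolverRegistry
{
    /** @var array<string, array<string, callable>> type => field name => resolver */
    private array $resolvers = [];

    public function attach(string $type, string $fieldName, callable $resolver): void
    {
        // A later attachment for the same field overrides the earlier one
        $this->resolvers[$type][$fieldName] = $resolver;
    }

    /** @return string[] */
    public function getFieldNames(string $type): array
    {
        return array_keys($this->resolvers[$type] ?? []);
    }

    /** @return mixed the resolved value, or null if no resolver is attached */
    public function resolve(string $type, string $fieldName, object $entity)
    {
        $resolver = $this->resolvers[$type][$fieldName] ?? null;
        return $resolver === null ? null : $resolver($entity);
    }
}

$registry = new FieldResolverRegistry();
$user = (object) ['login' => 'leo', 'website' => 'https://example.com'];

// A first resolver attaches the url field to the User type
$registry->attach('User', 'url', fn (object $u): string => '/author/' . $u->login);
// Another plugin adds a new field to the same type...
$registry->attach('User', 'username', fn (object $u): string => $u->login);
// ...and overrides how url is resolved
$registry->attach('User', 'url', fn (object $u): string => $u->website);

$fields = $registry->getFieldNames('User');
$url = $registry->resolve('User', 'url', $user);
```

The type itself never lists its fields, so adding or overriding one requires no change to the type's code.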

Code-first approach

Plugins must be able to extend the GraphQL schema. For instance, they could make available a new Product type, add an additional coauthors field on the Post type, provide a @sendEmail directive, or anything else.

To achieve this, the GraphQL API follows a code-first approach, in which the schema is generated from PHP code, at runtime.

The alternative approach, called SDL-first (Schema Definition Language), requires the schema be provided in advance, for instance, through some .gql file.

The main difference between these two approaches is that, in the code-first approach, the GraphQL schema is dynamic, adaptable to different users or applications. This suits WordPress, where a single site could power several applications (such as website and mobile app) and be customized for different clients. The GraphQL API makes this behavior explicit through the “custom endpoints” feature, which enables creating different endpoints, with access to different GraphQL schemas, for different users or applications.

To avoid performance hits, the schema is made static by caching it to disk or memory, and it is re-generated whenever a new plugin extending the schema is installed, or when the admin updates the settings.
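One way to picture this caching strategy is to derive the cache key from everything that can change the schema. The sketch below is an assumption-laden illustration rather than the plugin's code: the `SchemaCache` class is hypothetical, an in-memory array stands in for the disk or memory cache, and the schema is reduced to a plain array.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical schema cache: the key is a hash of the installed extensions
 * and the settings, so installing a plugin or updating the settings
 * produces a new key and triggers a re-generation.
 */
final class SchemaCache
{
    /** @var array<string, array> cache key => generated schema */
    private array $store = [];

    /** Number of times the schema had to be generated */
    public int $generations = 0;

    public function get(array $extensions, array $settings, callable $generate): array
    {
        // Normalize the order so that equivalent inputs share one key
        sort($extensions);
        ksort($settings);
        $key = md5(json_encode([$extensions, $settings], JSON_THROW_ON_ERROR));

        if (!isset($this->store[$key])) {
            $this->store[$key] = $generate();
            $this->generations++;
        }
        return $this->store[$key];
    }
}

$generate = fn (): array => ['types' => ['Post', 'User']];
$cache = new SchemaCache();

$cache->get(['posts', 'users'], ['nested-mutations' => false], $generate); // generated
$cache->get(['users', 'posts'], ['nested-mutations' => false], $generate); // served from cache
$cache->get(['posts', 'users'], ['nested-mutations' => true], $generate);  // settings changed
```

The expensive generation step runs only twice in this example, once per distinct combination of extensions and settings.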

Support for novel features

Another benefit of using the code-first approach is that it enables us to provide brand-new features that can be opted into, before these are supported by the GraphQL spec.

For instance, nested mutations have been requested for the spec but not yet approved. The GraphQL API complies with the spec, using types QueryRoot and MutationRoot to deal with queries and mutations respectively, as exposed in the standard schema. However, by enabling the opt-in “nested mutations” feature, the schema is transformed, and both queries and mutations will instead be handled by a single Root type, providing support for nested mutations.

Let’s see this novel feature in action. In this query, we first query the post through Root.post, then execute mutation Post.addComment on it and obtain the created comment object, and finally execute mutation Comment.reply on it and query some of its data (uncomment the first mutation to log the user in, as to be allowed to add comments):

```graphql
# mutation {
#   loginUser(
#     usernameOrEmail:"test",
#     password:"pass"
#   ) {
#     id
#     name
#   }
# }
mutation {
  post(id:1459) {
    id
    title
    addComment(comment:"That's really beautiful!") {
      id
      date
      content
      author {
        id
        name
      }
      reply(comment:"Yes, it is!") {
        id
        date
        content
      }
    }
  }
}
```

Dynamic behavior

WordPress uses hooks (filters and actions) to modify behavior. Hooks are simple pieces of code that, whenever triggered, can override a value or execute a custom action.

Is there an equivalent in GraphQL?

Directives to override functionality

Searching for a similar mechanism for GraphQL, I’ve come to the conclusion that directives could be considered the equivalent to WordPress hooks to some extent: like a filter hook, a directive is a function that modifies the value of a field, thus augmenting some other functionality. For instance, let’s say we retrieve a list of post titles with this query:

```graphql
query {
  posts {
    title
  }
}
```

…which produces this response:

```json
{
  "data": {
    "posts": [
      {
        "title": "Scheduled by Leo"
      },
      {
        "title": "COPE with WordPress: Post demo containing plenty of blocks"
      },
      {
        "title": "A lovely tango, not with leo"
      },
      {
        "title": "Hello world!"
      }
    ]
  }
}
```

These results are in English. How can we translate them to Spanish? With a @translate directive applied to the title field (implemented through this directive resolver), which gets the value of the field as an input, calls the Google Translate API to translate it, and has its result override the original input, as in this query:

```graphql
query {
  posts {
    title @translate(from: "en", to: "es")
  }
}
```

…which produces this response:

```json
{
  "data": {
    "posts": [
      {
        "title": "Programado por Leo"
      },
      {
        "title": "COPE con WordPress: publica una demostración que contiene muchos bloques"
      },
      {
        "title": "Un tango lindo, no con leo"
      },
      {
        "title": "¡Hola Mundo!"
      }
    ]
  }
}
```

Notice how directives are unconcerned with what their input is. In this case, it was the Post.title field, but it could’ve been Post.excerpt, Comment.content, or any other field of type String. Resolving fields and overriding their values are thus cleanly decoupled, and directives are always reusable.
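The reusability of a directive can be illustrated with a short PHP sketch. It is not how the plugin implements directive resolvers: the two functions below are hypothetical, entities are plain arrays, and `strtoupper()` stands in for a real transformation such as a call to a translation service.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical directive: receives the resolved value of any String field
 * and returns the value that replaces it in the response.
 */
function upperCaseDirective(string $value): string
{
    return strtoupper($value);
}

/**
 * Resolve one field for a list of entities, then pass each value
 * through the directive. The directive never learns which type or
 * field the value came from.
 *
 * @param array<int, array<string, string>> $entities
 * @return string[]
 */
function resolveFieldWithDirective(array $entities, string $fieldName, callable $directive): array
{
    return array_map(
        fn (array $entity): string => $directive($entity[$fieldName]),
        $entities
    );
}

$posts = [['title' => 'Hello world!', 'excerpt' => 'First post']];
$comments = [['content' => 'Nice one']];

// The same directive is applied to fields from two different types
$titles = resolveFieldWithDirective($posts, 'title', 'upperCaseDirective');
$contents = resolveFieldWithDirective($comments, 'content', 'upperCaseDirective');
```

As with a WordPress filter hook, the transformation is written once and applied wherever a value of the right type appears.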

Directives to connect to third parties

As WordPress keeps steadily becoming the OS of the web (currently powering 39% of all sites, more than any other software), it also progressively increases its interactions with external services (think of Stripe for payments, Slack for notifications, AWS S3 for hosting assets, and others).

As we’ve seen above, directives can be used to override the response of a field. But where does the new value come from? It could come from some local function, but it could just as well originate from some external service (as with the @translate directive seen earlier on, which retrieves the new value from the Google Translate API).

For this reason, GraphQL API has decided to make it easy for directives to communicate with external APIs, enabling those services to transform the data from the WordPress site when executing a query, such as for:

- translation,

- image compression,

- sourcing through a CDN, and

- sending emails, SMS and Slack notifications.

As a matter of fact, GraphQL API has decided to make directives as powerful as possible, by making them low-level components in the server’s architecture, even having the query resolution itself be based on a directive pipeline. This grants directives the power to perform authorizations, validations, and modification of the response, among others.
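A directive pipeline can be pictured as a list of stages that each receive the current state and return the next one. The sketch below is a loose illustration only: the `runDirectivePipeline` function, the shape of the state array, and the `loggedIn` flag are assumptions for this example, not the server's internal design.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical directive pipeline: every stage receives the current state
 * (the field value plus any errors) and returns the next state.
 * Once a stage records an error, the remaining stages are skipped.
 */
function runDirectivePipeline(array $stages, array $state): array
{
    foreach ($stages as $stage) {
        if ($state['errors'] !== []) {
            break;
        }
        $state = $stage($state);
    }
    return $state;
}

// Stage 1: authorization
$authorize = function (array $state): array {
    if (!$state['loggedIn']) {
        $state['errors'][] = 'User must be logged in';
    }
    return $state;
};
// Stage 2: resolve the value of the field
$resolve = function (array $state): array {
    $state['value'] = 'Hello world!';
    return $state;
};
// Stage 3: modify the response
$shout = function (array $state): array {
    $state['value'] = strtoupper($state['value']);
    return $state;
};

$stages = [$authorize, $resolve, $shout];
$allowed = runDirectivePipeline($stages, ['loggedIn' => true, 'value' => null, 'errors' => []]);
$denied = runDirectivePipeline($stages, ['loggedIn' => false, 'value' => null, 'errors' => []]);
```

For the logged-in user the value is resolved and transformed; for the visitor, the authorization stage stops the pipeline before the field is ever resolved.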

Localization

GraphQL servers using the SDL-first approach find it difficult to localize the information in the schema (the corresponding issue for the spec was created more than four years ago, and still has no resolution).

Using the code-first approach, though, the GraphQL API can localize the descriptions in a straightforward manner, through the __('some text', 'domain') PHP function, and the localized strings will be retrieved from the translation file (an MO file, compiled from the PO file that translates the plugin’s POT template) corresponding to the region and language selected in the WordPress admin.

For instance, as we saw earlier on, this code localizes the field descriptions:

```php
class UserFieldResolver extends AbstractDBDataFieldResolver
{
    public function getSchemaFieldDescription(
        TypeResolverInterface $typeResolver,
        string $fieldName
    ): ?string {
        $descriptions = [
            'username' => __("User's username handle", "graphql-api"),
            'email' => __("User's email", "graphql-api"),
            'url' => __("URL of the user's profile in the website", "graphql-api"),
        ];
        return $descriptions[$fieldName];
    }
}
```

User interfaces

The GraphQL ecosystem is filled with open source tools to interact with the service, many of which provide the same user-friendly experience expected in WordPress.

Visualizing the GraphQL schema is done with GraphQL Voyager.

This can prove particularly useful when creating our own CPTs, and checking out how and from where they can be accessed, and what data is exposed for them.

Executing the query against the GraphQL endpoint is done with GraphiQL.

However, this tool is not simple enough for everyone, since the user must have knowledge of the GraphQL query syntax. So, in addition, the GraphiQL Explorer is installed on top of it, to compose the GraphQL query by clicking on fields.

Access control

WordPress provides different user roles (admin, editor, author, contributor and subscriber) to manage user permissions, and users can be logged in to the wp-admin (e.g. the staff), logged in to the public-facing site (e.g. clients), or not logged in or without an account (any visitor). The GraphQL API must account for these, making it possible to grant granular access to different users.

Granting access to the tools

The GraphQL API allows configuring who has access to the GraphiQL and Voyager clients to visualize the schema and execute queries against it:

- Only the admin?

- The staff?

- The clients?

- Openly accessible to everyone?

For security reasons, the plugin, by default, only provides access to the admin, and does not openly expose the service on the Internet.

In the images from the previous section, the GraphiQL and Voyager clients are shown in the wp-admin, where they are available to the admin user only. The admin user can grant access to users with other roles (editor, author, contributor) through the settings.

To grant access to our clients, or to anyone on the open Internet, we don’t want to give them access to the WordPress admin. Instead, the settings make it possible to expose the tools under a new, public-facing URL (such as mywebsite.com/graphiql and mywebsite.com/graphql-interactive). Exposing these public URLs is an opt-in choice, explicitly set by the admin.

Granting access to the GraphQL schema

The WP REST API does not make it easy to customize who has access to some endpoint or field within an endpoint, since no user interface is provided and it must be accomplished through code.

The GraphQL API, instead, makes use of the metadata already available in the GraphQL schema to enable configuration of the service through a user interface (powered by the WordPress editor). As a result, non-technical users can also manage their APIs without touching a line of code.

Managing access control to the different fields (and directives) from the schema is accomplished by clicking on them and selecting, from a dropdown, which users (like those logged in or with specific capabilities) can access them.
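What the user interface produces can be thought of as a list of rules per field, checked when a query is executed. The following sketch makes that idea tangible under clearly stated assumptions: the `canAccessField` function, the rule format, and the choice to treat fields without a rule as public are all hypothetical and not taken from the plugin.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical access check. Rules are keyed by "Type.field" and mirror
 * the options described above: anyone, logged-in users, or users holding
 * a given capability. Fields without a rule are treated as public here.
 *
 * @param array<string, array<string, string>> $accessControl
 * @param array{capabilities: string[]}|null   $user null for visitors
 */
function canAccessField(array $accessControl, string $field, ?array $user): bool
{
    $rule = $accessControl[$field] ?? ['type' => 'public'];
    switch ($rule['type']) {
        case 'public':
            return true;
        case 'logged-in':
            return $user !== null;
        case 'capability':
            return $user !== null
                && in_array($rule['capability'], $user['capabilities'], true);
    }
    // Unknown rule types deny access
    return false;
}

$accessControl = [
    'User.email' => ['type' => 'capability', 'capability' => 'list_users'],
    'Post.comments' => ['type' => 'logged-in'],
];
$admin = ['capabilities' => ['list_users', 'edit_posts']];
$subscriber = ['capabilities' => ['read']];

$adminSeesEmail = canAccessField($accessControl, 'User.email', $admin);
$subscriberSeesEmail = canAccessField($accessControl, 'User.email', $subscriber);
$visitorSeesComments = canAccessField($accessControl, 'Post.comments', null);
```

Since the rules are plain data, they can be edited from a settings screen without anyone touching the resolvers.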

Preventing conflicts

Namespacing helps avoid conflicts whenever two plugins use the same name for their types. For instance, if both WooCommerce and Easy Digital Downloads implement a type named Product, it would become ambiguous to execute a query to fetch products. Then, namespacing would transform the type names to WooCommerceProduct and EDDProduct, resolving the conflict.

The likelihood of such conflict arising, though, is not very high. So the best strategy is to have it disabled by default (as to keep the schema as simple as possible), and enable it only if needed.

If enabled, the GraphQL server automatically namespaces types using the corresponding PHP package name (for which all packages follow the PHP Standard Recommendation PSR-4). For instance, in a regular GraphQL schema containing the types Post and Comment, with namespacing enabled, Post becomes PoPSchema_Posts_Post, Comment becomes PoPSchema_Comments_Comment, and so on.
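The renaming rule can be expressed as a small function. This is a sketch of the transformation described above, not the plugin's code: the `namespaceTypeName` helper and its parameters are hypothetical, and the PHP namespaces passed to it are inferred from the resulting type names.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: prefix a type name with its PHP package namespace,
 * replacing the namespace separator with an underscore
 * (GraphQL names may only contain letters, digits and underscores).
 */
function namespaceTypeName(string $phpNamespace, string $typeName, bool $enabled): string
{
    // With namespacing disabled, the schema keeps the simple name
    if (!$enabled) {
        return $typeName;
    }
    return str_replace('\\', '_', trim($phpNamespace, '\\')) . '_' . $typeName;
}

$plain = namespaceTypeName('PoPSchema\\Posts', 'Post', false);
$post = namespaceTypeName('PoPSchema\\Posts', 'Post', true);
$comment = namespaceTypeName('PoPSchema\\Comments', 'Comment', true);
```

Two plugins that both define a Product type would end up with different prefixes, as long as their PHP namespaces differ.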

That’s all, folks

Both WordPress and GraphQL are captivating topics on their own, so I find the integration of WordPress and GraphQL greatly endearing. Having been at it for a few years now, I can say that designing the optimal way to have an old CMS manage content, and a new interface access it, is a challenge worth pursuing.

I could continue describing how the WordPress philosophy can influence the implementation of a GraphQL service running on WordPress, talking about it even for several hours, using plenty of material that I have not included in this write-up. But I need to stop… So I’ll stop now.

I hope this article has managed to provide a good overview of the whys and hows for satisfying the WordPress philosophy in GraphQL, as done by plugin GraphQL API for WordPress.

The post Rendering the WordPress philosophy in GraphQL appeared first on CSS-Tricks.
