The Importance of Regularly Revising Your Company’s Data Protocols

Business owners have access to many tools that are vital to their success, and data is one of them. Data is especially valuable to a business that knows how to collect, analyze, and use it.

Staying on top of your company’s data protocols is also essential. As your business evolves, your rules and procedures for handling data must evolve with it. Doing so gives your company an advantage in several ways, which we explore below.

Provide Better Security for Company and Customer Data

Regularly revising your company’s data protocols is critical to ensure you always provide the best security for your business and customer data. Outdated data protocols can lead to serious data breaches and security issues.

For example, when employees aren’t following your most recent procedures to secure data, they’re more vulnerable to scams like phishing.

Phishing is a cybercrime in which someone posing as a legitimate contact reaches out by email, phone, or text message. Their goal is to trick you into handing over confidential information, such as business account numbers or your customers’ personal information.

If you aren’t following the most up-to-date data security protocols, you leave yourself, your customers, and your company open to phishing and other scams that could cause serious harm.

Leverage Modern Data Analytics Tools

When you consistently review your company’s data protocols, you may find that you need better tools at some point. These reviews give you opportunities to bring in modern data analytics tools that can make your collection and analysis processes faster and more effective.

For example, analytic process automation (APA) can drastically improve the efficiency of your collection process and how you use the data you gather. This matters because, as powerful as data can be for a business, too much of it can leave teams unsure of how to proceed.

APA technology can take those large datasets, analyze them for prescriptive and predictive insights, translate those insights into tangible actions, and share them with the appropriate team members.

If you neglect to revise your data policies and procedures, you’ll miss the chance to adopt modern data analytics tools that can collect and store information critical to the success of your team and company.

Standardize Your Data Collection Process

One of the biggest mistakes companies make is not having a standardized process for data collection. They simply implement analytics tools and collect as much data as possible, with no clear plan for what to do with it afterward.

As a result, these companies aren’t collecting the data they need, nor are they putting what they gather to good use, which keeps them a step behind their biggest competitors.

Regularly revising data protocols can help you refine your process for collecting data so that it’s done in an organized, productive way.

Improve Your Use of Data

In addition to standardizing your collection process, improving your company’s data protocols can help you make better use of data. Collecting valuable data is only part of the responsibility.

The other part, and arguably the most critical, is how you analyze and use that data to improve your business. When you review and revise your data protocols, you should assess the effectiveness of your analysis process as well as your utilization procedures.

Doing this regularly allows you to continually refine:

- How you pull meaningful insights from the information you collect;

- How you turn those insights into tangible actions that move your business forward.

Enhance Your Marketing and Sales Campaigns

Two departments that rely heavily on data are marketing and sales. Both departments use data to understand customers better and create campaigns tailored to who they are and how they behave.

The more personalized your marketing and sales campaigns are, the more likely they are to resonate with your customers and drive conversions. However, keeping those campaigns effective depends on revising your company’s data protocols often.

Managing and adjusting your data protocols helps ensure you always collect the most accurate, useful data and analyze it effectively. Revising your protocols also formalizes how you use data in marketing and sales, so the experience is consistent for your customers.

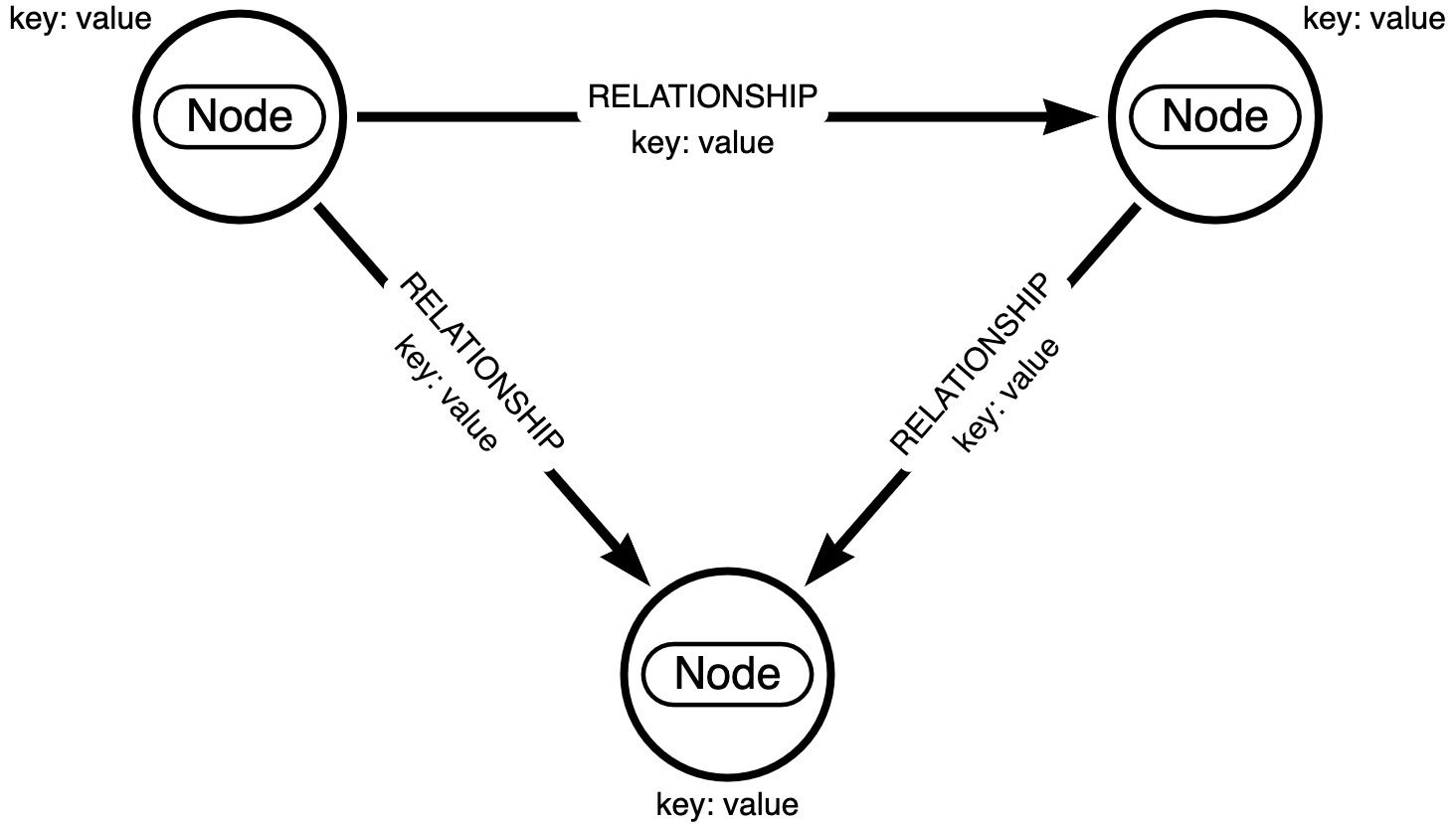

Define Guidelines for Data Classification

How you classify your data is essential for all of your departments. If each department organizes data differently, chaos and confusion are almost guaranteed. Silos will form, and your teams won’t share data effectively, let alone understand and use it, and the customer experience will suffer as a result.

Reviewing your data protocols ensures everyone in your company follows the most up-to-date data classification guidelines. No matter their department, your employees will be on the same page about where data belongs and why.

Create a More Cohesive and Collaborative Team

One of the most significant benefits of regularly revising your data protocols is building a more cohesive and collaborative team with a shared, data-driven culture.

Every department uses data in some way. But if each person handles data in their own way, the result is a disjointed workflow and inefficient data collection, analysis, and use.

On the other hand, when you give your team data best practices to abide by, you can develop a more cohesive operation. Consistently revising your protocols will ensure your team knows the following:

- How to identify the most valuable data to collect;

- What to do once they gather data;

- Best practices for examining data for meaningful insights;

- Whom to contact for help with data;

- Steps to take to turn insights into actions.

Keep your team cohesive, collaborative, and productive by establishing guidelines for data use in your company and constantly adjusting them to better fit how your team works.

Stay in Line With Laws and Regulations

Data collection and analysis are becoming increasingly common practices in the business world. However, that doesn’t mean companies can collect whatever data they want whenever they want.

There are laws and regulations, such as the EU’s General Data Protection Regulation (GDPR), that dictate how companies can collect data and what kind of information they can gather about their customers. Ignoring these laws and regulations can cost you financially and damage your business’s reputation.

Regularly revising your company’s data protocols can help you stay in line with laws and regulations. This helps ensure you’re collecting and using data lawfully and ethically, which is especially important if you’re in a high-risk or highly regulated industry.

Conclusion

Regularly revising your company’s data protocols is crucial for many reasons. Data will become an even more powerful tool for businesses as time goes on, so adjust your protocols consistently to make sure data continues to have a meaningful influence on your business.

The post The Importance of Regularly Revising your Company’s Data Protocols appeared first on noupe.