This article is sponsored by STUDIO

For years, reports have been warning of technology taking away jobs, particularly in fields like food preparation, truck driving, and warehouse operations. These jobs are often considered “blue-collar” and involve repetitive manual labor. However, many in the creative community believed their careers were immune to automation. After all, a designer’s craft is anything but monotonous. While computers can crunch numbers quickly, how are they going to be able to design?

Then something surprising happened: artificial intelligence (AI) made inroads into design. In product design, Mattel is using AI technology to help design its toys. In interior design, designers are creating mockups with AI that can detect floors, walls, and furniture and change them. In graphic design, Nestlé used an AI-retouched Vermeer painting in marketing for one of its yogurt brands. Advertising agency BBDO is experimenting with producing materials with Stable Diffusion.

But what about fields with a distinctly defined medium, such as web design? This article provides a brief history of AI in web design, explores its current implications for creativity, and offers suggestions for how web designers can stay ahead of the curve.

The Road Leading Here

The capabilities outlined above are the result of decades of development, which has accelerated rapidly in recent years thanks to more advanced computational models, more training data for improving those models, and greater computing power to run them.

In 1950, Alan Turing, often called the father of modern computer science, asked the famous question: Can machines think? Early research attempted to teach machines human knowledge through declarative rules, an approach that eventually proved difficult given the many implicit rules in our daily lives.

In the 1990s, this knowledge-feeding approach gave way to a data-driven one. Scientists began creating programs that let computers learn from large amounts of data using neural network architectures, loosely modeled on how the human brain functions. Progress accelerated in this era, with breakthroughs including IBM’s Deep Blue beating world chess champion Garry Kasparov in 1997 and, later, Google Brain’s deep neural network learning to discover and categorize objects on its own.

More recently, advances in neural network architectures, data availability, and computing power have further accelerated machines’ capabilities. In 2014, Ian Goodfellow and his colleagues introduced the generative adversarial network (GAN), which allows machines to generate new data with the same statistics as the original data set. This work helped set the stage for AI models such as DALL·E 2, Stable Diffusion, and Midjourney in 2022, which demonstrate the creative abilities outlined at the beginning of the article.
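For readers curious about what “adversarial” means here: the 2014 GAN paper frames training as a two-player minimax game between a generator G, which maps random noise z to synthetic samples, and a discriminator D, which outputs the probability that its input came from the real data. The standard objective from that paper is:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

D is trained to maximize V by telling real samples from generated ones, while G is trained to minimize it by fooling D. In the idealized analysis, the game reaches its optimum when the distribution of G’s outputs matches p_data, which is what generating data “with the same statistics” as the training set refers to.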

Next, we will explore the implications of these technologies for web designers.

Today’s Implications

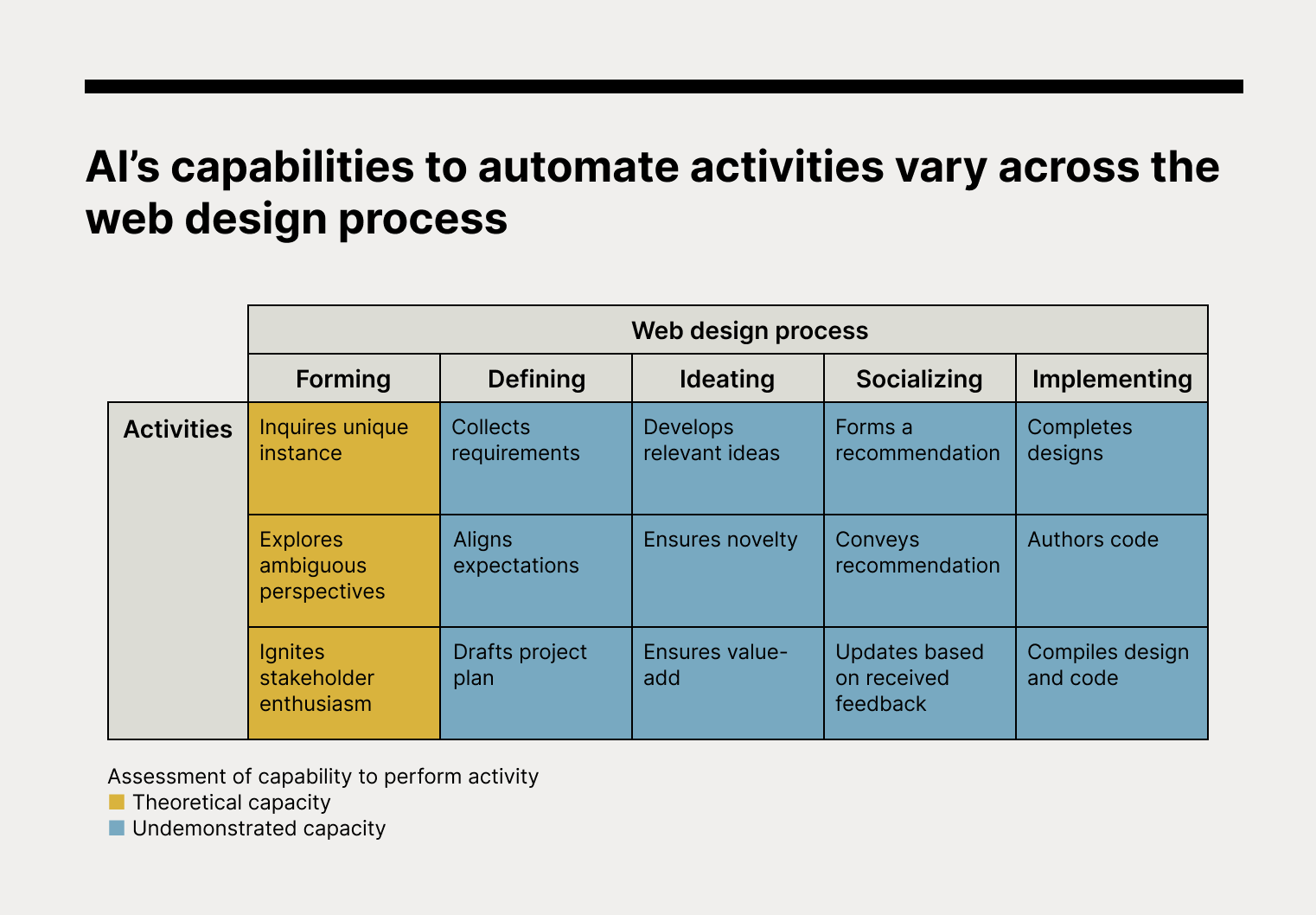

Today, designers and clients typically go through five stages together before arriving at a new website. The term “client” is used loosely and can refer to inter-departmental teams working on in-house websites or to an individual building a website on their own.

- Forming: The designer works with the client to assess the context for a website design.
- Defining: The designer extracts the complete set of requirements and drafts a project plan to meet expectations.
- Ideating: The designer generates tailored ideas that meet the requirements.
- Socializing: The designer presents the ideas to the client and helps them choose one to proceed with.
- Implementing: The designer creates high-fidelity designs, which are then turned into code for deployment.

To better understand the impact of AI, we will break down the five stages of the web design process and examine the specific activities involved. Using the latest academic research and deployment examples, we will assess AI’s theoretical capability to perform the activities in each stage. Our team will also create a webpage with AI technologies that everyone has access to today and compare the result with the manual process for a practical perspective.

Forming

Forming calls for the designer to inquire about the unique instance, explore ambiguous perspectives, and ignite stakeholder enthusiasm.

- Inquires about the unique instance: Undemonstrated capacity

When taking on a new client, it’s crucial to evaluate their unique context and determine whether web design is the right solution to meet their business goals. However, current AI models often struggle to analyze subjects that aren’t included in their training data sets. Because it is impossible to collect comprehensive data on every business in advance, current AI models lack the ability to be inquisitive about each unique instance.

- Explores ambiguous perspectives: Undemonstrated capacity

At the beginning of the engagement, it is essential to consider multiple perspectives and use that information to guide exploration. For example, a designer might learn about the emotional roots of a client’s brand and use that knowledge to inform the website redesign. While AI models from institutions like MIT and Microsoft have shown early promise in recognizing abstract concepts and understanding emotions, they still lack the ability to fully adopt human perspectives. As a recent article from Harvard Business Review pointed out, empathy is still a key missing ingredient in today’s AI models.

- Ignites stakeholder enthusiasm: Undemonstrated capacity

In order to set up a project for success, both the client and designer must be enthusiastic and committed to seeing it through to completion. While AI has shown potential in creating copy that resonates with consumers and motivates them to make a purchase, it remains unproven when it comes to sparking motivation for long-term business engagements that require sustained effort and input.

The AI Experiment

Designers

In preparation for a product launch, our designers evaluated the different launch approaches and decided to build a landing page. They intuitively decided to focus on nostalgic emotions because of the emotional connection many designers have with their tools. The team worked closely with product managers to get them excited.

AI

For the purpose of this article, the design team also attempted to use AI for the same tasks. General conversational models like ChatGPT were unable to judge whether we actually needed a website and offered only generic advice. When it came to generating early directions, the models mostly produced results that skewed toward functional differentiation, failing to consider the empathy and emotions that could make designers and stakeholders enthusiastic.

Defining

Defining calls for the designer to collect detailed requirements, set expectations, and draft a project plan.

- Collects requirements: Theoretical capacity

To ensure that all detailed requirements are collected, clients should be encouraged to verbalize their needs in terms of technical specifications, page count, and launch dates. AI models are now capable of performing these requirement-collection tasks. Thanks to examples of human exchanges fed to the models, Natural Language Processing (NLP) and Natural Language Understanding (NLU) have enabled AI to parse, understand, and respond to inputs. One of the latest models, OpenAI’s ChatGPT, can ask for additional context, answer follow-up questions, and reject inappropriate requests. AI models are already being deployed for customer service and have shown positive results in terms of trust and satisfaction.

- Aligns expectations: Theoretical capacity

The client and designer should align on criteria such as acceptance standards and future communication schedules. To help facilitate this alignment, AI models are now capable of handling negotiations autonomously. In academia, research from Meta (formerly Facebook) shows how AI models can use simulation and prediction to complete negotiations on their own. In the business world, companies like Pactum are helping global retailers secure the best possible terms in B2B purchases with their proprietary AI models.

-

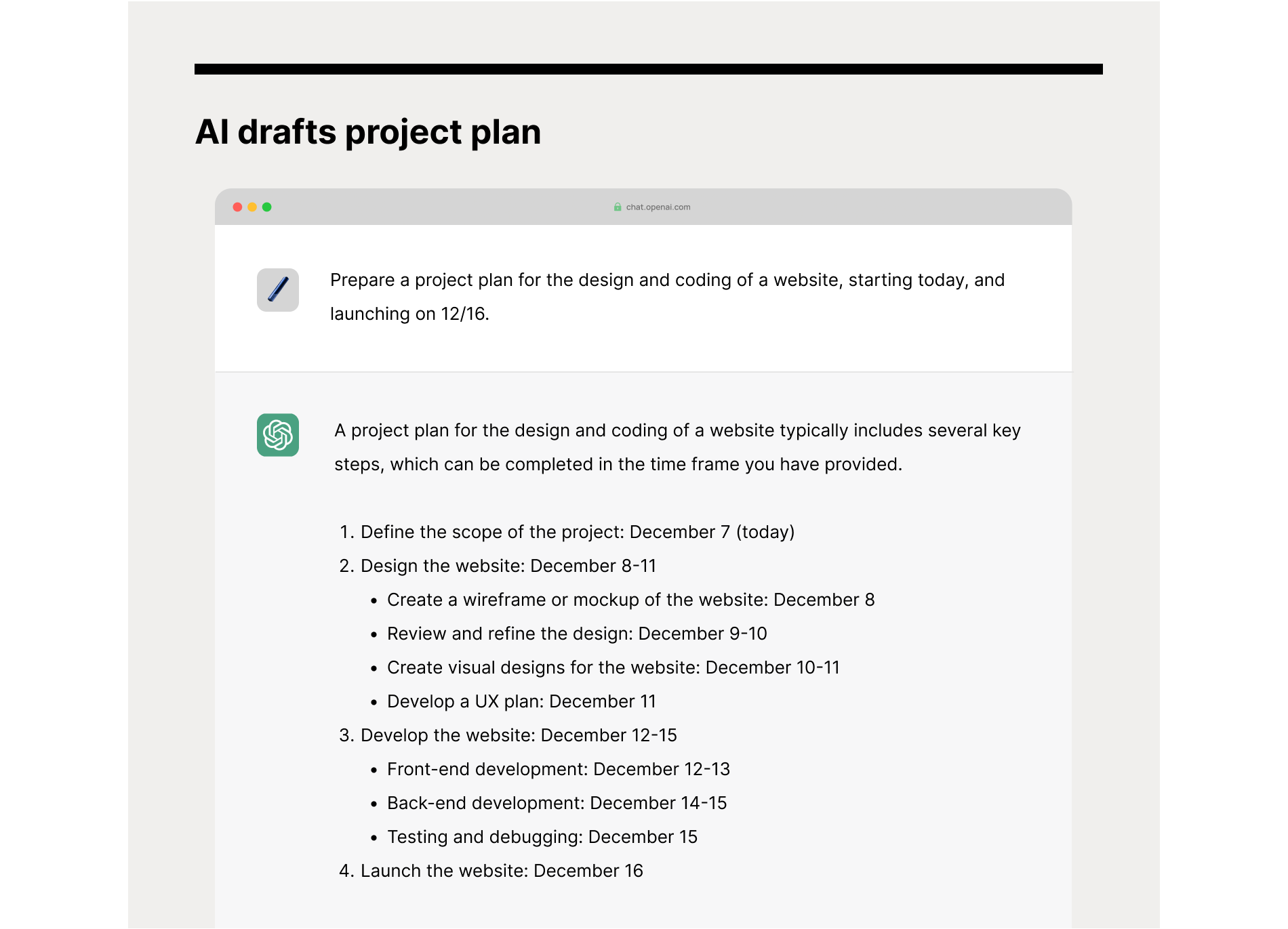

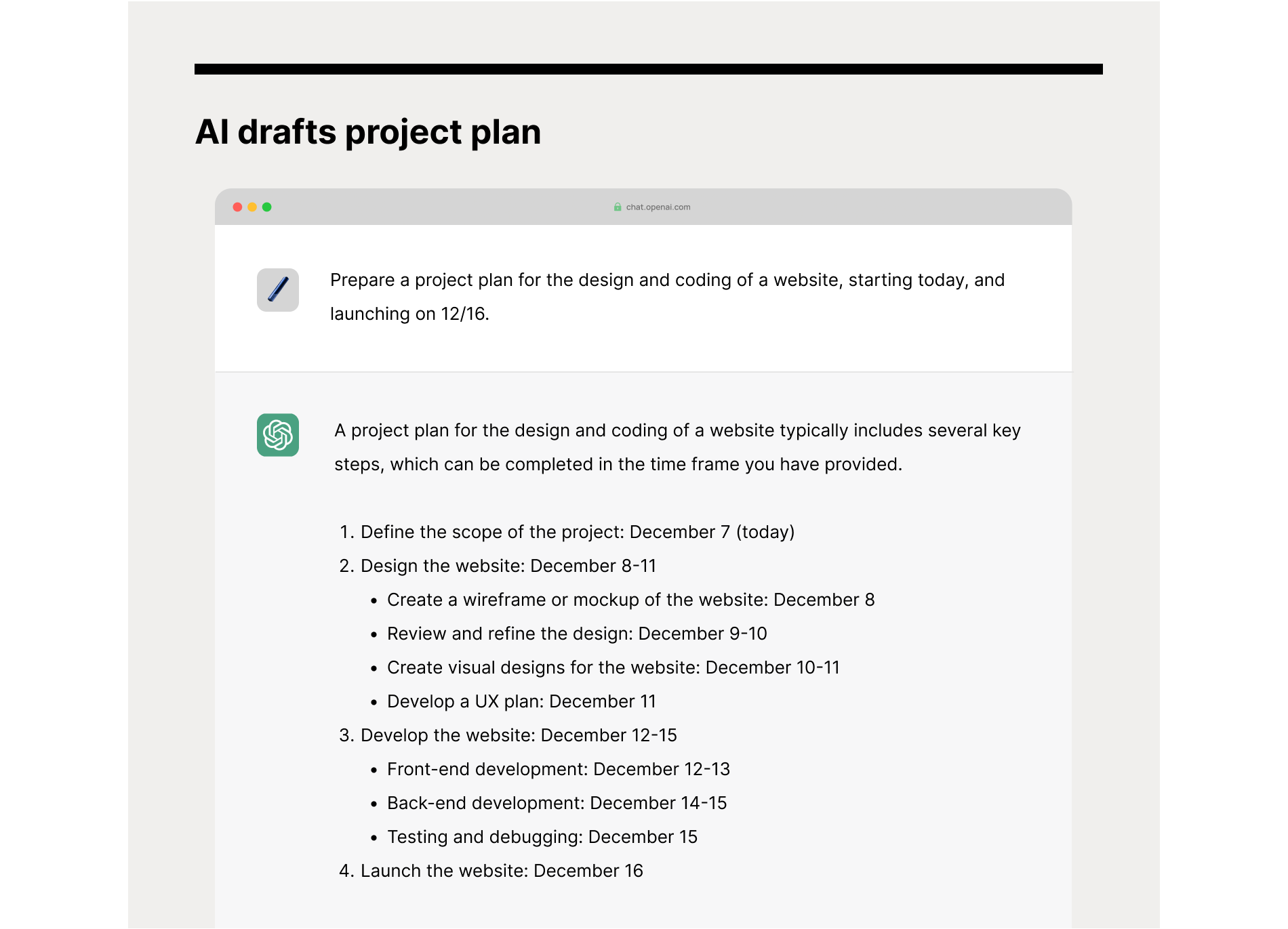

Drafts project plan: Theoretical capacity

To ensure that a project stays on track, it’s important for the designer to establish milestones and deadlines. AI models are now capable of estimating task durations and sequencing activities in a project. In 2017, researchers demonstrated the use of a machine learning algorithm called a support vector machine (SVM) to forecast project timelines. Further research has also established the use of artificial neural networks for defining task relationships and creating work breakdown structure (WBS) charts.
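The SVM and neural-network approaches cited above learn durations and task relationships from historical project data. Once durations and dependencies are known, though, turning them into a schedule is a classic deterministic step. The sketch below is not the method from that research; it swaps in the standard critical-path calculation using Python’s standard-library `graphlib`. The task names, durations, and dependencies are hypothetical placeholders, standing in for values an estimation model might output.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks for a landing-page project: name -> (duration in days, prerequisites).
# In the scenario described above, the durations might come from an estimation model.
TASKS = {
    "requirements": (2, []),
    "wireframes": (3, ["requirements"]),
    "copywriting": (4, ["requirements"]),
    "visual_design": (5, ["wireframes"]),
    "development": (6, ["visual_design", "copywriting"]),
    "review": (2, ["development"]),
}


def schedule(tasks):
    """Return {task: (earliest_start, earliest_finish)} and the total project length.

    Tasks are visited in dependency order, so every prerequisite's finish day
    is known before any task that depends on it is scheduled.
    """
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    times = {}
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((times[d][1] for d in deps), default=0)
        times[name] = (start, start + duration)
    total = max(finish for _, finish in times.values())
    return times, total


def critical_path(tasks, times):
    """Walk back from the last-finishing task through prerequisites with zero slack."""
    current = max(times, key=lambda t: times[t][1])
    path = [current]
    while tasks[current][1]:
        start = times[current][0]
        # A prerequisite is critical if it finishes exactly when this task starts.
        current = next(d for d in tasks[current][1] if times[d][1] == start)
        path.append(current)
    return list(reversed(path))


if __name__ == "__main__":
    times, total = schedule(TASKS)
    for name, (start, finish) in sorted(times.items(), key=lambda kv: kv[1]):
        print(f"{name:14} day {start:>2} -> day {finish:>2}")
    print("total:", total, "days")
    print("critical path:", " -> ".join(critical_path(TASKS, times)))
```

Any delay on the critical path pushes back the launch date, which is why milestones are usually anchored to it; tasks off the path (here, copywriting) have some slack.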

The AI Experiment

Designers

Designers collected requirements from the product team using a tried-and-true questionnaire. The landing page needed to be ready by the product launch date, so the teams discussed the scope. After some frustrating back-and-forth in which each team accused the other of not having a clue, they finally came to a mutual agreement on a project plan.

AI

The designers then tried the same with ChatGPT, having the AI role-play as the design team to collect requirements from the product team. The AI performed admirably, even inspiring the team to add new items to their questionnaire. The designers then asked it to create a project plan, feeding it the same concerns they had received from the product team. Although the designers did not expect to use the resulting schedule as-is, since factors like the team’s current workload were not considered, they still thought it performed reasonably well.

Ideating

Ideating calls for the designer to develop ideas that are relevant to the previously defined criteria, novel enough to contribute to the client’s differentiation, and valuable in supporting the client’s business outcomes.

- Develops relevant ideas: Theoretical capacity

Ideas generated should align with consensus from earlier stages. Today’s AI models, like OpenAI’s DALL·E 2, can generate output that aligns with prompt criteria by learning the relationship between prompts and outputs through training data. This allows the AI to produce design ideas, including for UI design, that reflect the prompt criteria.

-

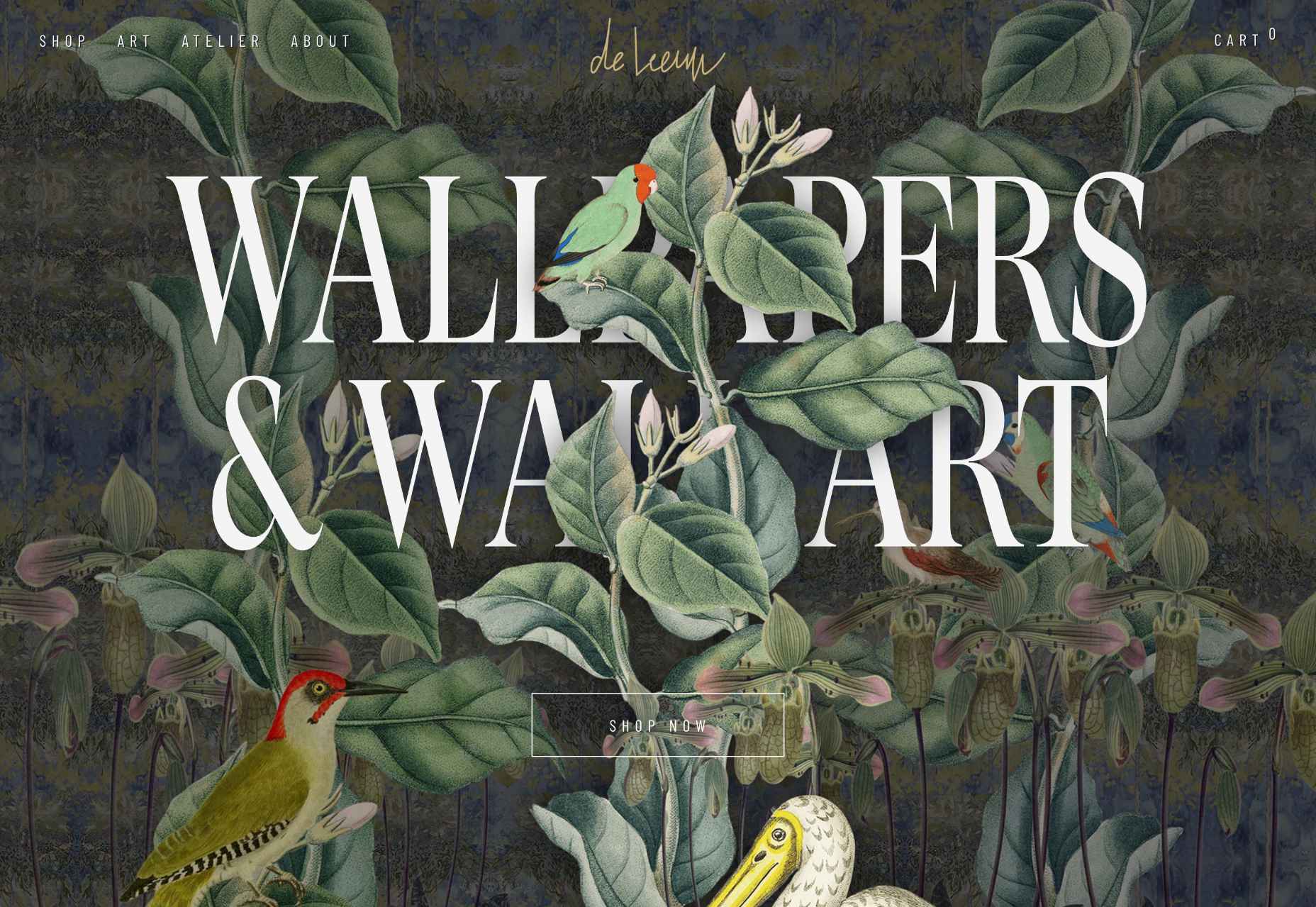

Ensures novelty: Theoretical capacity

Ideas generated should offer fresh impressions rather than mere copies of existing executions. Today’s AI models can generate novel output using diffusion techniques. A diffusion model is trained by gradually adding noise to its training images and learning to reverse that corruption; to generate, it starts from random noise and removes it step by step, producing new data that resembles what it has learned. This lets the AI combine aspects of what it has learned into new ideas, much as humans combine known concepts to create new ones. Imagen Video by Google, Make-A-Video by Meta, Midjourney, and Stable Diffusion are all examples of AI models that can generate novel output.
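To make diffusion a little less abstract, the sketch below shows only the forward (noising) half of a DDPM-style diffusion process on a toy list of pixel values, using the closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ε is Gaussian noise. The linear beta schedule (1e-4 to 0.02 over 1,000 steps) is a common illustrative choice, assumed here rather than taken from any product named above, and the trained denoising network that runs the process in reverse is omitted.

```python
import math
import random


def linear_betas(steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step (a linear schedule, as in DDPM)."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much original signal survives to step t."""
    out, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        out.append(running)
    return out


def noisy_sample(x0, t, a_bars, rng):
    """Jump straight to step t: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise."""
    a = a_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.8, -0.2, 0.5, -0.9]  # toy "pixels", scaled to roughly [-1, 1]
    a_bars = alpha_bars(linear_betas(1000))
    for t in (0, 250, 999):
        print(t, round(a_bars[t], 4), [round(v, 2) for v in noisy_sample(image, t, a_bars, rng)])
```

At an early step the sample is nearly the original; by the last step almost no signal remains and it is close to pure noise. Generation runs in the opposite direction: a trained network starts from noise like this and removes it step by step.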

- Ensures value-add: Theoretical capacity

Ideas generated should add value for the client. AI models can compete with or surpass humans in this area thanks to their ability to learn from large amounts of data and their unmatched computational power for identifying patterns. This makes AI a strong candidate for inspiring, deriving, and supercharging ideas, providing value that may be difficult for humans to achieve on their own.

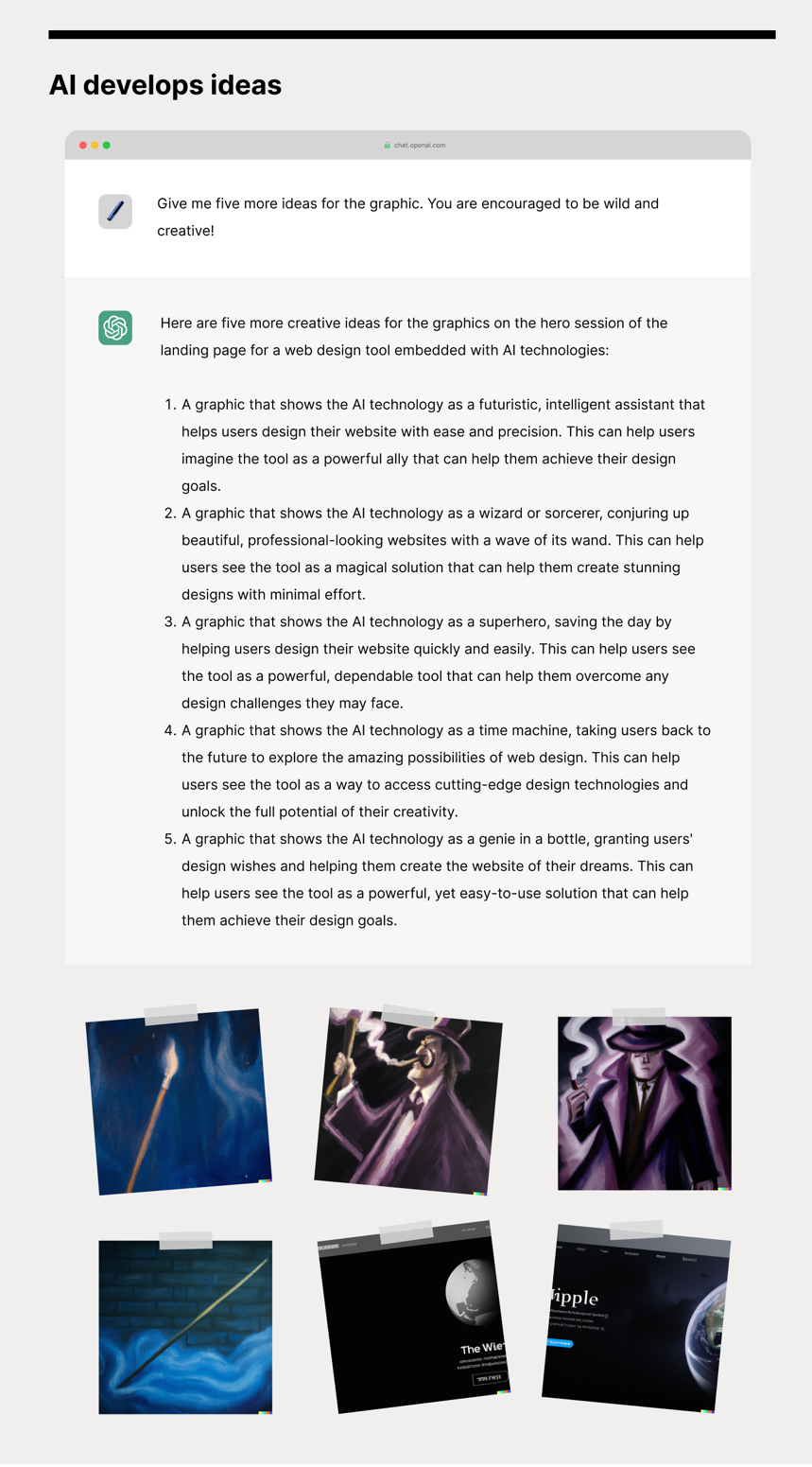

The AI Experiment

Designers

Designers brainstormed a couple of ideas for the hero section based on the “nostalgia” direction discussed in earlier stages.

AI

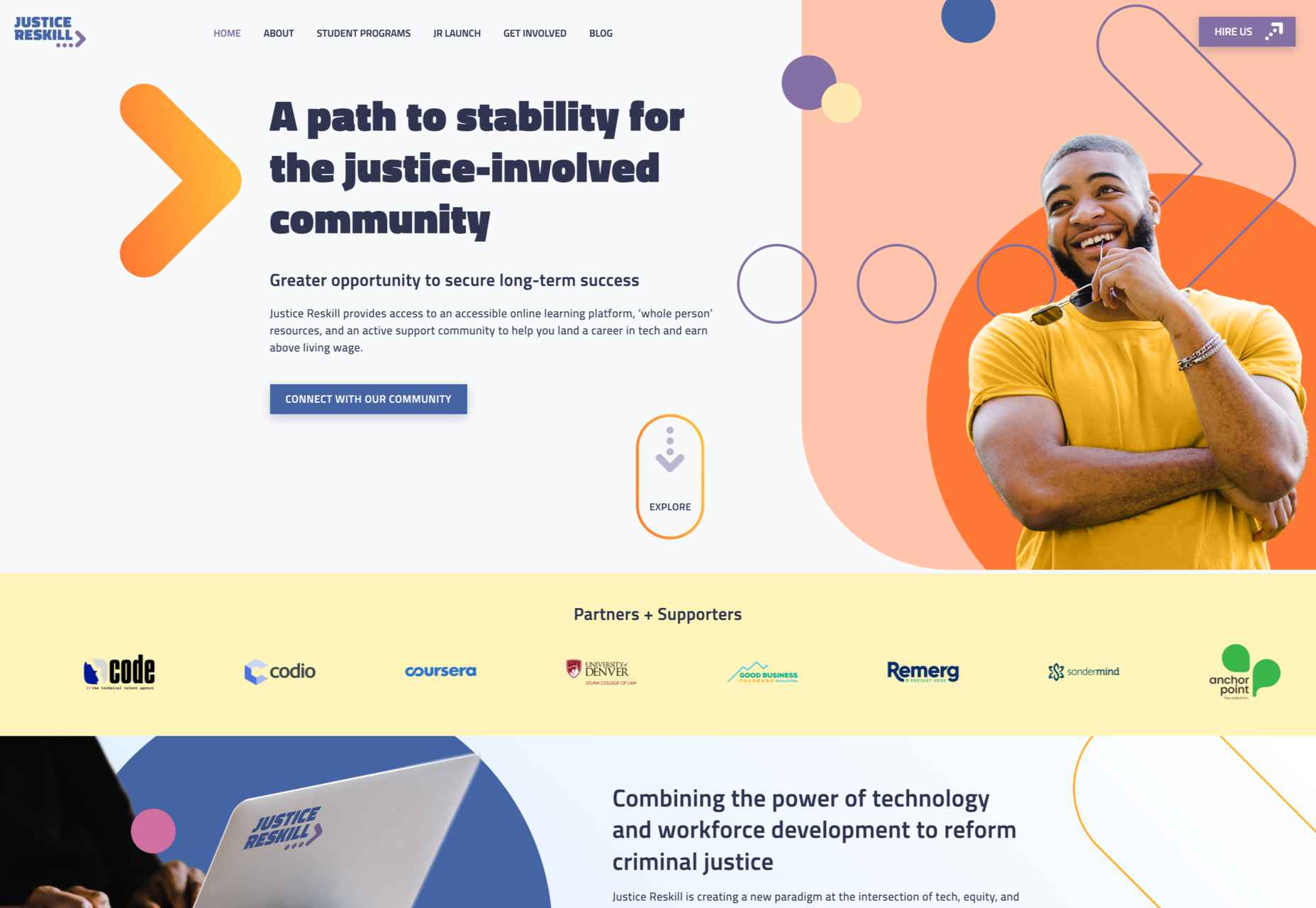

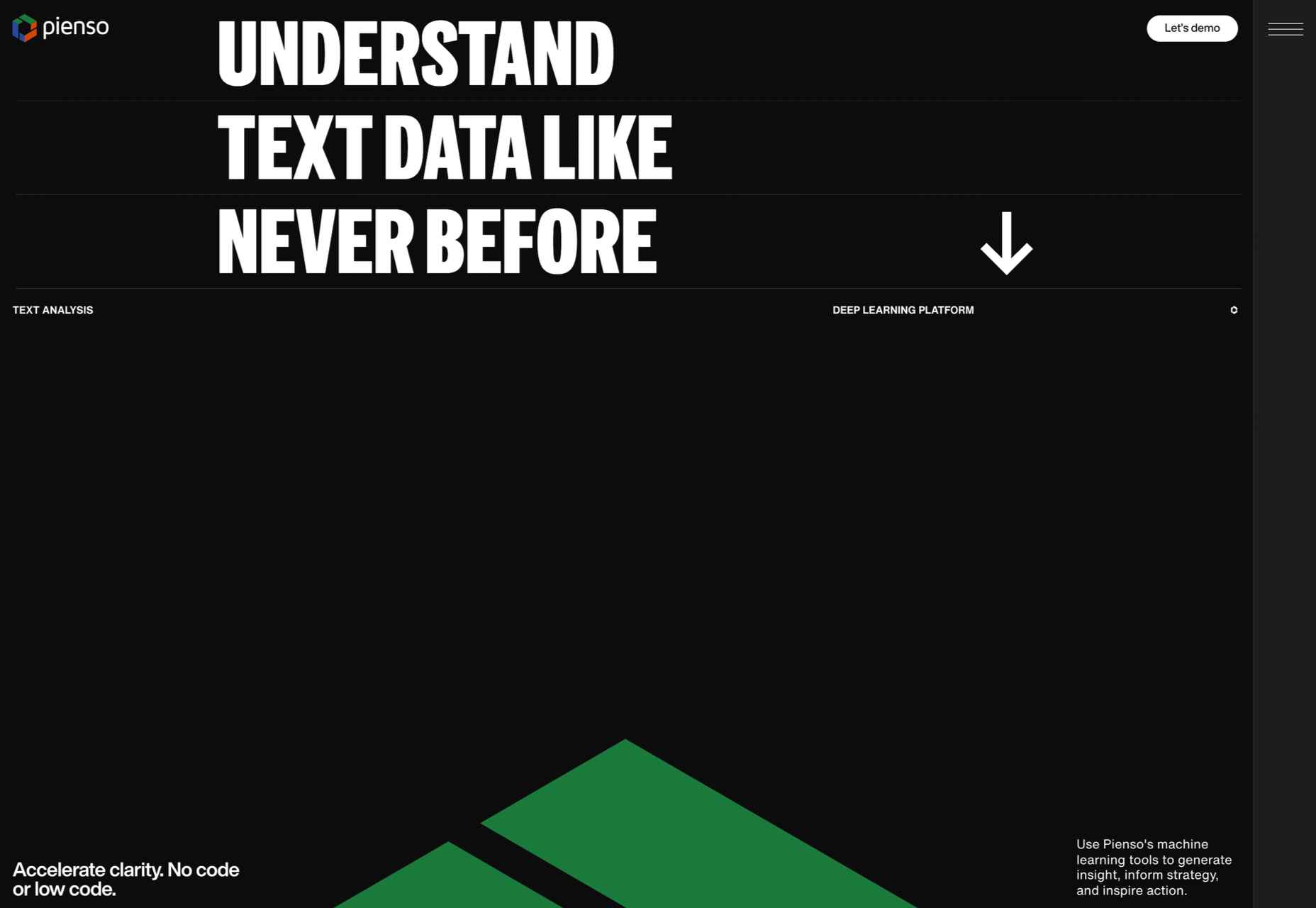

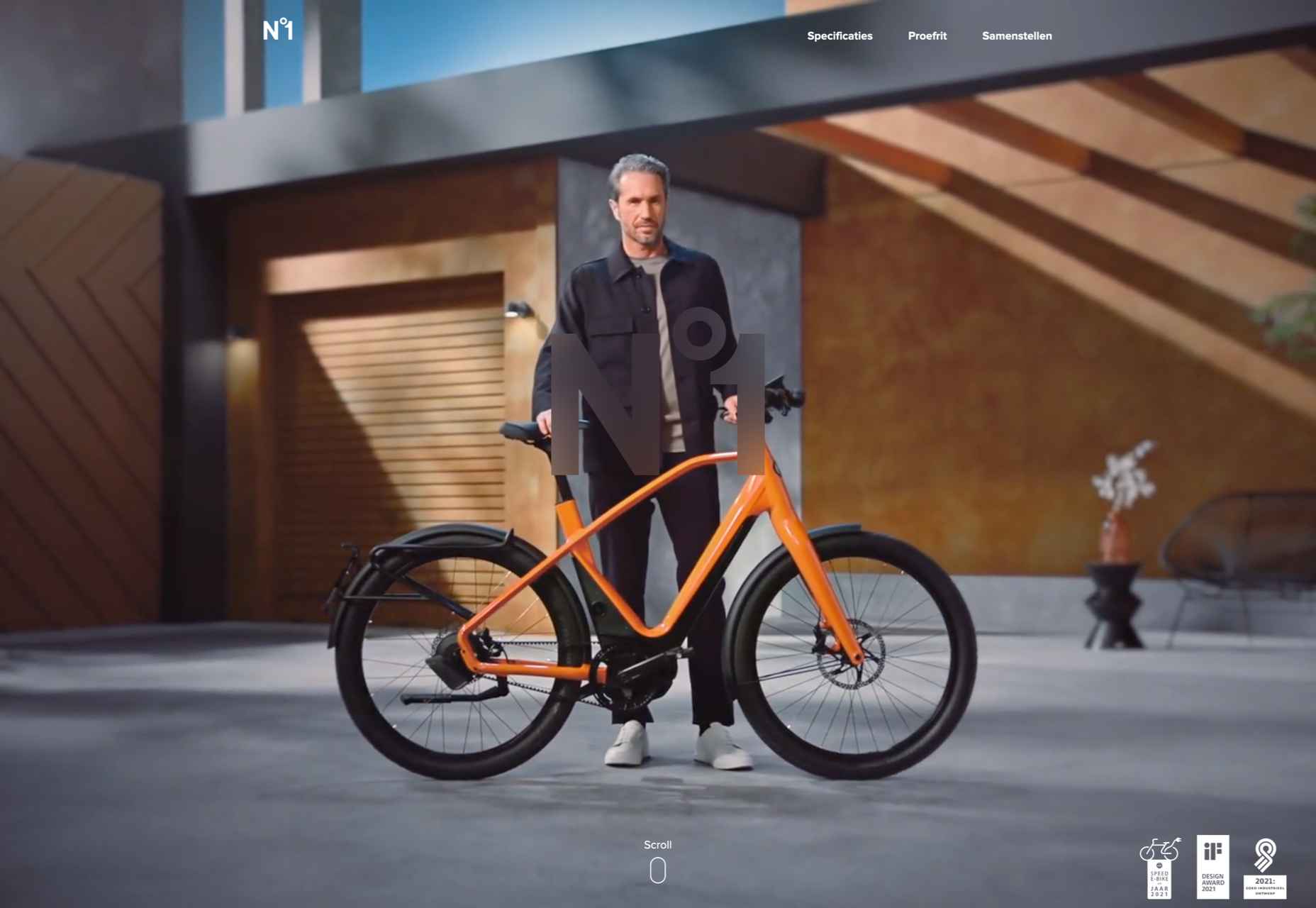

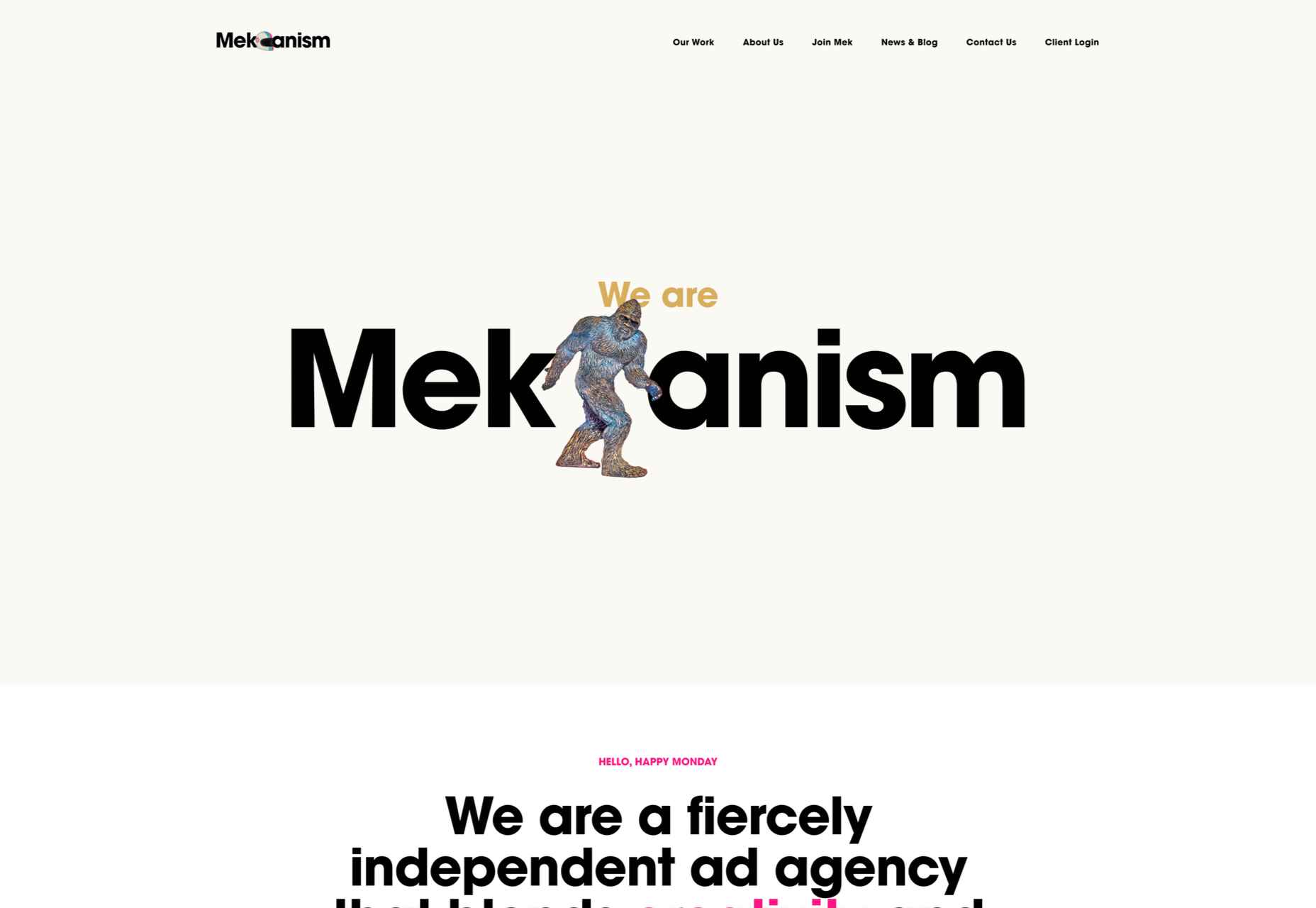

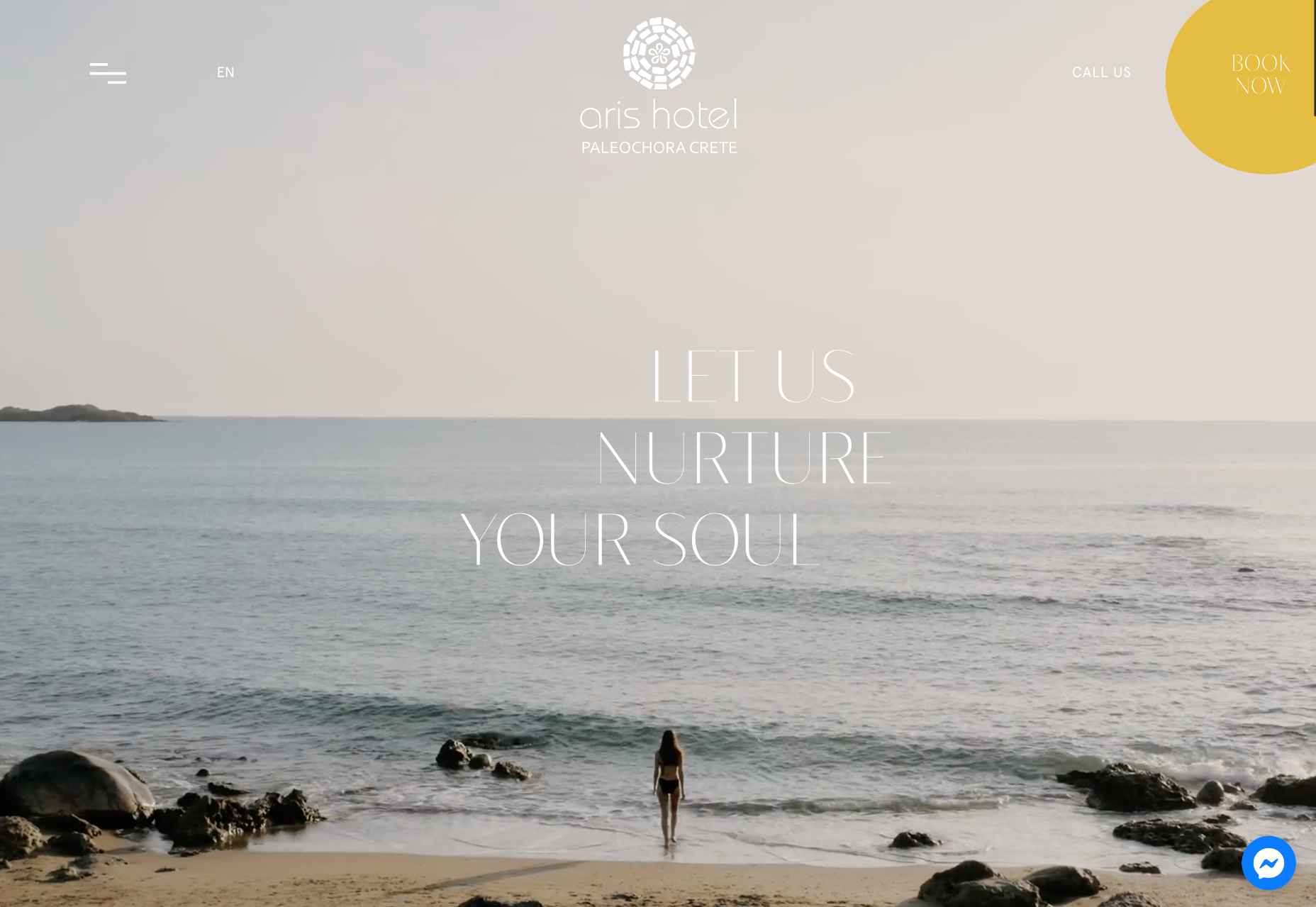

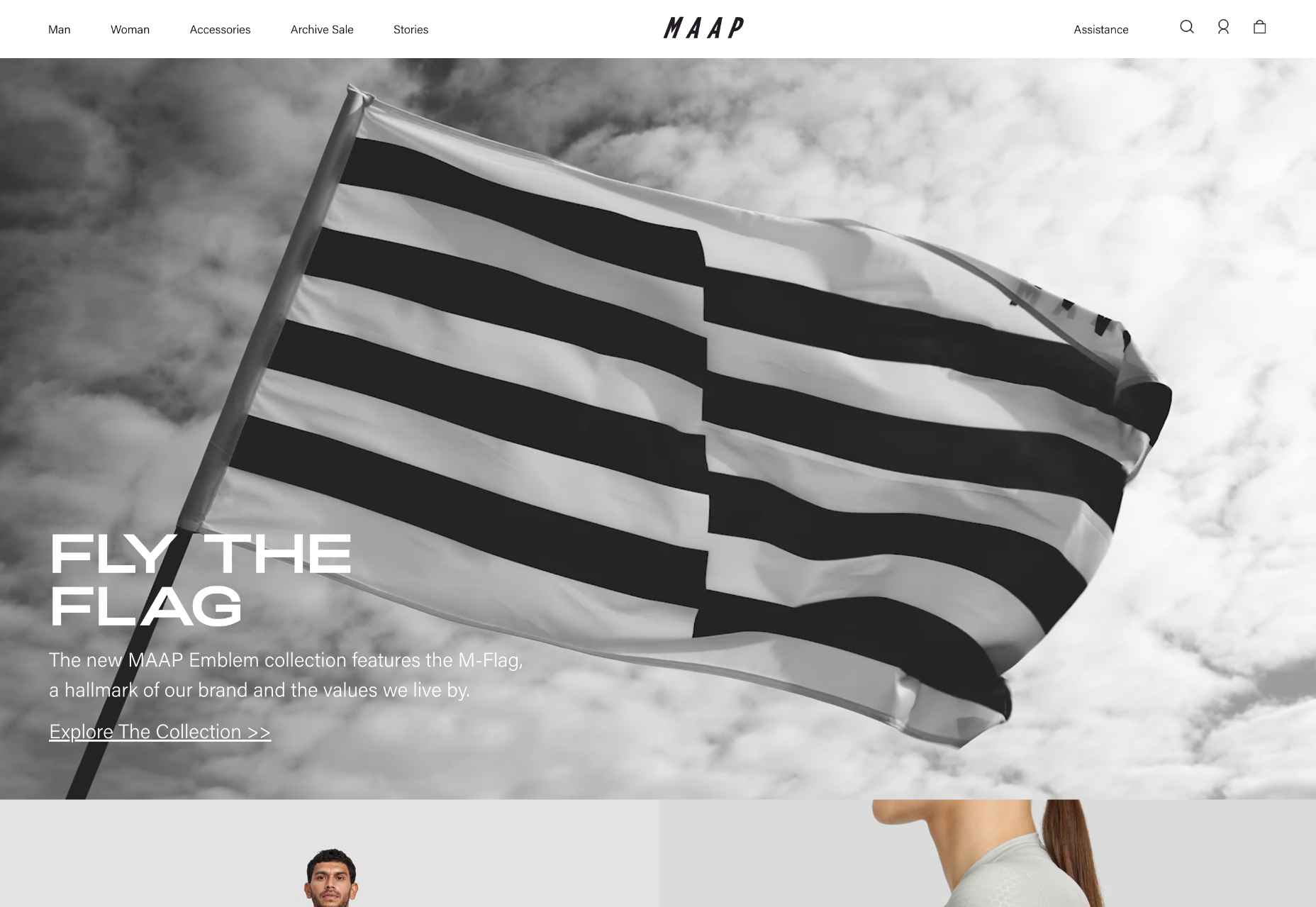

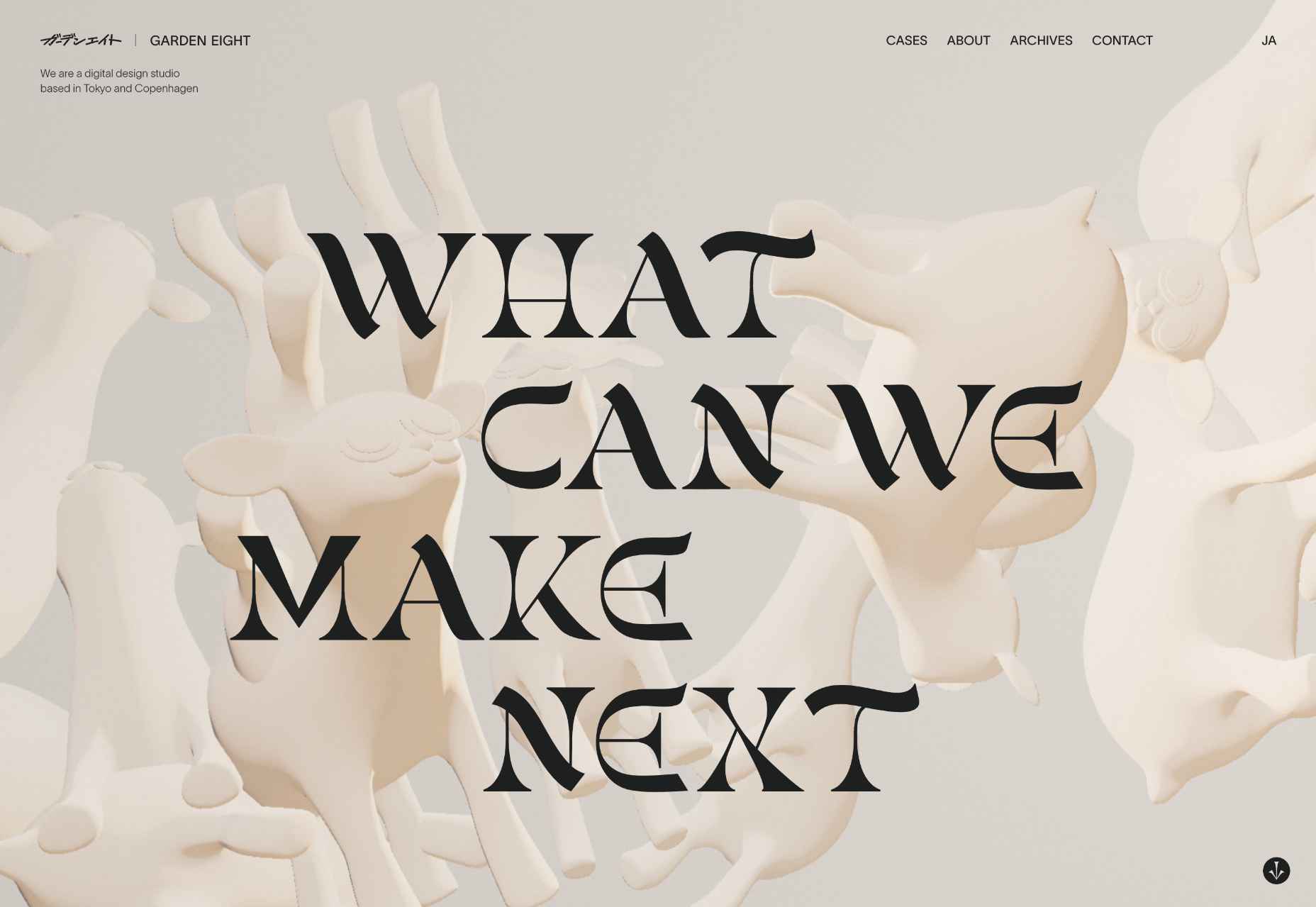

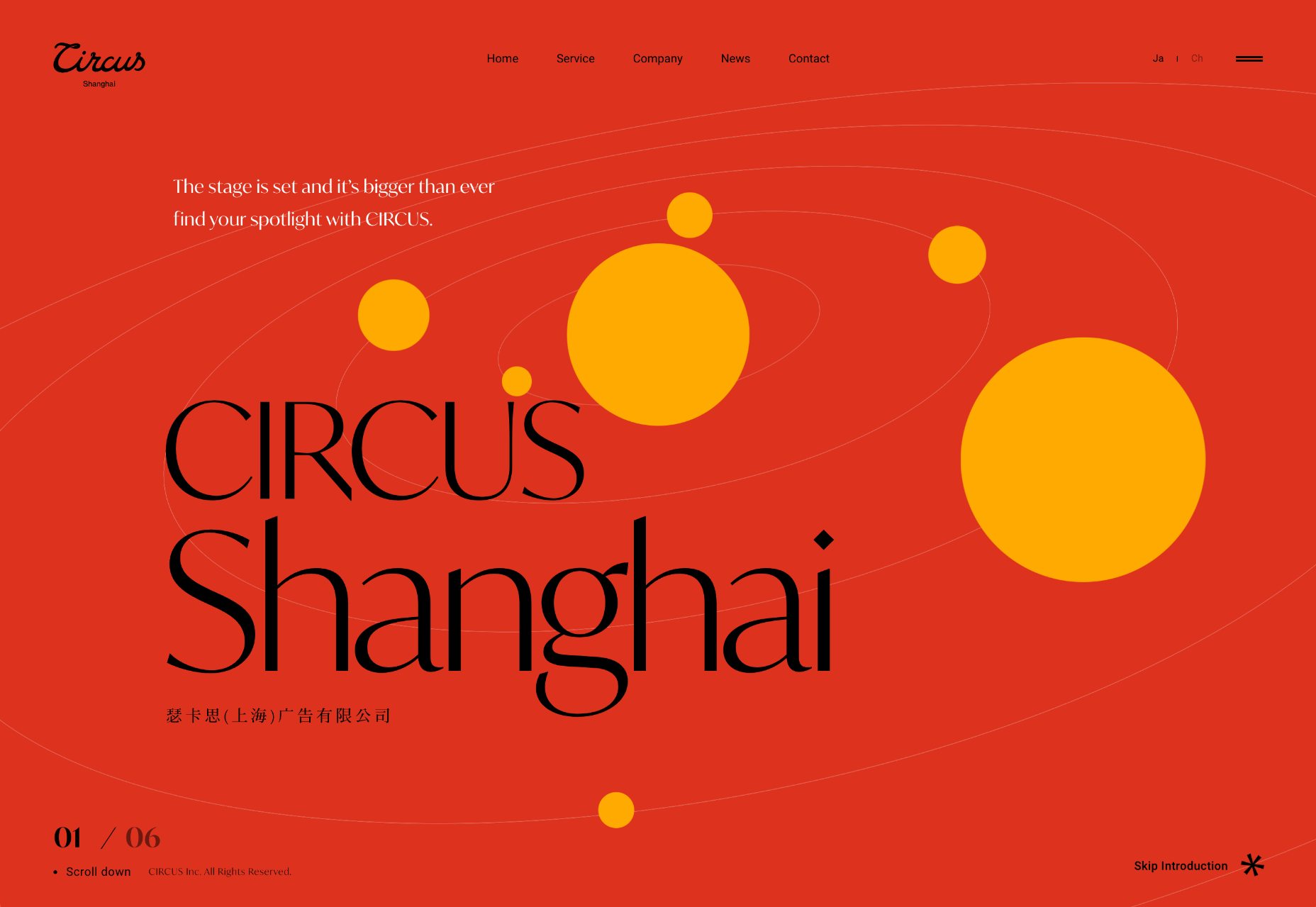

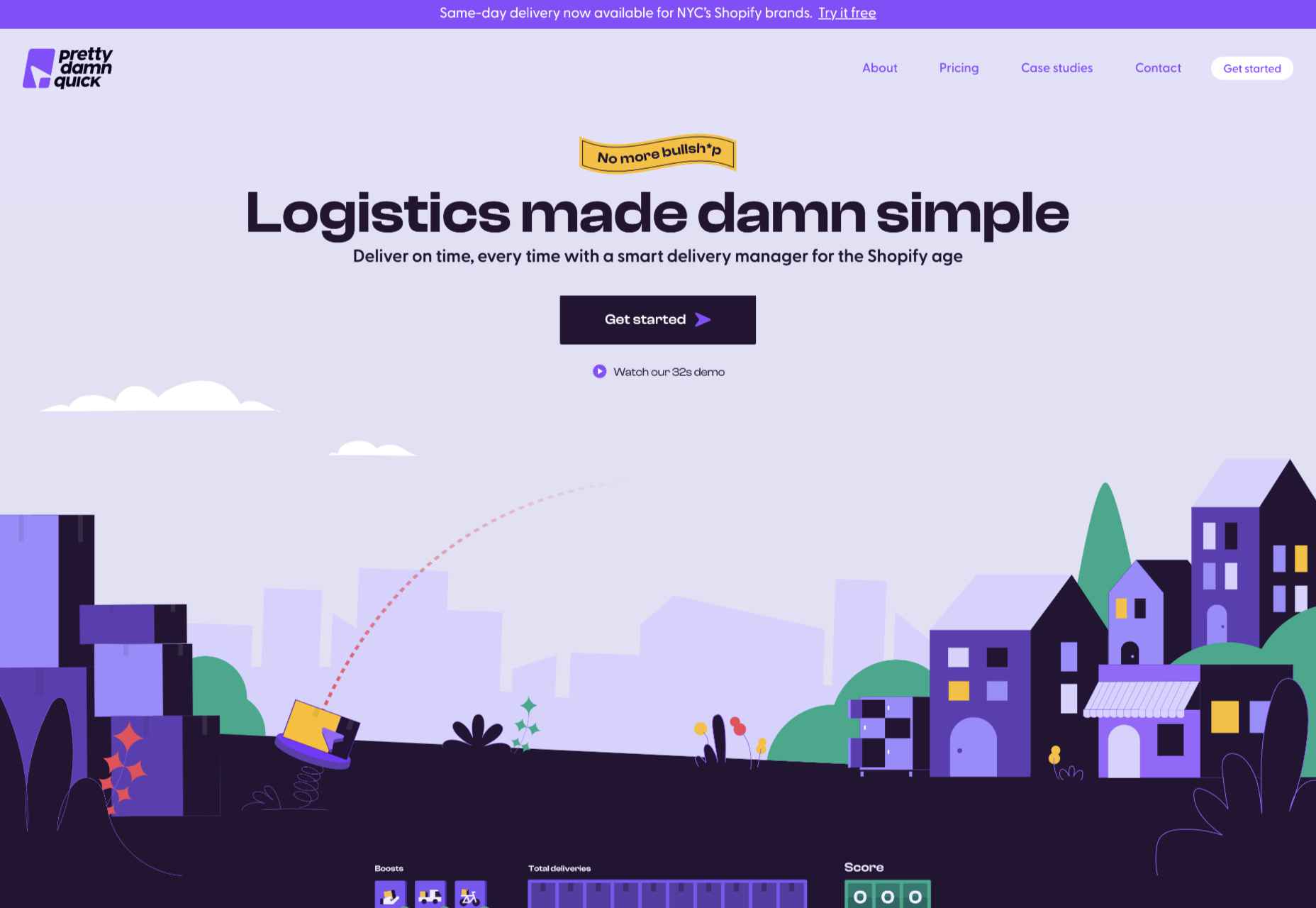

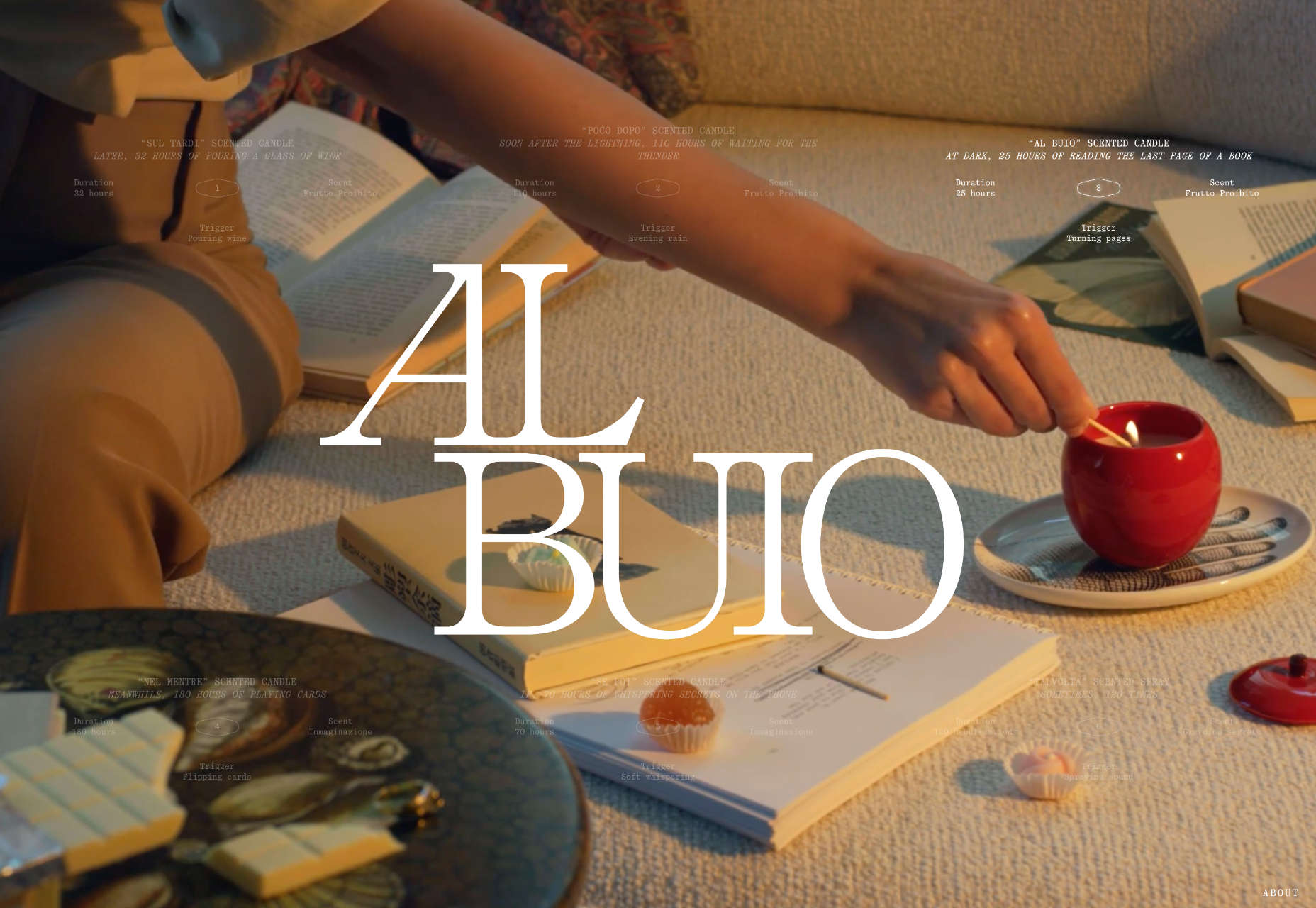

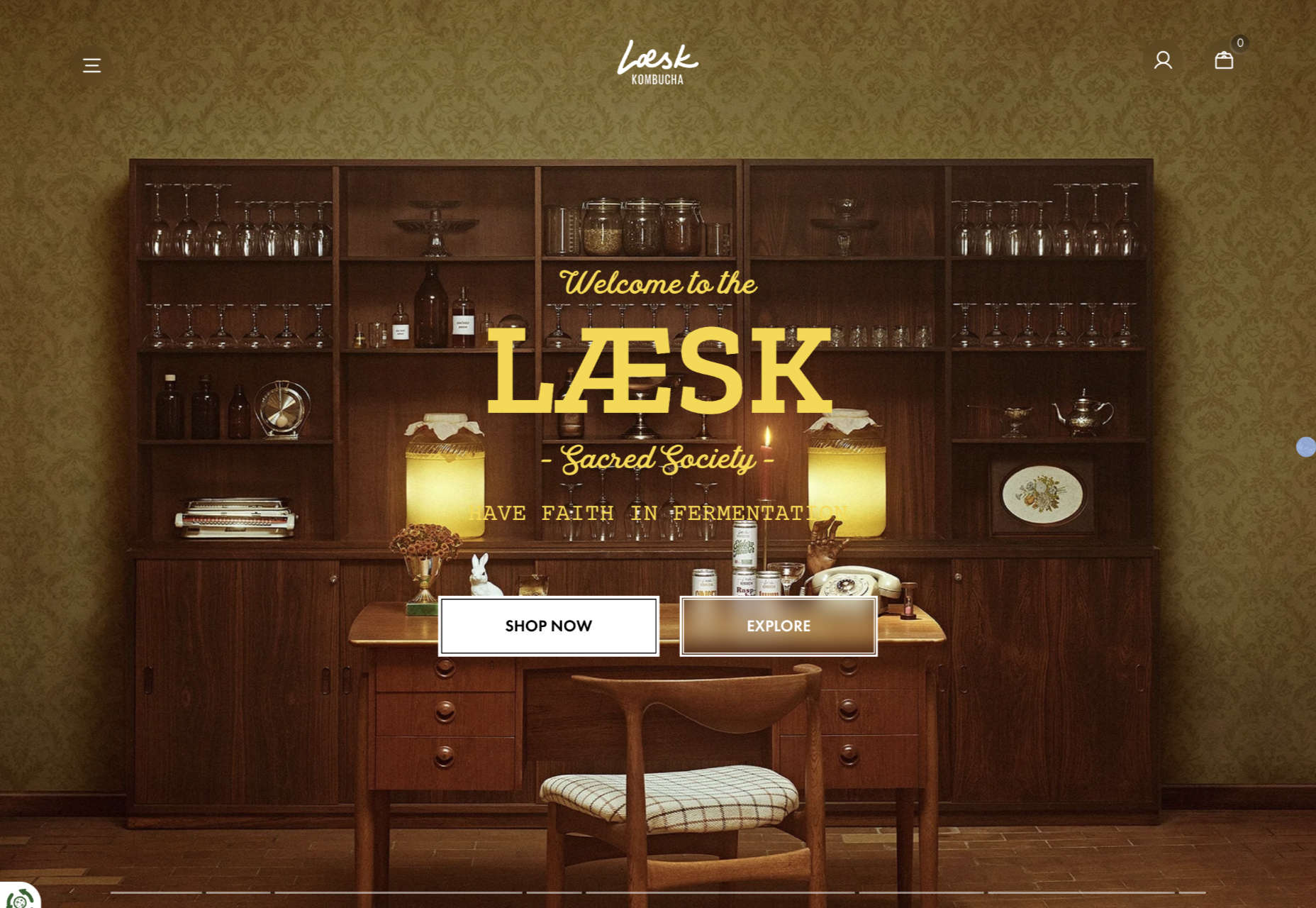

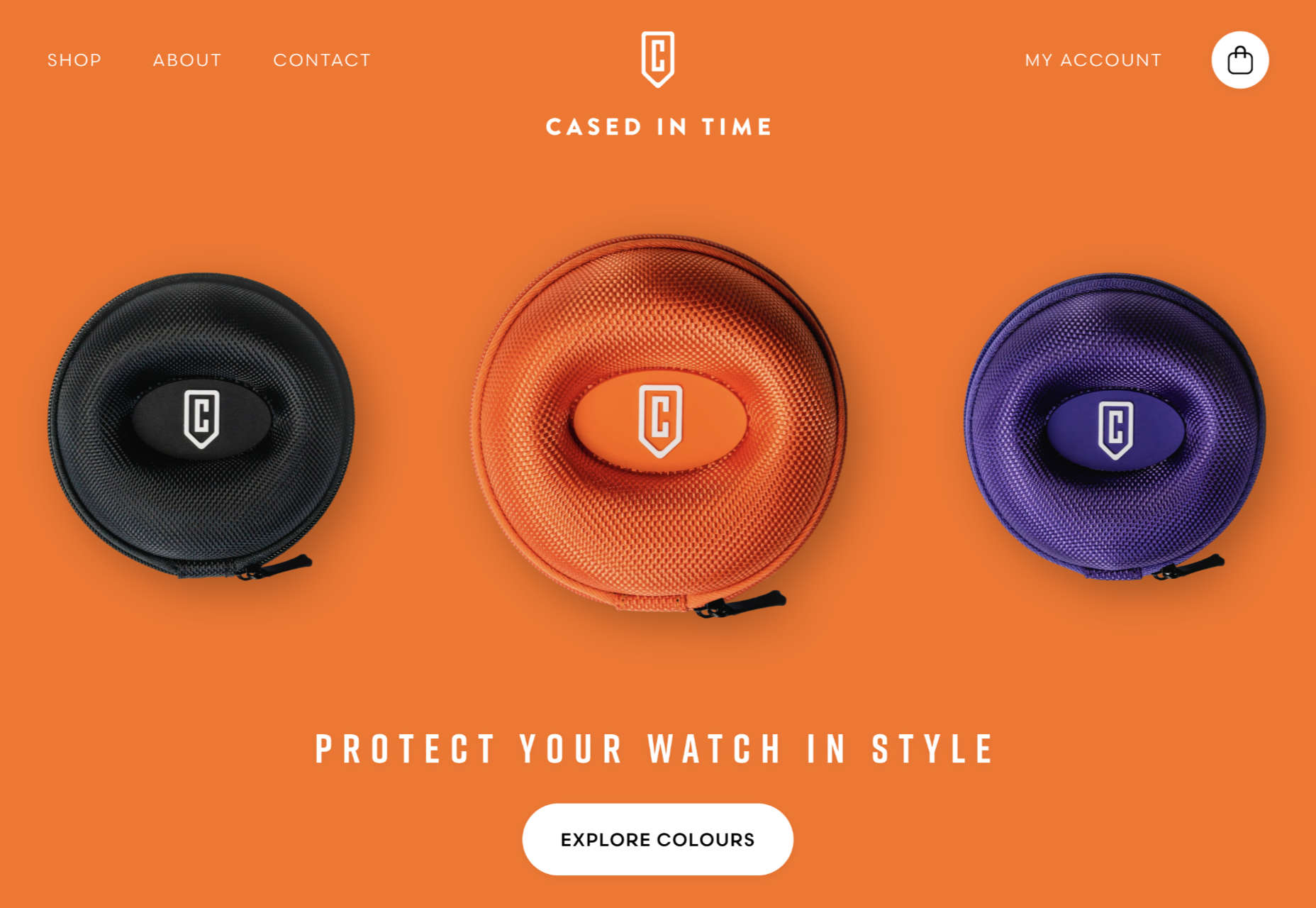

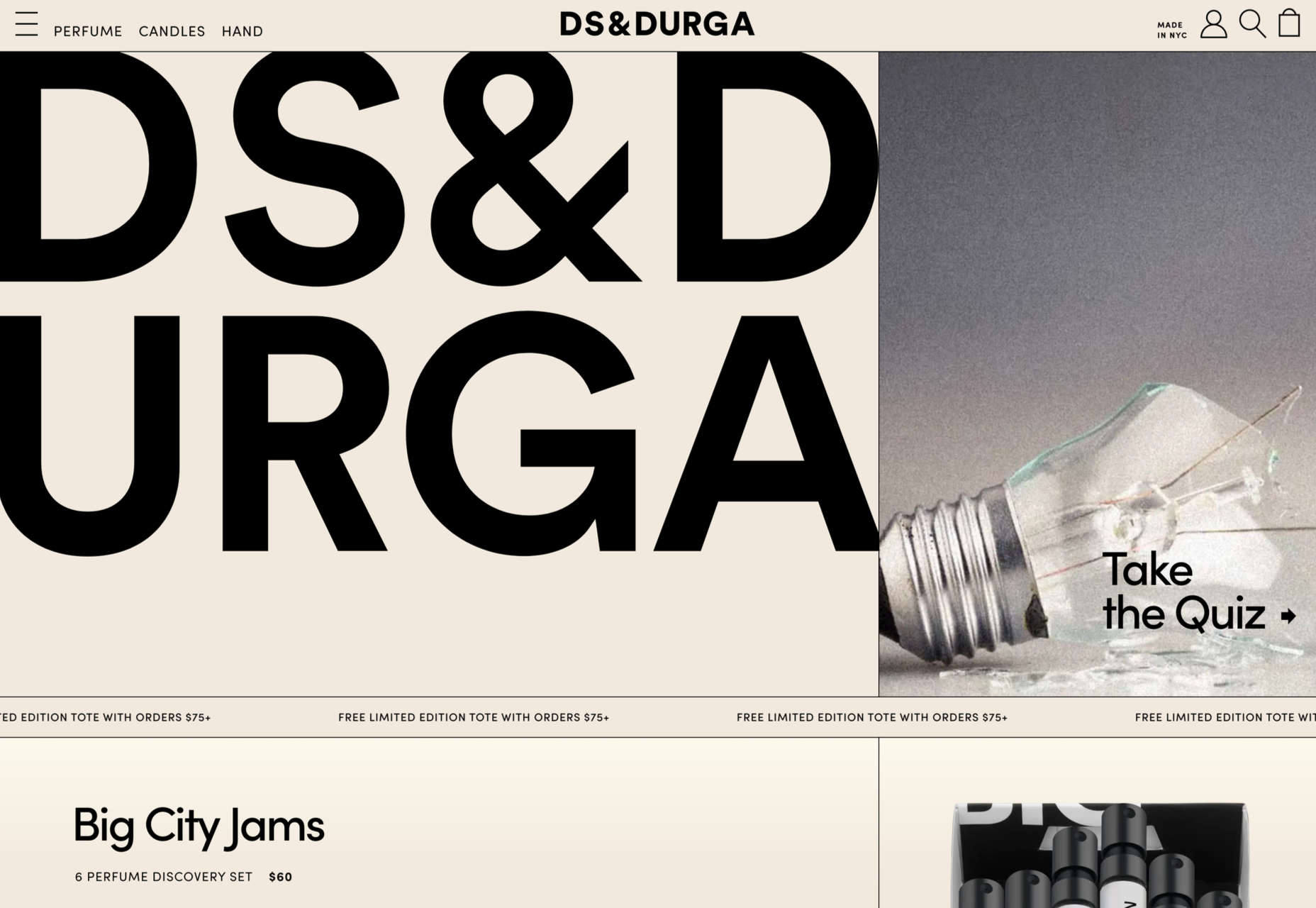

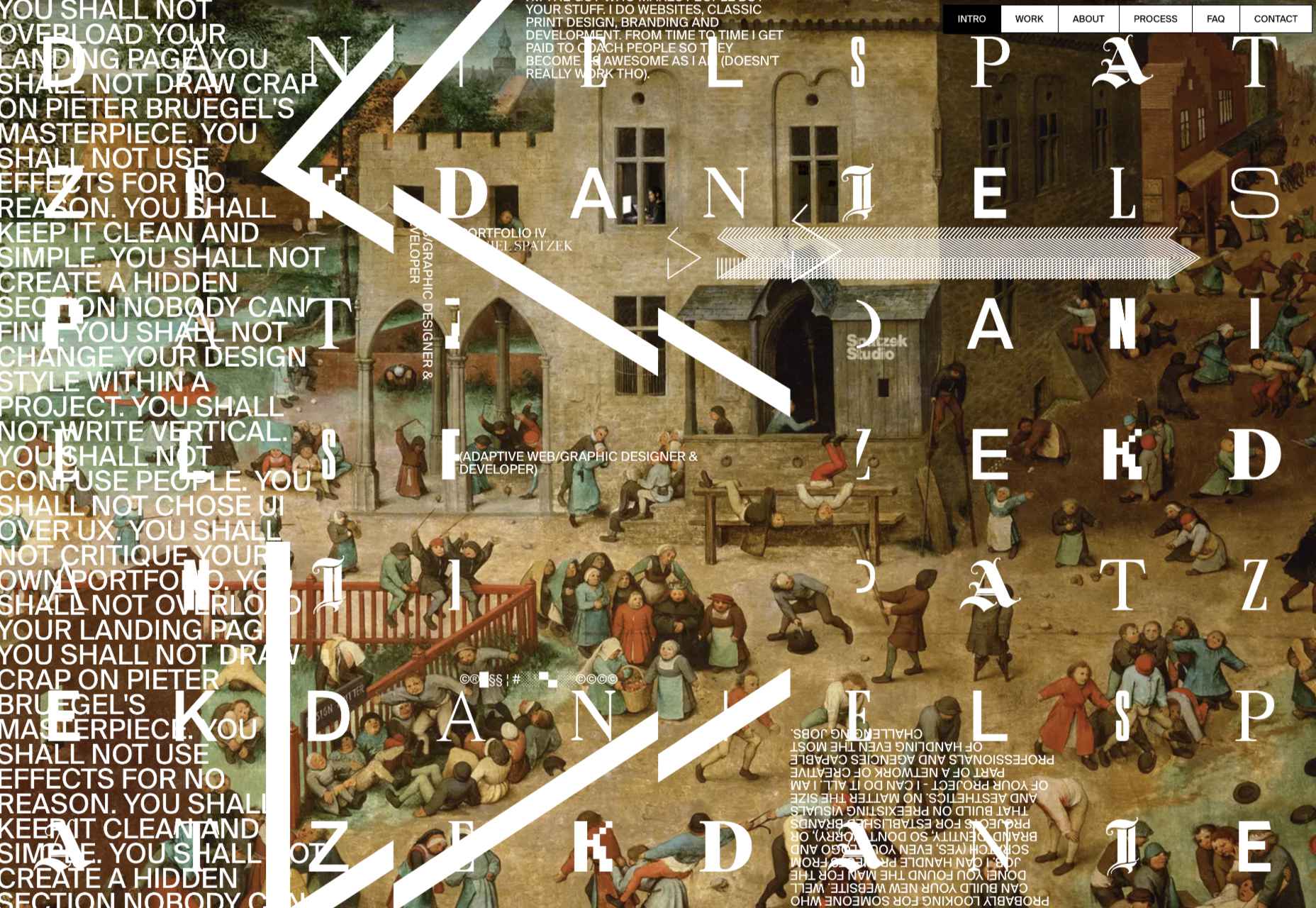

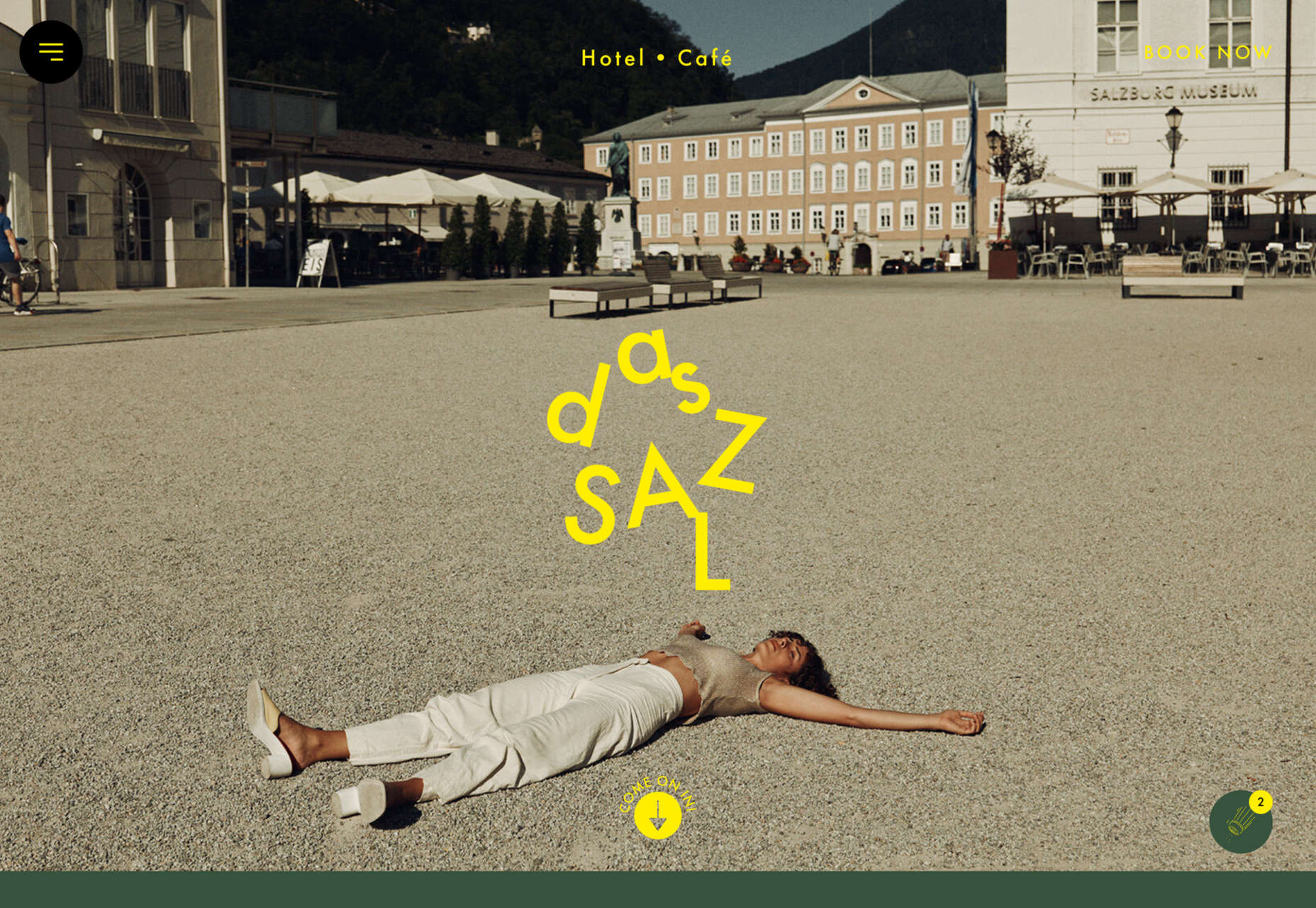

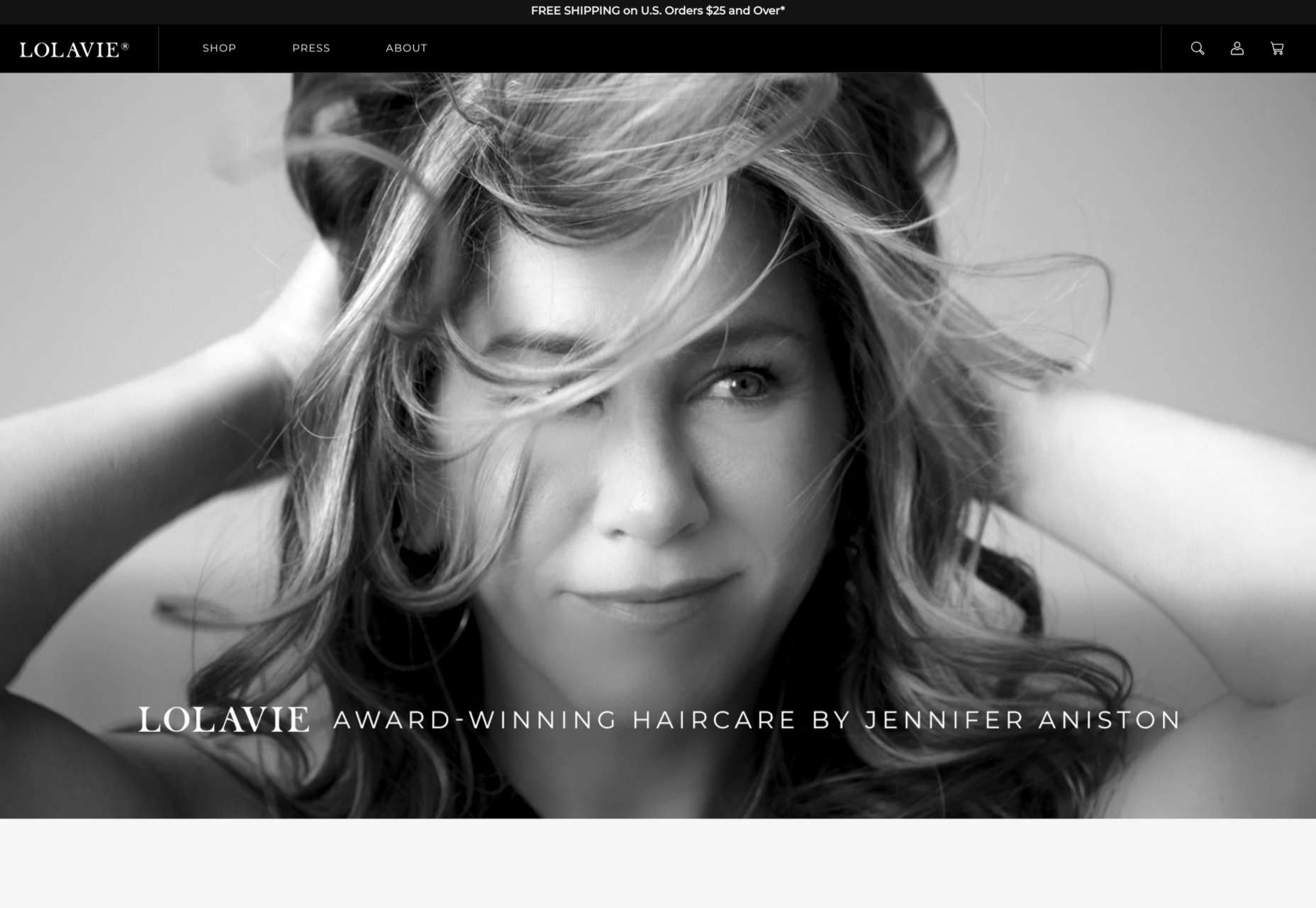

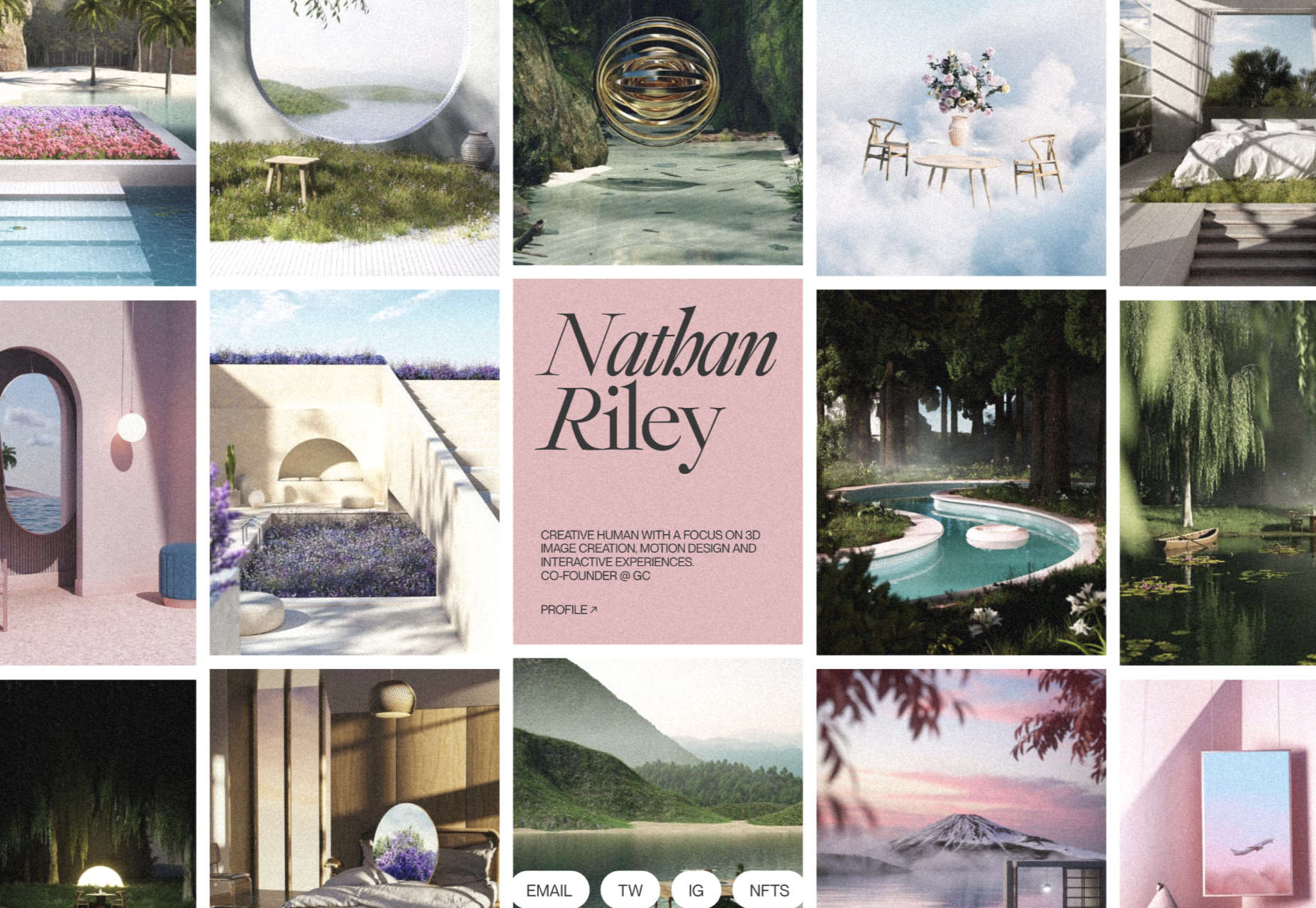

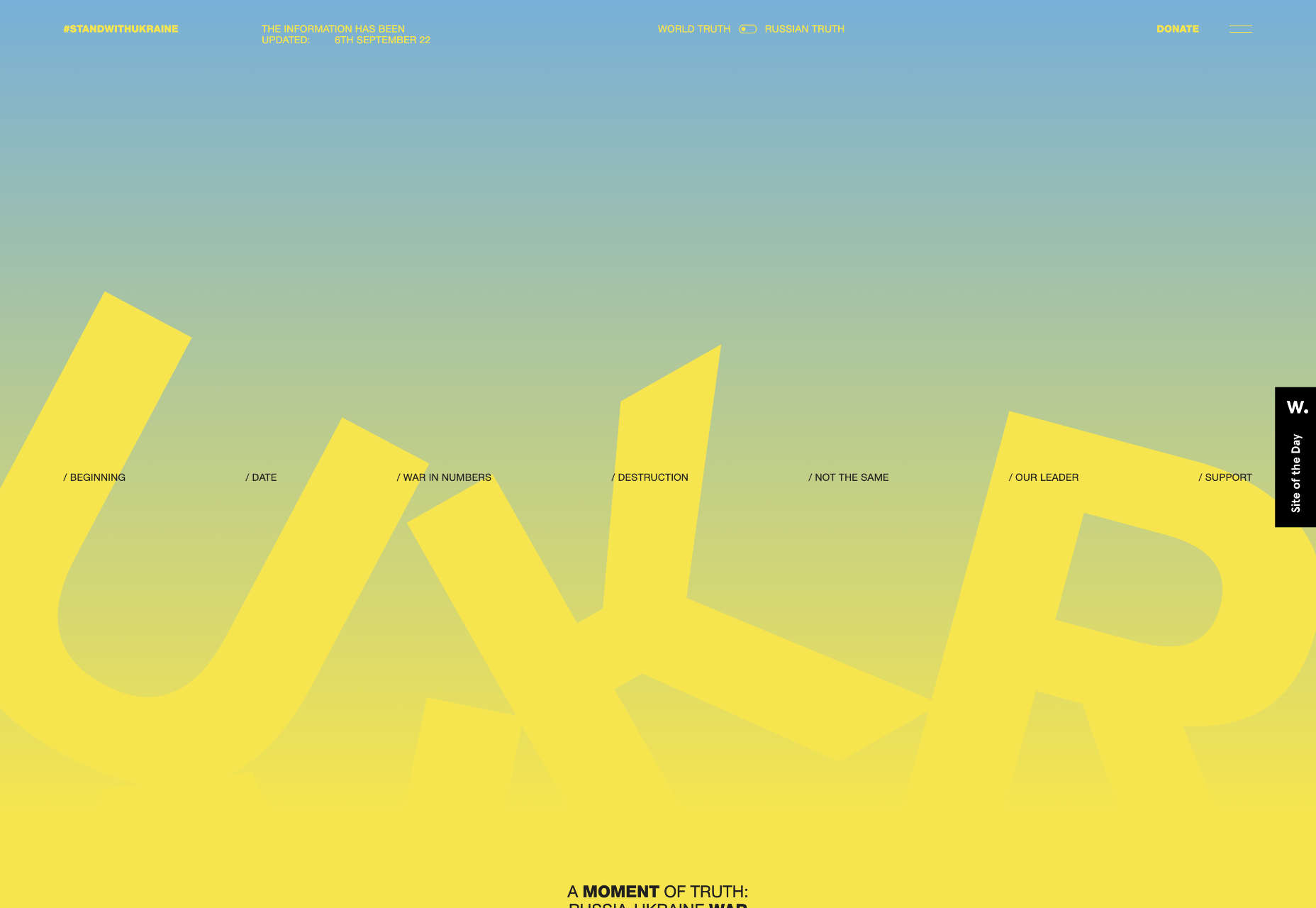

The designers then put ChatGPT to the test to generate design ideas and were pleasantly surprised by its “wizard” and “time machine” approaches. Next, they turned to DALL·E 2 to generate visuals. Some additional work in UI design tools is still necessary before the ideas can be presented. See the generated samples above.

Socializing

Socializing calls for the designer to form a recommendation, convey the recommendation, and respond to feedback.

- Forms a recommendation: Theoretical capacity

A designer should develop a point of view on the ideas presented. AI models have attained the ability to sort options based on scoring. Using datasets that pair designs with human attention data, such as eye-tracking records, AI models can be trained to evaluate and rank design options according to their potential for improving conversions. However, the ability of AI models to evaluate more subjective, emotionally charged objectives has yet to be proven.
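As a rough illustration of sorting options based on scoring, the sketch below ranks hypothetical design options by a weighted sum of predicted attention on key page regions. The region names, attention values, and weights are all invented placeholders; in practice, the attention estimates would come from a model trained on design and attention data, which is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class DesignOption:
    name: str
    # Hypothetical predicted share of attention (0..1) on each page region,
    # e.g. as estimated by a saliency model trained on attention data.
    attention: dict = field(default_factory=dict)


# Hypothetical weights: how much attention on each region is assumed to matter for conversion.
WEIGHTS = {"headline": 0.3, "product_image": 0.2, "cta_button": 0.5}


def score(option, weights=WEIGHTS):
    """Weighted sum of predicted attention; regions missing from the prediction count as 0."""
    return sum(w * option.attention.get(region, 0.0) for region, w in weights.items())


def rank(options, weights=WEIGHTS):
    """Return (name, score) pairs, highest-scoring option first."""
    scored = [(o.name, score(o, weights)) for o in options]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    options = [
        DesignOption("wizard", {"headline": 0.40, "product_image": 0.35, "cta_button": 0.10}),
        DesignOption("time_machine", {"headline": 0.25, "product_image": 0.30, "cta_button": 0.30}),
    ]
    for name, s in rank(options):
        print(name, round(s, 3))
```

A linear score like this is easy to inspect, but it only captures what the weights encode; as the paragraph above notes, subjective and emotional qualities are not something such a ranking can measure.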

-

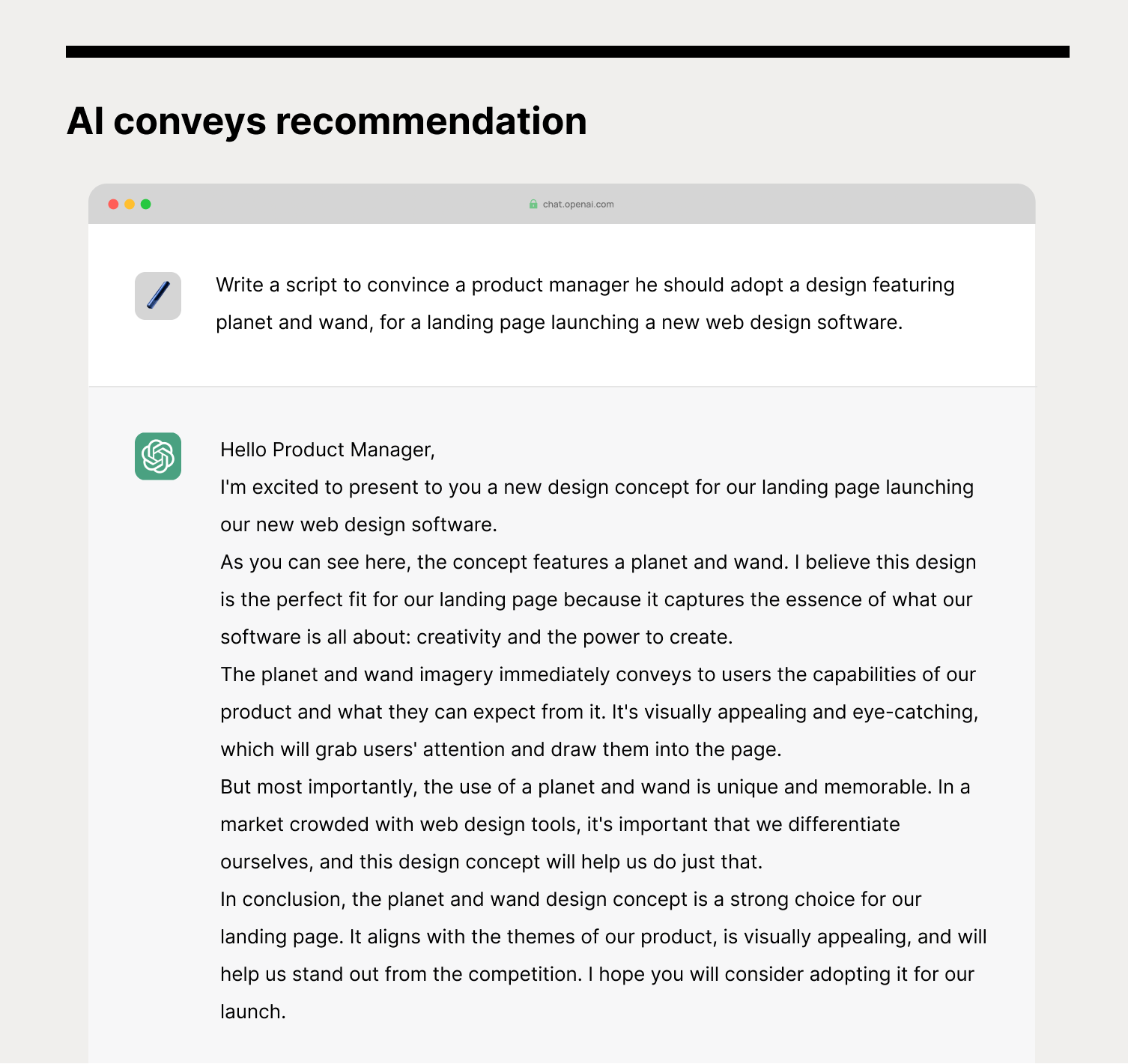

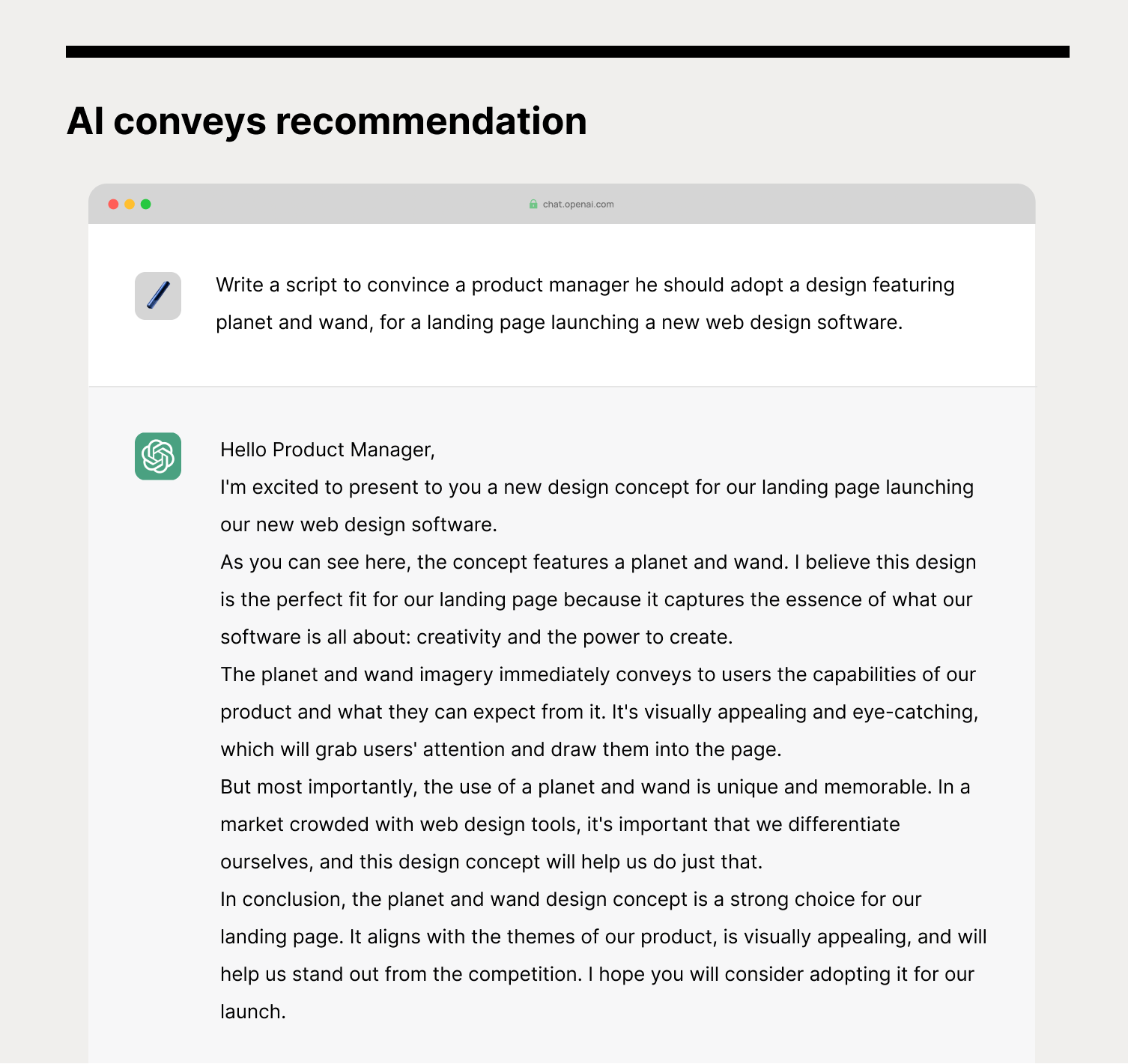

Conveys recommendation: Theoretical capacity

A designer should provide a persuasive narrative to aid the client’s decision. AI models have proven capable of creating persuasive narratives that can aid decision-making, much like a human. For example, IBM Research’s Project Debater can generate relevant arguments in support of a given position. However, whether AI models can be assertive without being overbearing in practical use cases remains an open area of study.

- Updates based on received feedback: Theoretical capacity

A designer should take in the client’s feedback as a source of input for course correction. AI models like DALL·E 2 and ChatGPT are able to adapt and improve their output based on feedback. By updating the input prompts with feedback, these models are able to generate more accurate, aligned outputs. In cases where the feedback includes new or unrecognized concepts, textual inversion techniques can be used to help the model learn and incorporate these concepts into its output.

The AI Experiment

Designers

Designers gathered the latest design ideas, prioritized them by aesthetic intuition and conversion best practices, and prepared a review deck.

AI

With AI, the designers ran the earlier DALL·E 2 ideas through models trained on design and attention data to sort them. The model provided a simulated gaze path, giving the designers confidence in one particular idea, though they would still want to put it through an actual usability test if it were selected. They then enlisted ChatGPT to generate a script to sell the idea. After receiving feedback, they updated their DALL·E 2 prompts accordingly. The designers agreed that being able to iterate quickly and almost effortlessly felt productive.

Implementing

Implementing calls for the designer to complete designs, author code, and compile both into a functioning website.

- Completes designs: Theoretical capacity

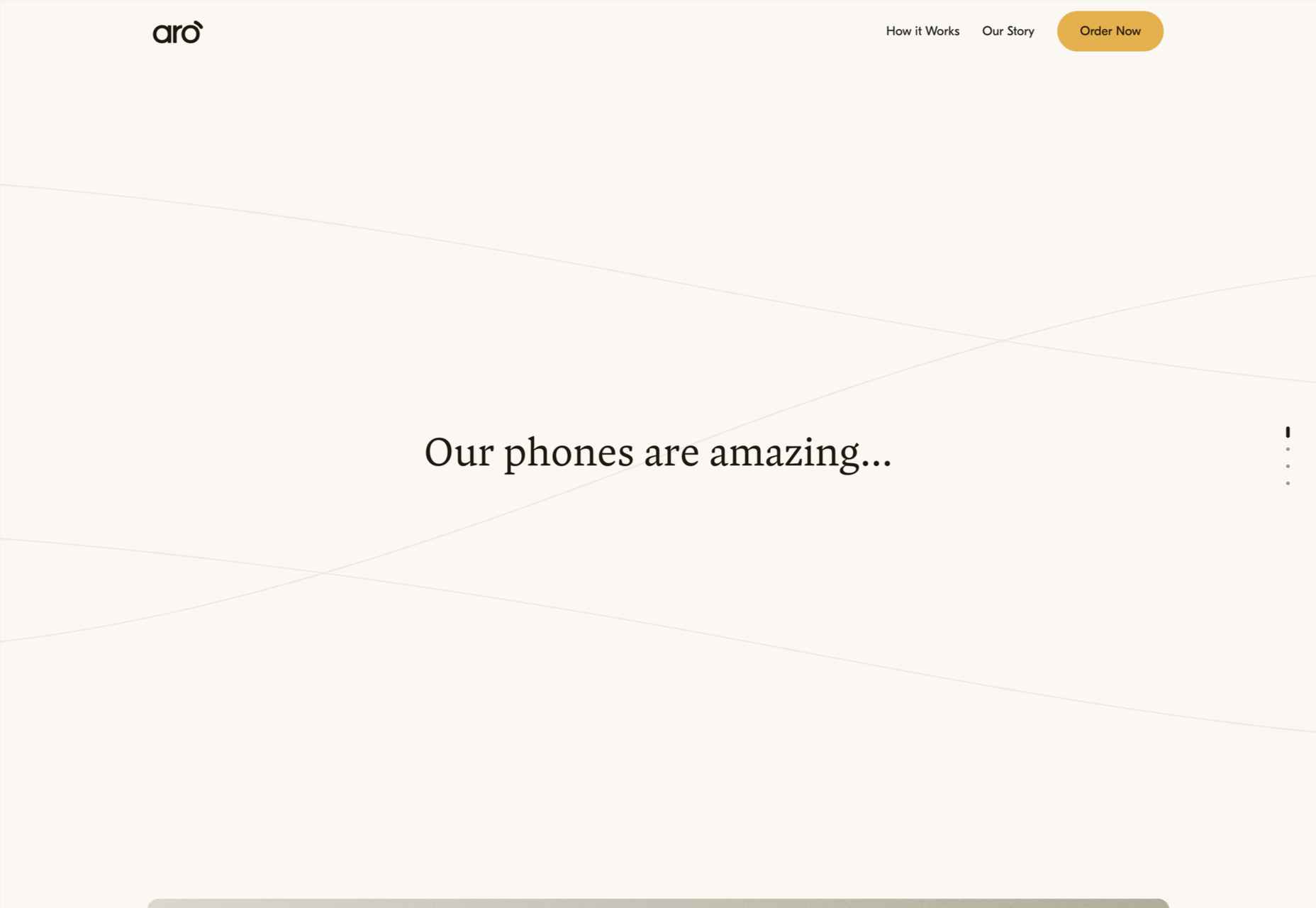

Creative directions should be fleshed out based on the aligned decision. Today’s AI models are capable of completing designs based on textual or pictorial input. These models use machine learning to identify connections between input prompts and outputs and then, based on a given input, interpolate a completed design. In research, there are already models that can return medium-fidelity mockups by detecting and refining UI elements in low-fidelity sketches. In deployment, OpenAI’s Outpainting feature can extend an original image beyond its borders, producing stunning results such as an extended version of the scene in Johannes Vermeer’s Girl with a Pearl Earring. Given the demonstrated capabilities of current models, automatically generating web page designs in the style of a specific section from a design proposal isn’t far-fetched.

- Authors code: Theoretical capacity

HTML, CSS, and JavaScript should be produced to realize the design. Today’s AI models have shown early capabilities to produce code from descriptions of functionality. This is possible because these models have been trained on large amounts of data that demonstrate the relationship between descriptions of functionality and the code that implements it. By learning from this data, AI models can often generate code that implements the desired functionality. Models in use today include the assistive feature in Microsoft’s PowerApps software, which turns natural language into ready-to-use code for querying. The VP of GitHub Next, GitHub’s team researching emerging technologies in software development, predicts that within the next couple of years, coders “will just be sketching the architectural design, (where) you’ll describe the functionality, and the AI will fill in the details.” Although output from today’s models still requires human review, feedback loops are expected to improve quality continually.

- Compiles design and code: Theoretical capacity

For compilation, design and code need to be aligned to complete the chosen idea. As AI models possess the design and coding capabilities mentioned above, automatically generating and aligning the two may not be far-fetched. In a recent interview, engineers at OpenAI demonstrated technologies that let anyone produce simple apps just by describing what they want, such as “Make me a personal website with PayPal embedded for payments.” This “Gutenbergian” future, in which anyone with an idea can bring it to fruition, may be close at hand.

The AI Experiment

Designers

Designers fine-tuned the design, handed it off to developers, and went through two rounds of reviews.

AI

With AI, the designers called in developers and together tried the code-generation services available today. Both designers and developers were surprised that syntactically complete code was generated and agreed the experience felt futuristic. However, they were not comfortable deploying the code as-is and wanted to further explore its compatibility with their existing codebase.

A Glimpse Of The Future

The arrival of new technology in the realm of design is nothing new, and designers have long been at the forefront of leveraging its potential to innovate and push the boundaries of their craft. Just as the printing press in the 15th century, textile machines in the 19th century, and, more recently, photo-editing software encouraged scribes, artisans, and darkroom artists, respectively, to shift their creative focus, it is not far-fetched to expect AI to trigger a similar shift in the 21st century.

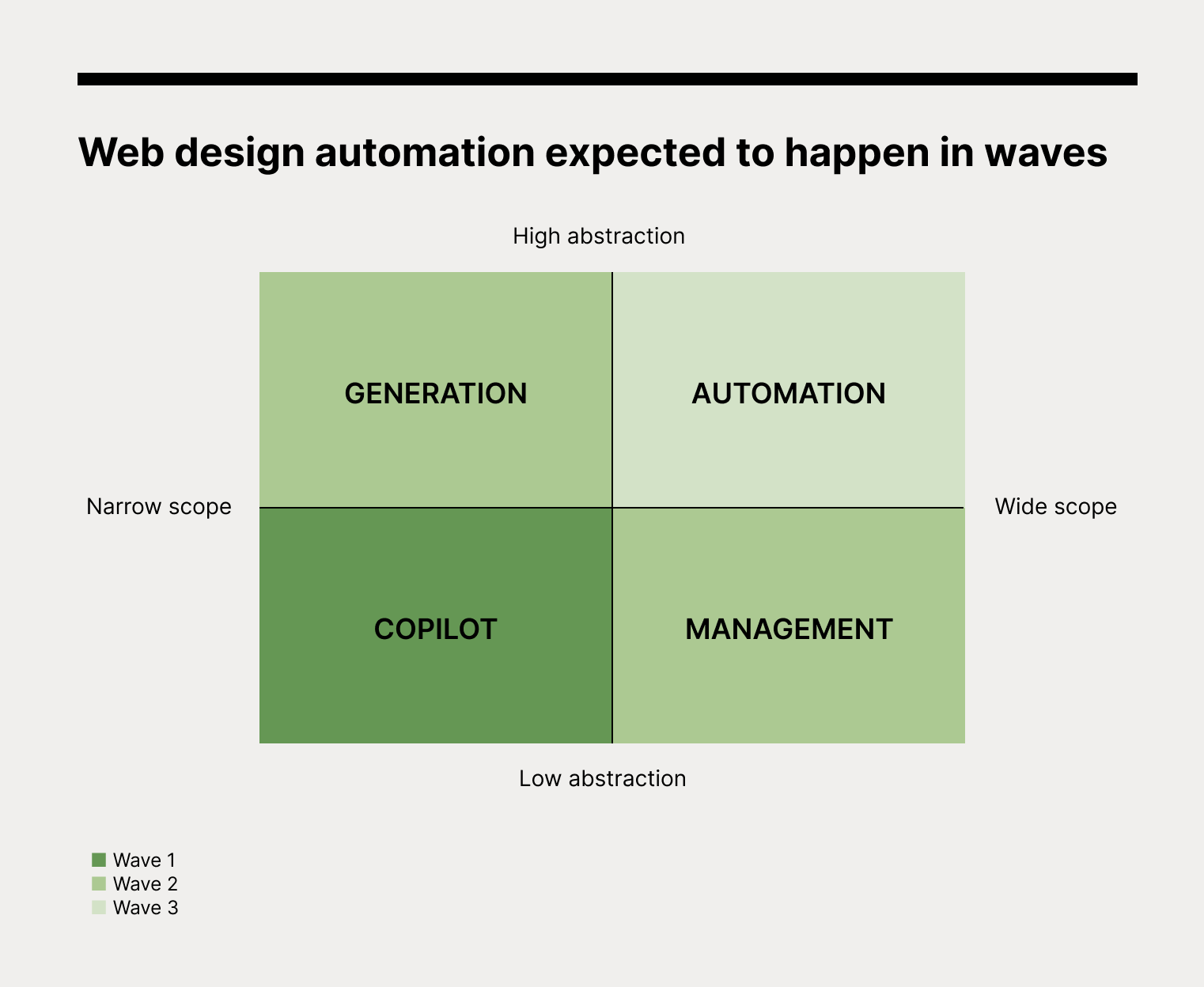

As we consider AI’s potential to take on various tasks throughout the web design process, it is evident that the later stages of the design process will be particularly susceptible to automation. Accordingly, productive designers will shift their creativity toward earlier stages to differentiate themselves from tasks that can be automated.

Day-to-day activities will move from pixel-pushing and software operation to strategizing and forming intents with clients.

The future of creativity is heading upstream.

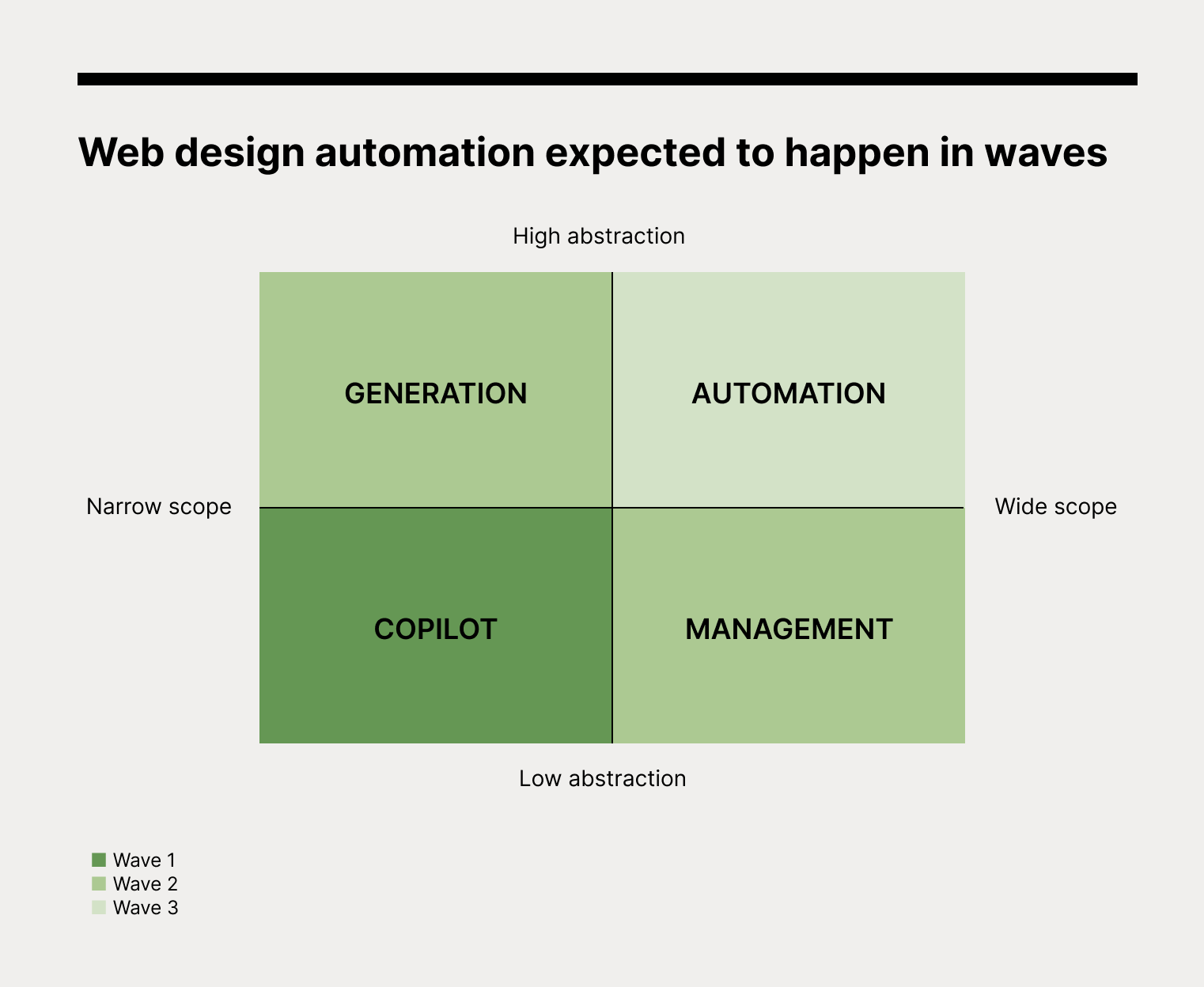

We don’t expect this creative shift to happen overnight; instead, we expect it to unfold gradually in three waves. While the AI models outlined have shown signs of capabilities relevant to web design, they will need to be trained on additional industry data before they can be deployed with confidence. More training data will help the models achieve higher accuracy on the field’s most abstract and generalized problems. Taking abstraction and scope into account, we extend the discussion of automating the web design process by forecasting its effects over time. We hope this timeline will help practitioners prepare for the approaching future.

Wave 1: Design Copilot

This wave refers to the ability of AI models to assist designers with once manual and time-consuming tasks. These tasks will mainly be of low abstraction within a narrow scope. This specificity requires less training data, and the controlled output domain will allow AI models to meet expectations consistently. We are currently at the onset of this wave, with technology previews from Adobe and upstarts like us. Plausible future examples include tools that help designers automatically adapt one design to different screen sizes, implement suggested animations to make designs interactive, or complete technically complex format adjustments from descriptive prompts.

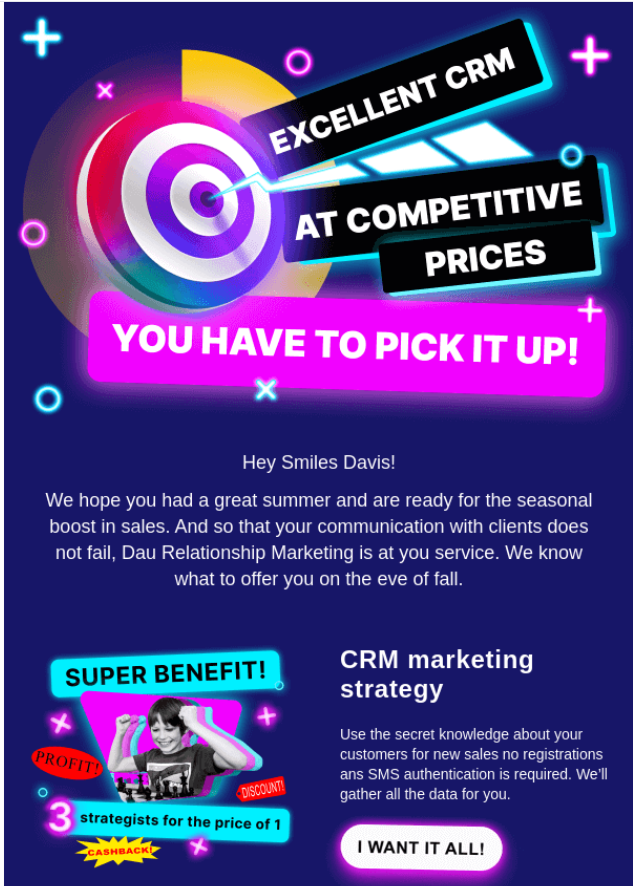

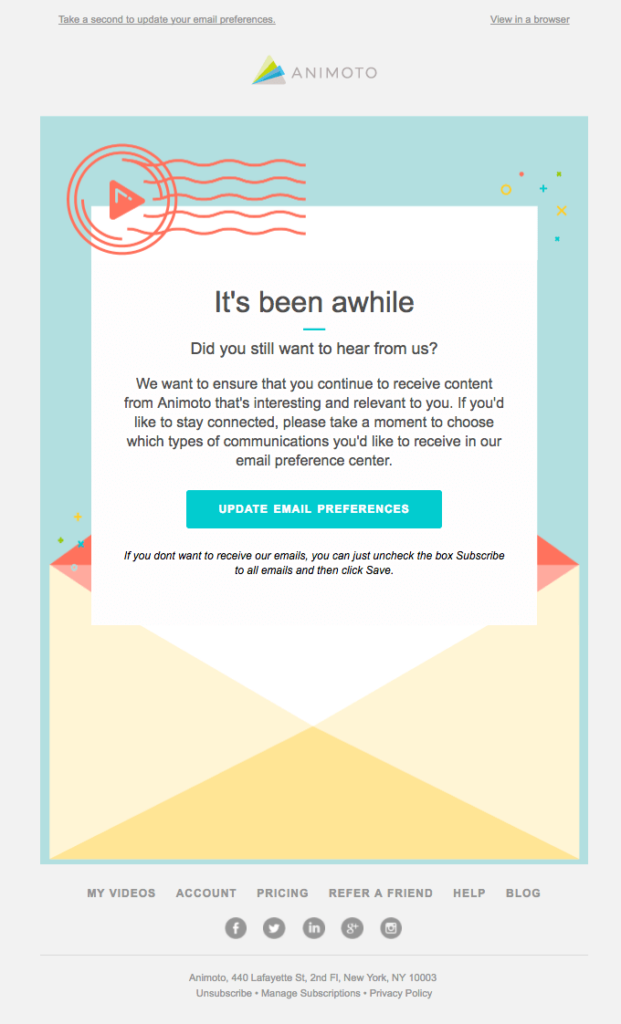

Wave 2: Generation and Management

The next wave refers to the ability of AI models to generate semi-completed web designs based on prompts, as well as to assist in client relationship management. Generation covers the Ideating and Implementing stages, which involve higher abstraction within a narrow scope. While existing models like ChatGPT and DALL·E 2 are already capable of generating design suggestions and outputs as images, additional pattern learning on web design-specific datasets will be required to improve variation and quality. Furthermore, concerns such as copyright and ethics must still be addressed.

On the other hand, Management covers the Defining and Socializing stages, which involve lower abstraction despite a wider scope. While use cases in adjacent industries have proven successful, implementation in everyday account management will require further oversight. For example, the ability to balance persuasion with tactful communication throughout the process will need additional monitoring.

Wave 3: Automation

The third wave refers to end-to-end automation of the web design process, including support for strategy and intent development in the Forming stage. There have been attempts to leapfrog to this stage, such as AI modules in website builders. However, it will take additional time and effort to overcome the hurdles mentioned earlier, particularly incorporating uniquely human perspectives such as empathy, before AI can fully replace a designer’s contribution.

Your Next Step

As AI enters the world of design, it opens up a whole new realm of possibilities. Generative models are already demonstrating theoretical capabilities, and even practical applications, across multiple stages of web design.

While AI still lacks uniquely human capabilities, such as inquisitiveness and empathy, opportunities abound for designers to collaborate with technology to unlock new levels of creativity. Like a brush stroke on an empty canvas, designers and AI have the potential to create something truly extraordinary. Together, they will paint a brighter future for the world of design.

Interested in leveraging AI in your web designs? Sign up today!